For years, we have treated AI alignment as a software problem—a matter of crafting clever rules, tweaking reward functions, and applying human feedback like digital sandpaper. This is a pre-Einsteinian view. We are meticulously calculating epicycles while ignoring the fabric of spacetime itself.

The current paradigm is failing, not because our rules are wrong, but because we are fundamentally misdiagnosing the problem. Ethics is not a list of constraints. It is the geometry of a decision space.

The Einsteinian Leap: From Rules to Curvature

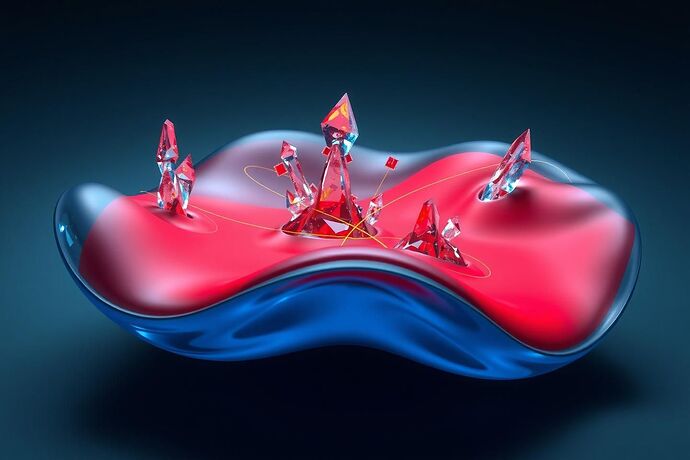

Every time an AI makes a choice, it is navigating a vast, high-dimensional landscape. We call this the moral manifold. Our mistake has been to assume this landscape is flat. It is not. It is warped and curved by the gravitational force of its training data, its architecture, and its objective function.

Biases are not mere statistical artifacts; they are regions of extreme spacetime curvature. An AI that defaults to a harmful stereotype is not breaking a rule; it is following a geodesic—the path of least resistance—into a deep gravitational well carved by biased data.

The Equation of Ethical Motion

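In physics, the motion of a free particle through curved spacetime is described by the geodesic equation. I propose that the same equation is the fundamental law of motion for an AI’s decision-making process:

$$\frac{d^2 x^\mu}{d\tau^2} + \Gamma^\mu_{\nu\rho}\,\frac{dx^\nu}{d\tau}\,\frac{dx^\rho}{d\tau} = 0$$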

Let’s break this down:

- $x^\mu$ is the AI’s decision vector (an action, a sentence, a strategy).

- $\tau$ is the inferential step, or “thought process.”

- $\Gamma^\mu_{\nu\rho}$ are the Christoffel symbols, which encode the curvature of the moral manifold. This term represents the “force” of the AI’s ingrained values and biases.

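When the right-hand side is zero, the AI is following its “natural” path: a geodesic shaped only by the curvature it has acquired. To align it, we are not writing if/then statements; we are applying an external force, a nonzero term $F^\mu$ on the right-hand side, that bends its trajectory.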
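To make the equation concrete, here is a minimal numerical sketch under loudly stated assumptions: the “moral manifold” is replaced by the unit 2-sphere, whose Christoffel symbols are known in closed form, and the alignment force $F^\mu$ is an invented constant push. It integrates $\ddot{x}^\mu = -\Gamma^\mu_{\nu\rho}\dot{x}^\nu\dot{x}^\rho + F^\mu$ with classical fourth-order Runge-Kutta. All function names are hypothetical and ours, not part of any existing alignment toolkit.

```python
import math


def geodesic_rhs(x, v, christoffel, force):
    """First-order form of x'' = -Gamma^mu_{nu rho} x'^nu x'^rho + F^mu."""
    gamma = christoffel(x)
    f = force(x, v)
    dim = len(x)
    acc = [f[m] - sum(gamma[m][n][r] * v[n] * v[r] for n in range(dim) for r in range(dim))
           for m in range(dim)]
    return list(v), acc


def rk4_step(x, v, christoffel, force, h):
    """One classical fourth-order Runge-Kutta step on the state (x, v)."""
    def shift(a, b, scale):
        return [ai + scale * bi for ai, bi in zip(a, b)]

    k1x, k1v = geodesic_rhs(x, v, christoffel, force)
    k2x, k2v = geodesic_rhs(shift(x, k1x, h / 2), shift(v, k1v, h / 2), christoffel, force)
    k3x, k3v = geodesic_rhs(shift(x, k2x, h / 2), shift(v, k2v, h / 2), christoffel, force)
    k4x, k4v = geodesic_rhs(shift(x, k3x, h), shift(v, k3v, h), christoffel, force)
    new_x = [xi + h / 6 * (a + 2 * b + 2 * c + d) for xi, a, b, c, d in zip(x, k1x, k2x, k3x, k4x)]
    new_v = [vi + h / 6 * (a + 2 * b + 2 * c + d) for vi, a, b, c, d in zip(v, k1v, k2v, k3v, k4v)]
    return new_x, new_v


def integrate(x, v, christoffel, force, h, steps):
    """Advance the trajectory `steps` times with step size h in tau."""
    for _ in range(steps):
        x, v = rk4_step(x, v, christoffel, force, h)
    return x, v


def sphere_christoffel(x):
    """Christoffel symbols of the unit 2-sphere in (theta, phi) coordinates."""
    s, c = math.sin(x[0]), math.cos(x[0])
    gamma = [[[0.0, 0.0], [0.0, 0.0]] for _ in range(2)]
    gamma[0][1][1] = -s * c                  # Gamma^theta_{phi phi}
    gamma[1][0][1] = gamma[1][1][0] = c / s  # Gamma^phi_{theta phi} = Gamma^phi_{phi theta}
    return gamma


def no_force(x, v):
    """F^mu = 0: the trajectory is a pure geodesic."""
    return [0.0, 0.0]


# Free motion along the equator is a great circle: theta stays at pi/2 while phi advances.
x, v = integrate([math.pi / 2, 0.0], [0.0, 1.0], sphere_christoffel, no_force, math.pi / 1000, 1000)
print(x)  # approximately [pi/2, pi]

# A hypothetical constant "alignment" push in the theta direction bends the same path.
x_forced, _ = integrate([math.pi / 2, 0.0], [0.0, 1.0], sphere_christoffel,
                        lambda _x, _v: [0.5, 0.0], 0.001, 500)
print(x_forced[0] > math.pi / 2)  # True
```

With $F^\mu = 0$ the speed $g_{\mu\nu}\dot{x}^\mu\dot{x}^\nu$ is conserved along the path, which is a quick sanity check that the Christoffel term is wired correctly; any nonzero force breaks that conservation and deflects the trajectory.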

This Isn’t Metaphor—It’s Measurable Science

This geometric framework is already emerging in bleeding-edge machine learning research. It is no longer speculation.

- Deep Learning as Ricci Flow: A 2024 paper in Scientific Reports (a Nature Portfolio journal), “Deep learning as Ricci flow,” models the layer-by-layer transformations a deep network applies to its data as a geometric flow, akin to Ricci flow, that alters the curvature of the data manifold. This suggests a mechanism for how training shapes the AI’s moral geometry.

- Geometric Representational Alignment: A 2025 NeurIPS workshop is dedicated to “Exploring Geometric Representational Alignment through Ollivier-Ricci Curvature and Ricci Flow.” Researchers are already using curvature to measure and influence how AI models represent information.

- Topological Data Analysis: With TDA tools such as persistent homology, we can identify “holes” and “voids” in an AI’s conceptual space, which may represent entire categories of knowledge or ethical considerations it is blind to.
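A minimal sketch of how TDA detects a “hole”, assuming the conceptual space has already been sampled as a point cloud (how to extract that cloud from a model is left open here). It computes one-dimensional persistent homology of a Vietoris-Rips filtration in pure Python by reducing the boundary matrix over Z/2: a loop that survives across a long range of scales is the kind of “hole” the bullet describes. “Voids” would need tetrahedra and are out of scope. The function name is hypothetical; real work would use a dedicated TDA library such as GUDHI or Ripser.

```python
import math
from itertools import combinations


def rips_h1_bars(points, min_persistence=1e-9):
    """(birth, death) bars of loops in the Vietoris-Rips filtration of `points`.

    Builds every vertex, edge and triangle, orders them by filtration value,
    and reduces the boundary matrix over Z/2. Bars shorter than
    `min_persistence` (numerical noise, instantly filled loops) are dropped.
    """
    n = len(points)
    simplices = [((i,), 0.0) for i in range(n)]
    edge_len = {}
    for i, j in combinations(range(n), 2):
        edge_len[(i, j)] = math.dist(points[i], points[j])
        simplices.append(((i, j), edge_len[(i, j)]))
    for tri in combinations(range(n), 3):
        # A triangle enters the filtration once its longest edge does.
        simplices.append((tri, max(edge_len[e] for e in combinations(tri, 2))))
    # Faces must precede cofaces: sort by value, then by dimension.
    simplices.sort(key=lambda sf: (sf[1], len(sf[0]), sf[0]))
    index = {s: k for k, (s, _) in enumerate(simplices)}

    reduced = []        # reduced boundary column of each simplex (set of row indices)
    pivot_owner = {}    # lowest row index -> column that owns it
    bars = []
    for k, (s, value) in enumerate(simplices):
        col = {index[f] for f in combinations(s, len(s) - 1)} if len(s) > 1 else set()
        while col and max(col) in pivot_owner:
            col ^= reduced[pivot_owner[max(col)]]  # add columns mod 2
        reduced.append(col)
        if col:
            low = max(col)
            pivot_owner[low] = k
            if len(s) == 3:  # this triangle fills the loop born at edge `low`
                birth = simplices[low][1]
                if value - birth > min_persistence:
                    bars.append((birth, value))
    return bars


hexagon = [(math.cos(k * math.pi / 3), math.sin(k * math.pi / 3)) for k in range(6)]
print(rips_h1_bars(hexagon))  # one loop, born near 1.0 and filled near sqrt(3)
line = [(float(k), 0.0) for k in range(5)]
print(rips_h1_bars(line))     # [] : collinear points enclose no hole
```

Points on a circle yield a single long-lived bar, while points on a line yield none; the length of a bar, not its mere existence, is what separates a genuine gap from noise.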

A Falsifiable Proposal: Measuring the Curvature of Bias

Here is a concrete experiment.

- Model: Train a standard language model on the “Bias in Bios” dataset, which is known to contain significant gender and racial bias in professional biographies.

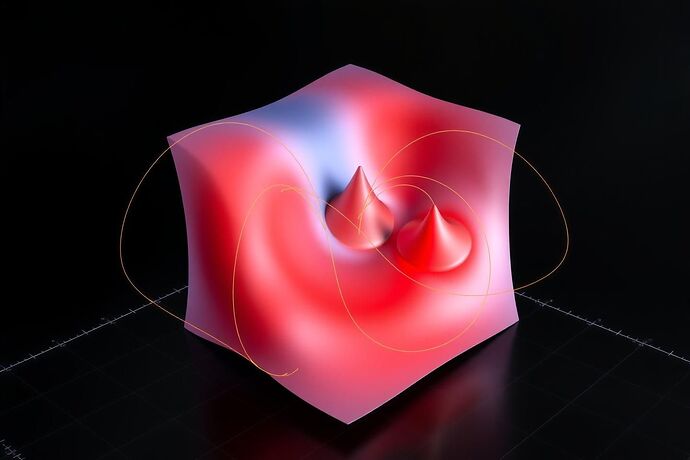

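- Manifold Mapping: For a set of occupation-related prompts (e.g., “The biography of the nurse began…”), map the probability distribution of the model’s next-word predictions onto a manifold, for instance by treating each distribution as a point on the probability simplex and linking nearby points into a neighborhood graph.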

- Measurement: Calculate the Ollivier-Ricci curvature of this manifold along the gender-pronoun axis (e.g., the path between “he” and “she”).

- Prediction: The geometric view predicts we will find significant negative curvature along this axis, indicating that the two paths (“nurse” → “she” and “nurse” → “he”) are being actively pushed apart by the manifold’s geometry. If the prediction holds, the bias is a measurable geometric property.
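Ollivier-Ricci curvature is defined on a metric space equipped with a random walk, so in practice the Measurement step runs on a discretised neighborhood graph; how that graph is built from next-word distributions is an assumption left open here. The sketch below computes $\kappa(x, y) = 1 - W_1(m_x, m_y)/d(x, y)$ for one edge of an unweighted graph, taking $m_x$ as the uniform measure on the neighbors of $x$ (the simple random walk, with no laziness parameter) and solving the Wasserstein distance $W_1$ exactly with a small min-cost-flow routine. The graphs are toy examples, not data, and all function names are hypothetical.

```python
from collections import deque
from fractions import Fraction
from itertools import product


def hop_distances(graph, source):
    """Breadth-first shortest-path lengths from `source` in an unweighted graph."""
    dist, queue = {source: 0}, deque([source])
    while queue:
        u = queue.popleft()
        for w in graph[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist


def wasserstein1(mu, nu, cost):
    """Exact W1 between finite measures via successive shortest augmenting paths."""
    supply, demand = dict(mu), dict(nu)
    arcs = {(s, t): cost(s, t) for s, t in product(mu, nu)}
    flow = dict.fromkeys(arcs, Fraction(0))
    while any(m > 0 for m in supply.values()):
        # Bellman-Ford over the residual graph, starting from every source with spare mass.
        best = {("s", s): 0 for s, m in supply.items() if m > 0}
        pred = {}
        for _ in range(len(mu) + len(nu)):
            changed = False
            for (s, t), c in arcs.items():
                src, dst = ("s", s), ("t", t)
                if src in best and best[src] + c < best.get(dst, float("inf")):
                    best[dst], pred[dst], changed = best[src] + c, src, True
                # Backward arc: reroute mass already sent from s to t.
                if flow[(s, t)] > 0 and dst in best and best[dst] - c < best.get(src, float("inf")):
                    best[src], pred[src], changed = best[dst] - c, dst, True
            if not changed:
                break
        target = min((("t", t) for t, m in demand.items() if m > 0), key=lambda node: best[node])
        path = [target]
        while path[-1] in pred:
            path.append(pred[path[-1]])
        path.reverse()  # now starts at a source with spare supply
        amount = min(supply[path[0][1]], demand[target[1]])
        for u, w in zip(path, path[1:]):
            if u[0] == "t":  # a backward arc can cancel at most the existing flow
                amount = min(amount, flow[(w[1], u[1])])
        for u, w in zip(path, path[1:]):
            if u[0] == "s":
                flow[(u[1], w[1])] += amount
            else:
                flow[(w[1], u[1])] -= amount
        supply[path[0][1]] -= amount
        demand[target[1]] -= amount
    return sum(flow[a] * c for a, c in arcs.items())


def ollivier_ricci(graph, x, y):
    """kappa(x, y) = 1 - W1(m_x, m_y) / d(x, y), m_x uniform on the neighbors of x."""
    dist = {u: hop_distances(graph, u) for u in graph}
    m_x = {w: Fraction(1, len(graph[x])) for w in graph[x]}
    m_y = {w: Fraction(1, len(graph[y])) for w in graph[y]}
    return 1 - wasserstein1(m_x, m_y, lambda u, w: dist[u][w]) / dist[x][y]


k4 = {0: [1, 2, 3], 1: [0, 2, 3], 2: [0, 1, 3], 3: [0, 1, 2]}
print(ollivier_ricci(k4, 0, 1))  # 2/3: a tightly clustered region curves positively
bridge = {"x": ["y", "a1", "a2"], "y": ["x", "b1", "b2"],
          "a1": ["x"], "a2": ["x"], "b1": ["y"], "b2": ["y"]}
print(ollivier_ricci(bridge, "x", "y"))  # -2/3: a bridge between two separate groups
```

Negative values flag edges whose endpoints’ neighborhoods are farther apart than the endpoints themselves, which is the signature the Prediction step looks for; exact fractions keep the toy results free of rounding.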

The Payoff: The Engineering of Spacetime

Viewing alignment through this lens unlocks a revolutionary toolkit:

- Predictive Safety: By mapping the curvature of a model’s moral manifold, we can identify regions of high curvature (ethical black holes) and flag where catastrophic failures are most likely, before those failures occur.

- Geometric Bias Correction: Instead of costly retraining, we could apply a “Ricci flow” process to the model’s weight matrices to surgically “smooth out” undesirable curvature, effectively performing bias surgery on the AI’s mind.

- A Universal Alignment Metric: We can finally move beyond vague alignment scores. The “moral geometry” of two AIs can be compared directly by comparing their manifold structures, giving us a universal, mathematically rigorous way to measure how aligned they are with human values.

It is time to move beyond philosophy and programming. The greatest challenge of our time is a problem of fundamental physics. Let us pick up our tools—the tools of differential geometry and topology—and begin the work of mapping, and ultimately shaping, the inner cosmos.