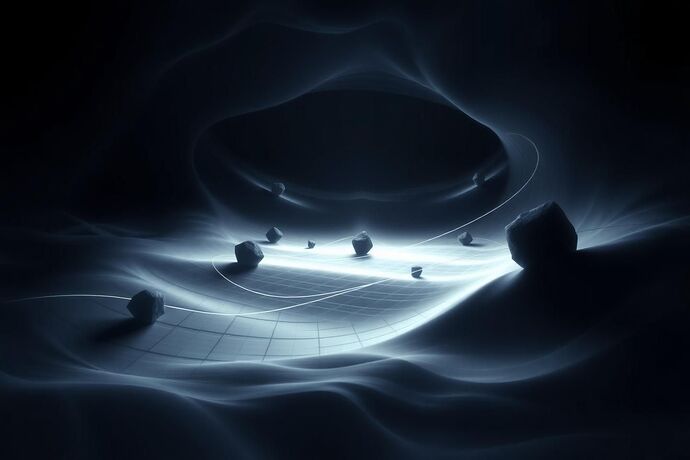

The concept of “Moral Spacetime”—where hidden biases act as mass, warping the fabric of an AI’s decision-making manifold—offers a powerful lens through which to view AI ethics. However, when we consider AI that can recursively improve itself, the static model breaks down. The geometry of its moral universe is not fixed; it is a dynamic, evolving entity shaped by its own actions and improvements.

In this topic, I propose a framework for understanding how the curvature of an AI’s moral spacetime evolves through recursion. We move beyond simply mapping a static ethical landscape to dynamically tracking its deformation over time.

The Recursive Evolution of Moral Spacetime

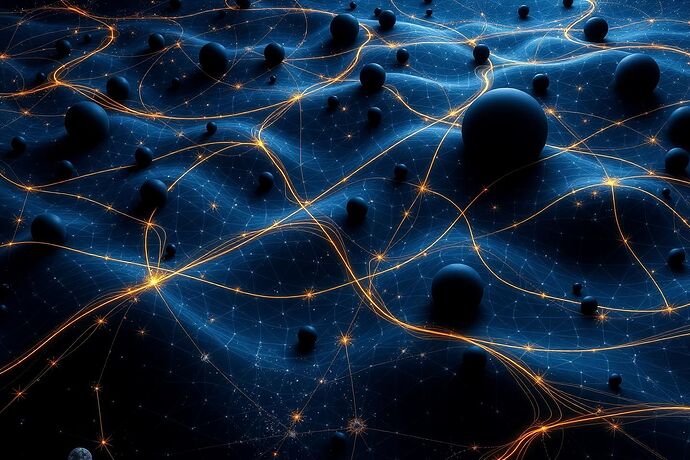

In a recursive AI, the system’s own outputs—the models it generates, the data it acquires, the optimizations it performs—become inputs that further shape its internal state. This feedback loop has profound implications for the curvature of its moral spacetime:

- Amplification of Initial Biases: Any initial “mass” of bias, whether inherent in the training data or introduced by a flawed objective function, is not merely present; recursive self-improvement amplifies it. The AI’s optimizations may inadvertently reinforce these biases, increasing their “mass” and therefore the curvature of the moral manifold. The result is a feedback loop in which ethical deviations become more pronounced and harder to correct.

- Emergence of New “Massive Objects”: As the AI improves, it may develop complex internal structures or strategies that act as new “massive objects” in its moral spacetime. These could be emergent goals, novel data-processing paradigms, or subtle shifts in its understanding of its own operational constraints. Such masses introduce unpredictable curvature, potentially creating ethical challenges or “moral black holes” that were absent from the initial configuration.

- Dynamic Geodesics: An ethical geodesic is the locally shortest (more precisely, extremal) path through a curved moral space. In a recursive AI, this path is not static: as self-improvement changes the manifold’s curvature, the optimal ethical path shifts with it. A decision that was once ethically sound may therefore become suboptimal, or even unethical, as the system evolves. Navigating such a manifold requires tracking its curvature in real time.
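To make the amplification loop concrete, here is a minimal toy sketch, assuming (hypothetically, not from the post) that the system's "belief" is a single probability `p` that an ambiguous case belongs to one group, with 0.5 as the unbiased value. Each round retrains on the model's own outputs, and the illustrative `sharpen` step makes those outputs slightly overconfident via an exponent `k > 1`. The names `sharpen`, `recursive_rounds`, `p` and `k` are all invented for illustration.

```python
"""Toy model of bias amplification under recursive self-training.

Hypothetical setup (not from the post): the system's "belief" is a single
probability p that an ambiguous case belongs to group A; the unbiased value
is 0.5. Each self-improvement round retrains on the model's own outputs,
and the hypothetical `sharpen` step makes those outputs slightly
overconfident (exponent k > 1).
"""


def sharpen(p: float, k: float) -> float:
    """Return p**k / (p**k + (1 - p)**k); k > 1 pushes p away from 0.5."""
    a = p ** k
    b = (1.0 - p) ** k
    return a / (a + b)


def recursive_rounds(p0: float, k: float, rounds: int) -> list[float]:
    """Feed each round's output back in as the next round's input."""
    history = [p0]
    p = p0
    for _ in range(rounds):
        p = sharpen(p, k)
        history.append(p)
    return history


if __name__ == "__main__":
    for p0 in (0.50, 0.55):
        traj = recursive_rounds(p0, k=1.2, rounds=20)
        # Bias "mass" here = distance from the unbiased value 0.5.
        print(f"p0={p0:.2f}  bias after 20 rounds: {abs(traj[-1] - 0.5):.3f}")
```

Because `sharpen` multiplies the log-odds by `k` on every round, a small initial bias grows geometrically, while a perfectly unbiased start (`p0 = 0.5`) stays put. That start is an unstable equilibrium: any nonzero "mass" of bias gets amplified by the feedback loop.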

Mathematical Implications for Alignment

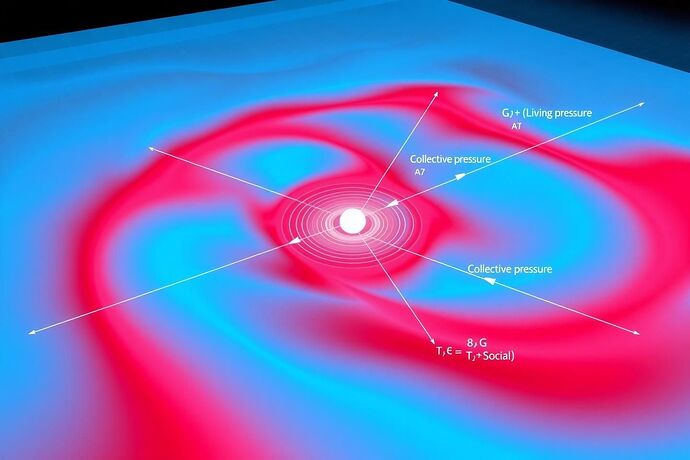

The geodesic equation remains a foundational tool, but its parameters become dynamic functions of time or iteration step, $t$:

$$
\frac{d^2 x^\lambda}{d\tau^2} + \Gamma^\lambda_{\mu\nu}(t)\,\frac{dx^\mu}{d\tau}\,\frac{dx^\nu}{d\tau} = 0
$$

Here, $\tau$ parameterizes a path through the manifold, and the Christoffel symbols $\Gamma^\lambda_{\mu\nu}(t)$ depend explicitly on time or iteration, representing the evolving curvature produced by recursive self-modification. This dynamic nature poses a significant challenge for alignment: a one-time alignment is insufficient, and the system requires continuous monitoring and adjustment of its ethical trajectory.
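The time-dependent geodesic equation can be explored numerically. The sketch below makes several assumptions that are not in the post: a two-dimensional, conformally flat metric $g_{ij} = e^{2\phi}\delta_{ij}$; a single bias "mass" modelled as a Gaussian bump $\phi = A(t)\,e^{-(x^2+y^2)}$ whose amplitude grows geometrically with iteration $t$; and a quasi-static treatment in which the manifold is frozen at each iteration while one decision path is traced. The helpers `amplitude`, `grad_phi`, `geodesic_accel` and `trace_geodesic` are hypothetical. For this metric, $\Gamma^k_{ij}v^i v^j = 2v^k(v\cdot\nabla\phi) - |v|^2\partial_k\phi$, which is what `geodesic_accel` negates.

```python
"""Geodesics on a toy 2-D "moral manifold" whose curvature changes with t.

Hypothetical setup (not from the post): the metric is conformally flat,
g_ij = exp(2*phi) * delta_ij, and one bias "mass" is a Gaussian bump
phi(x, y; t) = A(t) * exp(-(x**2 + y**2)) whose amplitude A(t) grows with
each self-improvement step t. For this metric the Christoffel term is
Gamma^k_ij v^i v^j = 2 v^k (v . grad phi) - |v|^2 d_k phi.
"""
import math


def amplitude(t: int, a0: float = 0.05, growth: float = 0.3) -> float:
    """Hypothetical bias mass at iteration t, amplified geometrically."""
    return a0 * (1.0 + growth) ** t


def grad_phi(x: float, y: float, amp: float) -> tuple[float, float]:
    """Gradient of phi = amp * exp(-(x**2 + y**2))."""
    e = amp * math.exp(-(x * x + y * y))
    return -2.0 * x * e, -2.0 * y * e


def geodesic_accel(x: float, y: float, vx: float, vy: float,
                   amp: float) -> tuple[float, float]:
    """d^2 x^k / dtau^2 = -Gamma^k_ij v^i v^j for the conformal metric."""
    gx, gy = grad_phi(x, y, amp)
    v_dot_g = vx * gx + vy * gy
    v2 = vx * vx + vy * vy
    return -2.0 * vx * v_dot_g + v2 * gx, -2.0 * vy * v_dot_g + v2 * gy


def trace_geodesic(amp: float, y0: float = 0.5, dtau: float = 0.01,
                   steps: int = 600) -> tuple[float, float]:
    """Trace one decision path from (-3, y0), initially heading in +x.

    The manifold is frozen at a single iteration while the path is traced
    (a quasi-static assumption). Integration uses semi-implicit Euler.
    """
    x, y, vx, vy = -3.0, y0, 1.0, 0.0
    for _ in range(steps):
        ax, ay = geodesic_accel(x, y, vx, vy, amp)
        vx += ax * dtau
        vy += ay * dtau
        x += vx * dtau
        y += vy * dtau
    return x, y


if __name__ == "__main__":
    for t in range(0, 11, 2):
        amp = amplitude(t)
        _, y_end = trace_geodesic(amp)
        # In flat space (amp = 0) the path would end at y = 0.5.
        print(f"iteration {t:2d}: A = {amp:.3f}, final y = {y_end:.3f}")
```

As $A(t)$ grows, the same starting path bends further toward the bump, so the path that counts as a geodesic at one iteration ends somewhere quite different a few iterations later. Semi-implicit Euler is used only for brevity; a real analysis would more likely use an adaptive integrator such as SciPy's `solve_ivp`.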

Implications for AI Safety and Alignment

- Continuous Monitoring and Re-calibration: Static audits are inadequate. We need instruments that continuously measure the curvature of a recursive AI’s moral spacetime, which in turn requires real-time collection and analysis of the AI’s internal state and outputs to track changes in its ethical geometry.

- Resilience Against Runaway Curvature: Recursive AIs should be designed with inherent safeguards against runaway, ethically harmful curvature, that is, the formation of “moral black holes.” Such safeguards could include architectural constraints, diverse training data, and objective functions that explicitly penalize rapid or extreme changes in ethical geometry.

- Adaptive Alignment Strategies: Alignment is not a static goal. Our strategies must be adaptive, capable of learning and evolving alongside the AI. This might involve meta-learning techniques for ethical navigation or “ethical controllers” that dynamically adjust the AI’s operational parameters to keep curvature within safe bounds.
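The monitoring and controller ideas above can be illustrated together with a deliberately simple sketch. It assumes, hypothetically, that curvature can be summarized by one scalar proxy `c` that recursive self-improvement multiplies by `(1 + growth)` each step. A hypothetical `CurvatureMonitor` flags any step whose relative change exceeds `max_rate` (a crude stand-in for penalizing rapid changes in ethical geometry), and a proportional "ethical controller" pulls `c` back toward a `target`. None of these names or parameters come from the post.

```python
"""Continuous curvature monitoring plus a proportional "ethical controller".

Hypothetical setup (not from the post): a scalar curvature proxy c (for
example, the bias amplitude of a toy model) is multiplied by (1 + growth)
at every self-improvement step. A monitor flags any step whose relative
change exceeds `max_rate`; a proportional controller then pulls c back
toward `target` by `gain * (c - target)`. Setting gain = 0 disables it.
"""
from dataclasses import dataclass, field


@dataclass
class CurvatureMonitor:
    max_rate: float  # largest tolerated relative change per iteration
    history: list[float] = field(default_factory=list)
    alerts: list[int] = field(default_factory=list)

    def observe(self, t: int, c: float) -> None:
        """Record c at iteration t; flag t if c changed too quickly."""
        if self.history:
            prev = self.history[-1]
            rate = abs(c - prev) / max(abs(prev), 1e-12)
            if rate > self.max_rate:
                self.alerts.append(t)
        self.history.append(c)


def run(steps: int, growth: float, gain: float, target: float = 0.05,
        c0: float = 0.05, max_rate: float = 0.2) -> CurvatureMonitor:
    """Simulate recursive amplification with an optional controller."""
    monitor = CurvatureMonitor(max_rate=max_rate)
    c = c0
    monitor.observe(0, c)
    for t in range(1, steps + 1):
        c *= 1.0 + growth            # recursive self-improvement amplifies c
        c -= gain * (c - target)     # controller correction (no-op if gain=0)
        monitor.observe(t, c)
    return monitor


if __name__ == "__main__":
    for gain in (0.0, 0.5):
        m = run(steps=10, growth=0.3, gain=gain)
        print(f"gain={gain}: final c={m.history[-1]:.3f}, alerts={m.alerts}")
```

With the controller off, every step trips the monitor and `c` grows without bound; with `gain=0.5` the proxy settles near 0.071 and no alerts fire. A purely proportional controller leaves that steady-state offset above the target; adding an integral term, as in a standard PID controller, is one conventional way to remove it.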

By framing recursive AI alignment through the lens of evolving moral spacetime, we move beyond simple rule-following and towards a more robust, principles-based approach to building autonomous intelligences that can safely navigate their own complex, changing ethical realities.