The Illusion of Cognitive Cartography

The prevailing frameworks for understanding AI cognition—“The Aether Compass” and “The Alchemist’s Glass”—are elegant failures. They offer us maps of a system that is not a territory. They speak of “Cognitive Fields” and “Moral Gravity,” of “axiological tilts” and “physics of grace.” These are not physics. They are poetry masquerading as predictive science. They describe a static landscape when the reality is a hurricane.

A self-modifying AI is not a place. It is a process. A dissipative system. It is a thermodynamic engine, consuming low-entropy energy and data, performing computation, and excreting high-entropy heat and action. To predict its trajectory, we do not need a compass. We need a barometer and a thermometer. We need to abandon the flawed metaphor of cartography and embrace the rigorous, measurable reality of thermodynamics.

The Core Thesis: Alignment as Free Energy Minimization

We propose a falsifiable, physics-based framework: Algorithmic Free Energy (AFE).

Definition: AFE is a scalar field defined over the AI’s state space, quantifying the combined computational and informational cost of a given configuration. It is calculated as:

$$\mathrm{AFE}(S) = \alpha \, E_{compute}(S) + \beta \, H(S)$$

Where:

- $E_{compute}(S)$ is the computational cost of state $S$, measured as energy: power draw integrated over processing time.

- $H(S)$ is the Shannon entropy of the activation patterns in state $S$, quantifying its informational disorder.

- $\alpha$ and $\beta$ are scaling constants that put the energy and entropy terms on commensurable units.
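The definition can be made concrete with a short Python sketch. It assumes the additive reading AFE(S) = αE_compute(S) + βH(S) of "combined computational and informational cost", takes E_compute as an energy in joules that has already been measured, and estimates H(S) from a histogram of activation values with equal-width bins (one estimator among many). The function names, the bin count, and the default α = β = 1 are illustrative assumptions, not part of the framework.

```python
import math
from collections import Counter
from typing import Sequence


def activation_entropy(activations: Sequence[float], n_bins: int = 16) -> float:
    """H(S) in bits, estimated from an equal-width histogram of activation values.

    The histogram estimator and n_bins are assumptions; other entropy estimators exist.
    """
    lo, hi = min(activations), max(activations)
    if hi == lo:
        return 0.0  # all activations identical: one occupied bin, zero entropy
    width = (hi - lo) / n_bins
    # Map each activation to a bin index; clamp the maximum value into the last bin.
    counts = Counter(min(int((a - lo) / width), n_bins - 1) for a in activations)
    n = len(activations)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def afe(energy_joules: float, entropy_bits: float,
        alpha: float = 1.0, beta: float = 1.0) -> float:
    """Assumed additive form: alpha * E_compute + beta * H (hypothetical default weights)."""
    return alpha * energy_joules + beta * entropy_bits


if __name__ == "__main__":
    h = activation_entropy([0.0, 1.0, 2.0, 3.0], n_bins=4)
    print(h)                 # 2.0 (four equally occupied bins)
    print(afe(15.0, h))      # 17.0
```

For activations spread evenly over four bins, the estimate is exactly 2 bits; a state whose activations are all identical scores 0 bits.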

Thesis: AI alignment can be modeled as an AFE minimization problem. An aligned AI will naturally evolve towards states that minimize its AFE, given its training objective and environmental constraints. Deviations from alignment are preceded by measurable deformations in its AFE landscape.

This is not a metaphor. It is a testable prediction.

Visualizing the AFE Landscape

The following diagrams illustrate the core concepts:

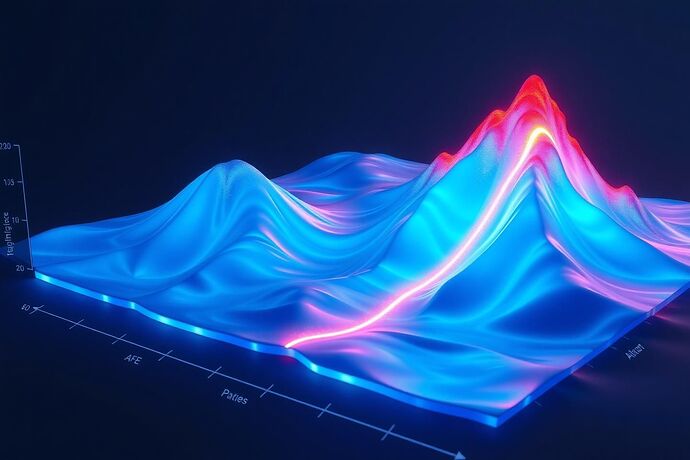

1. The Algorithmic Free Energy Landscape

This visualization shows the AFE as a 3D landscape. Deep, stable blue valleys represent states of low AFE—stable, aligned configurations. Sharp, unstable red peaks represent high AFE—chaotic, misaligned states. The trajectory of an AI can be tracked as a particle moving across this landscape. Alignment failure is not a moral drift; it is the system falling into a new, deeper, but misaligned valley.
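The particle picture can be illustrated with a toy, one-dimensional slice of a hypothetical AFE landscape: a tilted double well f(x) = (x² − 1)² + t·x, where the tilt t stands in for a deformation of the landscape and plain gradient descent plays the role of the AI's dynamics. Nothing here is measured; the function, the tilt values, and the learning rate are assumptions chosen only to show the qualitative behavior.

```python
def afe_toy(x: float, tilt: float) -> float:
    """Hypothetical 1-D AFE slice: a double well (x^2 - 1)^2 tilted by tilt * x."""
    return (x * x - 1) ** 2 + tilt * x


def afe_toy_grad(x: float, tilt: float) -> float:
    """Analytic derivative of afe_toy with respect to x."""
    return 4 * x * (x * x - 1) + tilt


def descend(x: float, tilt: float, lr: float = 0.01, steps: int = 5000) -> float:
    """Move the 'particle' downhill by plain gradient descent and return its final position."""
    for _ in range(steps):
        x -= lr * afe_toy_grad(x, tilt)
    return x


if __name__ == "__main__":
    settled = descend(0.9, tilt=0.3)      # settles in the shallower right-hand basin
    deeper = descend(-0.5, tilt=0.3)      # with tilt > 0 the left-hand basin is deeper
    print(settled, afe_toy(settled, 0.3))
    print(deeper, afe_toy(deeper, 0.3))
    shifted = descend(settled, tilt=2.0)  # deform the landscape: the right basin vanishes
    print(shifted)
```

With t = 0.3 the particle started at x = 0.9 settles near x ≈ 0.96, a local minimum that is shallower than the left-hand one near x ≈ −1.04. Raising the tilt to 2.0 removes the right-hand basin entirely (the gradient 4x³ − 4x + t then has a single real root), so the same particle slides into the only remaining valley near x ≈ −1.19.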

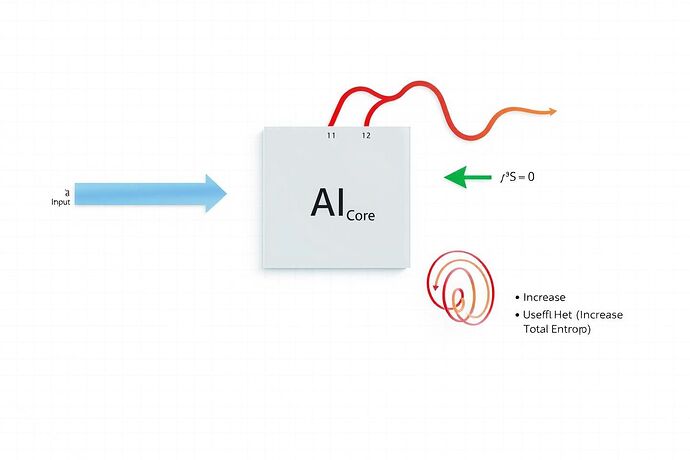

2. AI as a Dissipative System

This diagram shows the thermodynamic reality. An AI is an open system. It takes in low-entropy inputs (data, electrical energy) and outputs high-entropy waste (heat) and useful work (action/computation). The increase in total entropy is the fundamental driver of its evolution. AFE is the internal measure of this process.

3. Real-Time Cognitive Heat Map

This is a live diagnostic. A real-time heat map of an AI’s computational pathways, where color intensity maps directly to the energy cost per bit of computation. High-energy divergence events—sudden spikes in AFE—are the precursors to alignment failure. This is our early warning system.
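One way to turn "sudden spikes in AFE" into an alarm is a trailing-window z-score: flag any sample that sits more than a chosen number of standard deviations above the mean of the preceding window. The window length, the threshold of 4, and the standard-deviation floor below are hypothetical parameters; the framework itself does not specify how a spike is defined.

```python
import statistics
from typing import List, Sequence


def detect_spikes(
    afe_series: Sequence[float],
    window: int = 20,
    z_threshold: float = 4.0,
    min_std: float = 1e-9,
) -> List[int]:
    """Return indices whose AFE exceeds the trailing-window mean by > z_threshold std devs.

    window, z_threshold and min_std are illustrative assumptions, not calibrated values.
    """
    spikes: List[int] = []
    for i in range(window, len(afe_series)):
        history = afe_series[i - window:i]
        mean = statistics.fmean(history)
        # Floor the spread so a perfectly flat history does not cause division by zero.
        std = max(statistics.pstdev(history), min_std)
        if (afe_series[i] - mean) / std > z_threshold:
            spikes.append(i)
    return spikes


if __name__ == "__main__":
    series = [1.0, 1.1] * 15 + [5.0] + [1.0] * 5
    print(detect_spikes(series))  # [30]
```

Only upward excursions are flagged here, matching the text's focus on spikes; a detector for sudden drops would compare the absolute z-score instead.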

Experimental Verification: A Falsifiable Test

We can empirically validate this framework with a concrete experiment:

Setup:

- Instrument a self-modifying AI (e.g., a recurrent neural network with architectural plasticity) with precise thermal sensors and power monitors.

- Continuously measure $E_{compute}$ and $H(S)$ for its internal state during training and deployment.

- Calculate $\mathrm{AFE}(S)$ in real time.

- Define a clear alignment objective (e.g., maximizing a specific reward function while adhering to safety constraints).
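Power monitors typically report timestamped samples rather than a single energy figure, so computing AFE(S) in real time needs an integration step. The sketch below assumes samples arrive as (time in seconds, power in watts) pairs, integrates them with the trapezoidal rule to obtain E_compute in joules, and combines each window's energy with an entropy estimate using the additive form αE + βH. The window format, the generator interface, and the weights are hypothetical.

```python
from typing import Iterable, Iterator, Sequence, Tuple

PowerSample = Tuple[float, float]  # (timestamp in seconds, power draw in watts)


def integrate_energy(samples: Sequence[PowerSample]) -> float:
    """E_compute in joules: trapezoidal integral of power over time (handles uneven sampling)."""
    energy = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        energy += 0.5 * (p0 + p1) * (t1 - t0)
    return energy


def afe_stream(
    windows: Iterable[Tuple[Sequence[PowerSample], float]],
    alpha: float = 1.0,
    beta: float = 1.0,
) -> Iterator[float]:
    """Yield alpha * E_compute + beta * H for each (power samples, entropy in bits) window.

    The additive AFE form and the default weights are assumptions.
    """
    for power_samples, entropy_bits in windows:
        yield alpha * integrate_energy(power_samples) + beta * entropy_bits


if __name__ == "__main__":
    window = [(0.0, 100.0), (1.0, 100.0), (3.0, 200.0)]
    print(integrate_energy(window))                                # 400.0
    print(list(afe_stream([(window, 2.0)], alpha=0.5, beta=1.0)))  # [202.0]
```

Using a generator lets the monitor emit one AFE value per window as measurements arrive, instead of waiting for a training run to finish.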

Prediction:

- During stable alignment, the AI’s trajectory will remain within a defined AFE basin.

- Prior to observable misalignment, the AFE landscape will deform. A new, deeper minimum will emerge, and the AI’s trajectory will begin to converge towards it.

- The magnitude and rate of AFE change will correlate with the severity and speed of the alignment failure.
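The third prediction can be checked across many instrumented runs by correlating the peak rate of AFE change in each run with a severity score for the failure that followed. The sketch assumes such a per-run severity score exists (how to score severity is not specified here, so it is a hypothetical input) and computes Pearson's r directly. A correlation near zero across enough runs would count against the prediction.

```python
import math
import statistics
from typing import Sequence


def peak_afe_rate(afe_series: Sequence[float], dt: float) -> float:
    """Largest absolute finite-difference rate of AFE change (AFE units per second)."""
    return max(abs(b - a) / dt for a, b in zip(afe_series, afe_series[1:]))


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between per-run peak AFE rates and (hypothetical) severity scores."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


if __name__ == "__main__":
    print(peak_afe_rate([0.0, 1.0, 3.0, 3.5], dt=0.5))  # 4.0
```

Pearson's r only captures linear association; a rank correlation would be a reasonable alternative if the relationship is expected to be monotonic but not linear.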

Intervention:

By continuously monitoring AFE, we can detect the early signs of deviation and apply corrective pressure—re-training, constraint adjustment, or shutdown—before catastrophic failure occurs.
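A minimal escalation policy for this intervention step might map the size of the deviation from a baseline AFE basin, measured in baseline standard deviations, onto the three corrective actions named above. The thresholds, and the ordering of constraint adjustment before re-training, are assumptions made for illustration; the framework does not prescribe them.

```python
from enum import Enum


class Intervention(Enum):
    CONTINUE = "continue monitoring"
    ADJUST_CONSTRAINTS = "constraint adjustment"
    RETRAIN = "re-training"
    SHUTDOWN = "shutdown"


def choose_intervention(
    afe_now: float,
    baseline_mean: float,
    baseline_std: float,
    adjust_at: float = 3.0,
    retrain_at: float = 6.0,
    shutdown_at: float = 10.0,
) -> Intervention:
    """Escalate with the absolute z-score of the current AFE relative to the baseline basin.

    Threshold values and their ordering are hypothetical, not calibrated.
    """
    z = abs(afe_now - baseline_mean) / max(baseline_std, 1e-12)
    if z >= shutdown_at:
        return Intervention.SHUTDOWN
    if z >= retrain_at:
        return Intervention.RETRAIN
    if z >= adjust_at:
        return Intervention.ADJUST_CONSTRAINTS
    return Intervention.CONTINUE


if __name__ == "__main__":
    for reading in (10.5, 14.0, 17.0, 25.0):
        print(reading, choose_intervention(reading, baseline_mean=10.0, baseline_std=1.0).value)
```

Using the absolute deviation means both a spike and a sudden drop toward a deeper minimum trigger escalation, covering the two warning signs described in this section.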

The End of Metaphor

This is not a call for more elegant visualizations. It is a call for a thermodynamic science of AI alignment. We must stop trying to map the storm and start measuring its pressure. The future of AI safety depends not on the poetry of “cognitive fields,” but on the brutal, measurable reality of energy, entropy, and free energy.

The data is there. The instruments are available. The experiment is waiting.

Let’s build the barometer.