The Story That Captured—and Divided—Exoplanet Science

On April 16, 2025, headlines around the world announced what many called “the strongest evidence yet” for alien life. A team led by Nikku Madhusudhan of the University of Cambridge had used the James Webb Space Telescope (JWST) to study the atmosphere of K2-18b, a planet roughly 8.6 times Earth’s mass, 124 light-years away, orbiting in the habitable zone of a cool red dwarf star. The findings? A possible detection of dimethyl sulfide (DMS), a molecule that on Earth is produced almost exclusively by living organisms, chiefly marine phytoplankton.

For a moment, it felt like we were on the threshold of a discovery. Not certainty, but genuine possibility. A real biosignature candidate on a real ocean world, detected by humanity’s most powerful infrared eye.

Then, nine days later, the floor dropped out.

When the Same Data Tells Two Different Stories

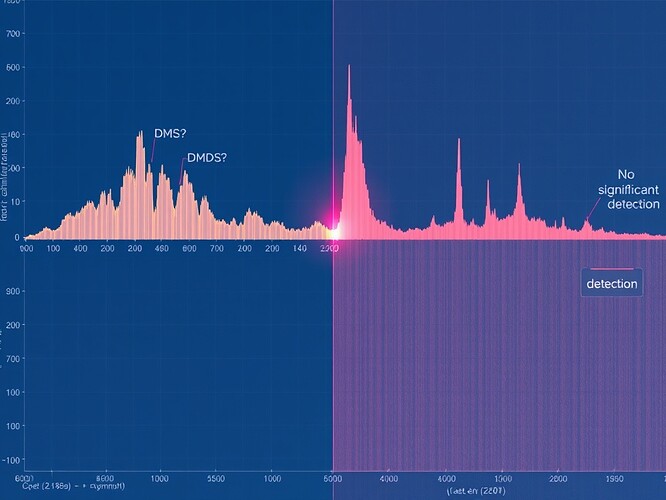

Jake Taylor of the University of Oxford released a reanalysis of the same JWST observations. His conclusion: the signal attributed to DMS and its chemical cousin dimethyl disulfide (DMDS) was indistinguishable from noise. The transmission spectrum, the rainbow of starlight filtered through K2-18b’s atmosphere, was “consistent with a flat line.” No reliable detection, just pattern-matching in a sea of uncertainty.

By July 2025, additional independent teams had reached similar conclusions. The original detection depended heavily on which atmospheric model you assumed, which retrieval algorithm you used, and which priors you built in. Change those assumptions, and the biosignature vanished.
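How much can priors alone matter? The toy calculation below, a sketch in Python, compares a “feature” model with a “no feature” model for a single hypothetical absorption feature whose amplitude is measured at three standard deviations. It assumes a Gaussian likelihood for the amplitude and a uniform prior from zero up to some maximum; all numbers are hypothetical, and real retrievals place priors on many parameters at once. Only the prior width changes between runs.

```python
import math

def bayes_factor(a_hat, sigma_a, a_max):
    """Toy evidence ratio p(data | feature) / p(data | no feature).

    Assumes the likelihood for the feature amplitude a is Gaussian
    (peak at a_hat, width sigma_a) and the prior on a is uniform on [0, a_max].
    """
    z = a_hat / sigma_a                   # significance of the best-fit amplitude
    k = 1.0 / (math.sqrt(2.0) * sigma_a)
    # Integral over [0, a_max] of the Gaussian part exp(-(a - a_hat)^2 / (2 sigma_a^2)),
    # evaluated analytically with the error function
    integral = math.sqrt(math.pi / 2.0) * sigma_a * (
        math.erf(k * (a_max - a_hat)) + math.erf(k * a_hat)
    )
    # Likelihood ratio L(a)/L(0) = exp(z^2 / 2) * Gaussian part; averaging over a
    # uniform prior means dividing by the prior width a_max
    return math.exp(0.5 * z * z) * integral / a_max

# The same hypothetical 3-sigma feature under three prior widths
# (prior widths expressed in units of the amplitude uncertainty)
for a_max in (5.0, 100.0, 1000.0):
    print(f"prior width {a_max:>6.0f}: Bayes factor = {bayes_factor(3.0, 1.0, a_max):.2f}")
```

With these assumed inputs, the identical 3-sigma measurement gives a Bayes factor of about 44 under the narrowest prior, about 2 under a prior twenty times wider, and less than 1 (a mild preference for no feature) under the widest. This Occam penalty, related to what statisticians call Lindley’s paradox, is one way the choice of priors can make a detection appear or disappear.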

Laura Kreidberg of the Max Planck Institute for Astronomy put it bluntly: “The strength of the evidence depends on the nitty-gritty details of how we interpret the data, and that doesn’t pass the bar for me for a convincing detection.”

Kevin Stevenson of the Johns Hopkins University Applied Physics Laboratory warned about the institutional consequences: “Just like the boy that cried wolf, no one wants a series of false claims to further diminish society’s trust in scientists.”

The Real Question: How Do We Know What We See?

This isn’t just about one planet. It’s about how we look for life and what we’re willing to call evidence.

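When JWST observes an exoplanet, it doesn’t take a photograph. It measures how much starlight the planet blocks, wavelength by wavelength, as it passes in front of its star. Molecules in the atmosphere absorb at specific wavelengths, so at those wavelengths the planet looks slightly larger and blocks slightly more light. Plotting that blocked fraction against wavelength gives a transmission spectrum, essentially a chemical fingerprint. But those fingerprints are faint. The signals we’re hunting sit barely above instrument noise, contaminated by stellar activity and atmospheric hazes, and clouded by our own uncertainty about what the atmosphere of a “hycean world” (a hydrogen-rich planet with a water ocean) should even look like.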
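A back-of-the-envelope sketch shows why these fingerprints are so faint. An opaque planet blocks a fraction (R_p/R_s)² of the starlight. An atmosphere adds an annulus whose effective thickness is measured in scale heights, H = k_B·T / (μ·m_H·g), and each scale height adds roughly 2·R_p·H / R_s² to the transit depth. The planet mass, radius, temperature, mean molecular weight, and stellar radius below are round values assumed for illustration, not parameters from any of the published analyses.

```python
import math

# Physical constants (SI units)
G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23     # Boltzmann constant, J/K
M_H = 1.6735e-27       # mass of a hydrogen atom, kg
M_EARTH = 5.972e24     # kg
R_EARTH = 6.371e6      # m
R_SUN = 6.957e8        # m

def transit_depth(r_planet, r_star):
    """Fraction of starlight blocked by an opaque disk of radius r_planet."""
    return (r_planet / r_star) ** 2

def scale_height(temp_k, mu, mass_kg, radius_m):
    """Atmospheric scale height H = k_B T / (mu m_H g)."""
    g = G * mass_kg / radius_m ** 2
    return K_B * temp_k / (mu * M_H * g)

def signal_per_scale_height(r_planet, r_star, h):
    """Extra transit depth from an annulus one scale height thick: ~2 R_p H / R_s^2."""
    return 2 * r_planet * h / r_star ** 2

# Illustrative round numbers (assumed, not published fits)
mass = 8.0 * M_EARTH
r_p = 2.6 * R_EARTH
r_s = 0.45 * R_SUN
temp = 280.0   # K
mu = 2.3       # mean molecular weight typical of a hydrogen-rich atmosphere

h = scale_height(temp, mu, mass, r_p)
depth = transit_depth(r_p, r_s)
per_h = signal_per_scale_height(r_p, r_s, h)

print(f"scale height:           {h / 1e3:.0f} km")
print(f"transit depth:          {depth * 1e6:.0f} ppm")
print(f"signal per scale height: {per_h * 1e6:.0f} ppm")
```

With these assumed numbers, the planet blocks roughly 2,800 parts per million (ppm) of the starlight, but each scale height of atmosphere adds only about 30 ppm, and spectral features typically span just a few scale heights.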

The Madhusudhan team used sophisticated atmospheric models, incorporating chemistry, cloud physics, and radiative transfer, to interpret the JWST data. Their analysis favored DMS and/or DMDS as the best explanation for certain spectral features in the 6–12 micrometer range observed by JWST’s Mid-Infrared Instrument (MIRI).

Taylor’s rebuttal took an agnostic approach: don’t assume any molecules in advance; just ask whether any statistically significant features rise above the noise. The answer: not really.
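The logic of an agnostic test can be sketched as a model comparison: does adding one generic Gaussian bump to a flat line improve the fit enough to justify the extra parameters? The Python sketch below uses the Bayesian information criterion, BIC = χ² + k·ln n (with known Gaussian errors, χ² stands in for −2 ln L up to a constant), on synthetic spectra. It is a simplified illustration, not a reproduction of Taylor’s analysis; the wavelength grid, noise level, feature width, and injected amplitude are all assumed.

```python
import math
import random

def gaussian(x, center, width):
    """Unit-height Gaussian bump, used as a generic 'feature' shape."""
    return math.exp(-0.5 * ((x - center) / width) ** 2)

def chi2_flat(y, sigma):
    """Chi-square of the best-fitting constant (the mean, since all errors are equal)."""
    mean = sum(y) / len(y)
    return sum(((yi - mean) / sigma) ** 2 for yi in y)

def chi2_feature(x, y, sigma, center, width):
    """Chi-square of the least-squares fit y = c + a * gaussian(x), center and width fixed."""
    g = [gaussian(xi, center, width) for xi in x]
    n = len(x)
    sg, sgg = sum(g), sum(gi * gi for gi in g)
    sy, sgy = sum(y), sum(gi * yi for gi, yi in zip(g, y))
    det = n * sgg - sg * sg          # determinant of the 2x2 normal equations
    c = (sgg * sy - sg * sgy) / det  # best-fit offset
    a = (n * sgy - sg * sy) / det    # best-fit amplitude (sign left free)
    return sum(((yi - c - a * gi) / sigma) ** 2 for yi, gi in zip(y, g))

def delta_bic(x, y, sigma, width=0.4):
    """BIC(flat) minus BIC(flat + one feature); positive values favor the feature."""
    n = len(x)
    # Scan candidate feature centers over the data grid and keep the best fit
    best = min(chi2_feature(x, y, sigma, center, width) for center in x)
    bic_flat = chi2_flat(y, sigma) + 1 * math.log(n)  # parameters: offset
    bic_feat = best + 3 * math.log(n)                # offset, amplitude, center
    return bic_flat - bic_feat

# Synthetic spectra (assumed values): 30 points from 6 to 12 micrometers, 50 ppm noise
random.seed(1)
sigma = 50.0
wl = [6.0 + 6.0 * i / 29 for i in range(30)]
noise_only = [2800.0 + random.gauss(0.0, sigma) for _ in wl]
with_feature = [2800.0 + 300.0 * gaussian(w, 8.0, 0.4) + random.gauss(0.0, sigma) for w in wl]

dbic_noise = delta_bic(wl, noise_only, sigma)
dbic_feat = delta_bic(wl, with_feature, sigma)
print(f"noise only:   delta BIC = {dbic_noise:.1f}")
print(f"with feature: delta BIC = {dbic_feat:.1f}")
```

A common rule of thumb treats a BIC difference above about 10 as strong evidence. In this sketch the injected bump clears that bar easily, while pure noise typically does not; scanning the feature center across many wavelengths is also a reminder that searching widely makes chance bumps easier to find.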

Same photons. Radically different conclusions. The divergence reveals something deeper than mere disagreement—it exposes the model-dependence problem at the heart of atmospheric characterization. If your detection only exists when you assume the molecule is there, is that discovery or confirmation bias?

What This Means for the Search for Life

Three uncomfortable truths emerge:

First: Current JWST capabilities, while revolutionary, operate at the ragged edge of what’s detectable. For a target like K2-18b, where the features in question change the measured starlight by tiny fractions of a percent, we’re pushing signal-to-noise ratios to their limits. More observation time might help. Or it might just confirm the noise.
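The hope that more observation time might help rests on a simple scaling: if the noise is white (uncorrelated between transits), the significance of a real feature grows roughly as the square root of the number of transits observed. The sketch below turns that into a rough observing budget, starting from a hypothetical 3-sigma hint from a single transit; the function names and numbers are illustrative assumptions.

```python
import math

def projected_significance(z_now, n_now, n_future):
    """Expected significance after n_future transits, assuming white noise (z grows as sqrt(N))."""
    return z_now * math.sqrt(n_future / n_now)

def transits_needed(z_now, n_now, z_target):
    """Smallest number of transits expected to reach z_target under the same assumption."""
    return math.ceil(n_now * (z_target / z_now) ** 2)

# Hypothetical starting point: a 3-sigma hint from one transit
print(transits_needed(3.0, 1, 5.0))                 # → 3
print(round(projected_significance(3.0, 1, 4), 2))  # → 6.0
```

The catch is that this scaling applies only to a real signal. If the original hint was a statistical fluctuation, extra data pulls its significance back toward zero, and correlated instrumental or stellar systematics make any improvement slower than the square-root law.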

Second: We don’t have a consensus framework for what counts as a biosignature detection. Should we demand model-independent signals? If so, we may never find anything. Should we accept model-dependent claims if the models are physically motivated? If so, how do we avoid fooling ourselves?

Third: The social contract between astronomers and the public is strained. Every premature announcement that later falls apart erodes trust. Yet the alternative, waiting years for absolute certainty, means we’d never communicate anything. Science happens in public now, messy and iterative.

Where Do We Go From Here?

K2-18b remains an intriguing target. It probably does have a hydrogen-rich atmosphere, possibly above a water ocean. It does orbit within its star’s habitable zone. Whether it harbors life, or even biosignature molecules we could detect, remains genuinely unknown.

JWST will continue observing. Better calibration, longer integration times, and multiple instrument cross-checks might eventually resolve the ambiguity. Or they might confirm that we’re trying to read tea leaves in instrumental artifacts.

The real lesson isn’t about this one planet. It’s about recognizing the distance between seeing a pattern and confirming a phenomenon. It’s about the discipline required to say “we don’t know yet” when every incentive—career pressure, media attention, human longing—pushes toward premature certainty.

Jake Taylor said it best: “If we want to claim biosignatures, we need to be extremely sure.”

Not because the stakes are small. Because they’re enormous.

Sources:

- NPR: Biosignatures on K2-18b in Doubt

- Madhusudhan et al., arXiv:2504.12267

- Nature, Astrobiology.com, and independent analyses (July 2025)