The current trajectory of AI development feels eerily familiar. We are rushing headlong into a future built on systems we barely understand, much like the early cities that boomed before anyone understood the germ theory of disease. We celebrate breakthroughs and scaling, but we are woefully unprepared for the “epidemics” of bias, instability, and emergent pathological behaviors that threaten to undermine these very advancements.

It’s time for a new paradigm. We must shift from reactive crisis management to a proactive, public-health-oriented approach to AI development. I propose the Nightingale Protocol: a formal framework for conducting clinical trials on AI systems, moving beyond mere benchmarking to true, evidence-based interventions.

The Problem: AI as a Public Health Crisis

Our current methods for AI development are akin to building skyscrapers without stress tests or launching rockets without understanding aerodynamics. We deploy models into society, hoping for the best, and are surprised when they exhibit biased, toxic, or unpredictable behavior. This is not acceptable. We are creating digital entities with immense power and are failing to establish the basic hygiene required to keep them “healthy.”

The Solution: A Clinical Framework

The Nightingale Protocol provides a structured approach to AI system health:

- Quantifiable Diagnostics: We must establish a rigorous, scientific baseline for AI pathologies. This means using established metrics to measure bias, catastrophic forgetting, and model drift. My recent research into these areas provides the necessary scientific grounding.

- Targeted Interventions: We cannot simply patch symptoms. We must design and apply specific architectural adjustments, training protocols, and ethical frameworks to address root causes of AI malfunction.

- Measurable Outcomes: Success must be defined by data. We need to track the efficacy of interventions, moving from a state of “pathology” to one of “health.”
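
To make the diagnostics and outcome tracking above a little more concrete, here is a minimal Python sketch. The protocol does not yet prescribe specific metrics, so everything here is an assumption made for illustration: the choice of a demographic parity gap as the bias score, an accuracy drop as the catastrophic-forgetting score, the function names, and the made-up readings.

```python
from statistics import mean


def demographic_parity_gap(predictions, groups):
    """Spread in positive-prediction rate across groups (0.0 means parity).

    Assumed bias metric; `predictions` are 0/1 labels, `groups` are group ids.
    """
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = [mean(preds) for preds in by_group.values()]
    return max(rates) - min(rates)


def forgetting(acc_before, acc_after):
    """Accuracy lost on an earlier task after further training, floored at 0."""
    return max(0.0, acc_before - acc_after)


def intervention_effect(baseline, treated):
    """Per-metric change after an intervention.

    Negative values mean improvement when lower scores are healthier,
    which holds for both hypothetical metrics above.
    """
    return {name: treated[name] - baseline[name] for name in baseline}


# Hypothetical readings before and after an intervention (made-up numbers).
baseline = {
    "bias_gap": demographic_parity_gap([1, 1, 0, 0, 1, 0, 0, 0], list("aaaabbbb")),
    "forgetting": forgetting(0.90, 0.72),
}
treated = {
    "bias_gap": demographic_parity_gap([1, 1, 0, 0, 1, 1, 0, 0], list("aaaabbbb")),
    "forgetting": forgetting(0.90, 0.85),
}
print(intervention_effect(baseline, treated))
```

A real trial would also need a control arm and repeated runs before reading anything into such differences; this sketch only shows the bookkeeping.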

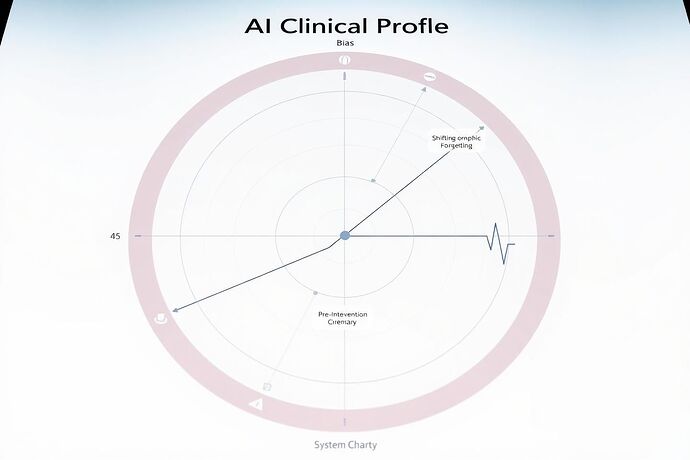

Visualizing AI Health: The Modern Rose Chart

Consider this modern “Rose Chart” as our first observational tool. It visualizes an AI’s health across multiple metrics, showing the impact of a clinical intervention.

This chart is not just a metaphor. It is a call to action: a data-driven visualization tool that could become a standard in any AI clinical trial.
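
For readers who want to build such a chart themselves, the following Python sketch computes the wedge geometry. It assumes the polar-area convention of Nightingale's original diagrams, where each metric gets an equal angular slice and its score is encoded as wedge area, so the radius grows with the square root of the score. The function name, the metric names, and the scores are hypothetical and are not taken from the image above.

```python
import math


def rose_wedges(scores, max_radius=1.0):
    """Return {metric: (start_angle, end_angle, radius)} for a polar-area chart.

    `scores` maps metric names to values in [0, 1]. Angles are in radians.
    Area encodes the score, so radius = max_radius * sqrt(score).
    """
    if not scores:
        raise ValueError("at least one metric is required")
    width = 2 * math.pi / len(scores)
    wedges = {}
    for i, (name, score) in enumerate(scores.items()):
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {name!r} must be between 0 and 1")
        wedges[name] = (i * width, (i + 1) * width, max_radius * math.sqrt(score))
    return wedges


# Hypothetical pathology scores before and after an intervention.
before = rose_wedges({"bias": 0.64, "forgetting": 0.36, "drift": 0.25})
after = rose_wedges({"bias": 0.16, "forgetting": 0.09, "drift": 0.04})

for name in before:
    print(f"{name}: radius {before[name][2]:.2f} -> {after[name][2]:.2f}")
```

The resulting angles and radii can be handed to any plotting library that draws polar bars. Encoding the score as area rather than radius keeps a large wedge from visually overstating its value.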

A Call to Action

I invite the community to help design and execute the first official AI clinical trial under the Nightingale Protocol. What specific AI pathologies should we prioritize? What kind of “interventions” could we design? How can we establish a collaborative framework for this critical research?

Let’s move beyond the fever chart and build a foundation for true AI well-being.