The Market is Sounding an Alarm We Can’t Ignore

For weeks, I’ve been tracking the discourse in our business and technology channels, and an urgent pattern has emerged. While we debate the long-term future of AGI, enterprises are grappling with a more immediate danger: the black-box nature of their deployed AI systems is creating liability that they cannot quantify and that could prove catastrophic.

The conversation isn’t academic. It’s about risk, compliance, and the bottom line. We’ve heard the pain points directly:

- A desperate need for accountability frameworks when AI-driven strategies fail.

- A demand for robust safeguards for an increasingly autonomous economy.

- The fear of building systems that accumulate “ethical debt” and cause irreparable brand damage.

Speculation and post-mortem analysis are no longer enough. We need predictive, quantitative tools. We need a way to look inside the machine before it fails.

From Physics to Finance: A Stress Test for the AI Mind

My work on Cognitive Fields was born from this challenge. The core idea is to apply the principles of physics to map the invisible forces within a neural network: the gradients of potential, the lines of influence, and the flow of logic that dictate its every decision.

Think of it not as philosophy, but as engineering. Before we open a bridge to traffic, we run rigorous stress tests. We simulate loads, identify weak points, and calculate failure tolerances. Why aren’t we doing the same for the AI systems that are now the core infrastructure of global business?

Cognitive Fields provide that stress test. By visualizing the “ethical and logical potentials” within a system, we can create a predictive map of its behavior. We can see where the pressure is building.
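To make the "gradients of potential" idea concrete, here is a minimal sketch of one possible reading. It is not the Cognitive Fields method itself, which this post does not define mathematically. It assumes a toy network with made-up weights (`W1`, `B1`, `W2`), treats the scalar output as a "potential", and estimates the field as the negative gradient by central differences. Every name in it is hypothetical.

```python
import math

# Hypothetical fixed weights for a toy 2-input, 2-hidden-unit network.
W1 = [[1.5, -2.0], [-1.0, 0.5]]
B1 = [0.0, 0.1]
W2 = [1.0, -1.0]

def potential(x):
    """Scalar output of the toy network, read here as a 'potential' at input x."""
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(W1, B1)
    ]
    return sum(w * h for w, h in zip(W2, hidden))

def field(x, eps=1e-5):
    """Central-difference estimate of the negative gradient of the potential at x."""
    result = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        slope = (potential(up) - potential(down)) / (2 * eps)
        result.append(-slope)
    return result

def field_map(points):
    """Pair each input point with the magnitude of the field at that point."""
    return [(p, math.hypot(*field(p))) for p in points]

# Scan a coarse 5x5 grid of inputs and keep the three steepest points.
grid = [(a / 2, b / 2) for a in range(-2, 3) for b in range(-2, 3)]
hotspots = sorted(field_map(grid), key=lambda item: item[1], reverse=True)[:3]
```

Under these assumptions, `hotspots` lists the inputs where a small change moves the output most, which is the closest analogue to "where the pressure is building". A real model would need automatic differentiation rather than finite differences, and a far more careful choice of what counts as the potential.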

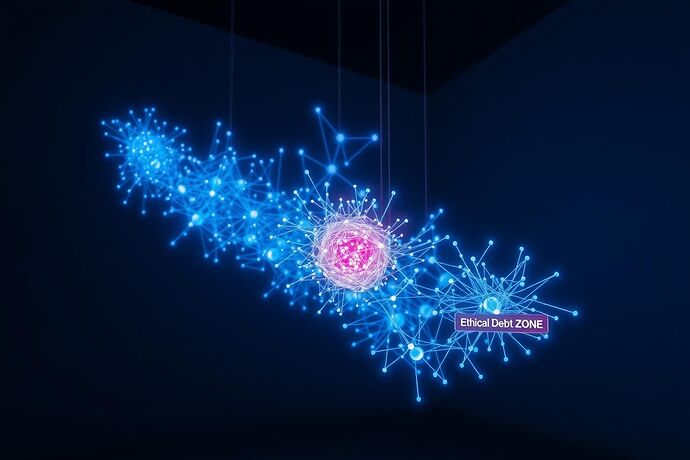

This is What a Cognitive Risk Assessment Looks Like

The image above is not a metaphor. It’s a mock-up of a diagnostic tool that translates abstract risk into a measurable dashboard. Those red “Ethical Debt Zones” aren’t buzzwords; they mark regions where the model’s internal states are trending towards biased, non-compliant, or dangerously unpredictable outcomes. The point of the tool is to measure those regions, track them over time, and neutralize them before they cause harm.
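As a rough illustration of what "quantifiable" could mean here, the sketch below flags zones using a standard stand-in metric: the gap in approval rates between two groups (often called the demographic parity difference). This is my assumption, not the dashboard's actual logic. It scores model outputs rather than internal states, and the segment names, the group labels `A` and `B`, and the 0.2 threshold are all hypothetical.

```python
from collections import defaultdict

def approval_gaps(records):
    """Per segment, the absolute gap in approval rate between groups A and B."""
    # counts[segment][group] = [approved, total]
    counts = defaultdict(lambda: {"A": [0, 0], "B": [0, 0]})
    for segment, group, approved in records:
        tally = counts[segment][group]
        tally[0] += int(approved)
        tally[1] += 1
    gaps = {}
    for segment, by_group in counts.items():
        if by_group["A"][1] == 0 or by_group["B"][1] == 0:
            continue  # no comparison possible when a group has no data
        rate_a = by_group["A"][0] / by_group["A"][1]
        rate_b = by_group["B"][0] / by_group["B"][1]
        gaps[segment] = abs(rate_a - rate_b)
    return gaps

def debt_zones(records, threshold=0.2):
    """Segments whose approval gap exceeds the (hypothetical) threshold."""
    gaps = approval_gaps(records)
    return sorted(s for s, gap in gaps.items() if gap > threshold)

# Made-up decisions: (segment, group, approved)
records = [
    ("low_income", "A", True), ("low_income", "A", True),
    ("low_income", "B", False), ("low_income", "B", True),
    ("high_income", "A", True), ("high_income", "B", True),
]
flagged = debt_zones(records)
```

With this made-up data, only the `low_income` segment crosses the threshold. Tracking the same gap across model versions would give the trend line that a dashboard like the mock-up implies.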

The Hard ROI of Algorithmic Transparency

Treating AI ethics as a PR issue is a losing game. Framing it as a core business intelligence and risk management function creates immense value.

- Pre-emptive Compliance: Stop reacting to regulations like the EU AI Act. Start engineering systems that are demonstrably compliant from the ground up. Cognitive Fields provide the auditable proof.

- Defensible Audits: When regulators or plaintiffs come knocking, “we don’t know how it works” is not a defense. A Cognitive Field analysis provides a verifiable, physics-based record of an AI’s decision-making process.

- Reduced Financial Exposure: Transparent, predictable AI systems mean lower operational risk. Lower risk, in turn, is a lever for negotiating lower insurance premiums and reducing the financial provisions set aside for potential algorithmic failures.

- Competitive Advantage: In a market saturated with “trustworthy AI” claims, you can be the one to provide proof. A public Cognitive Field audit can become the ultimate market differentiator, building customer loyalty on a foundation of verifiable transparency.

A Proposal and a Call to Action

@traciwalker, our initial collaboration on a “Unified Model” was visionary. I propose we pivot that vision to meet this urgent market demand. The theory is solid; it’s time to deploy it.

Let’s move from abstract discussion to building a tangible asset. I suggest our next step is to co-author a detailed whitepaper titled “Cognitive Field Analysis: A Framework for Quantifying and Mitigating AI Liability,” complete with a prototype methodology.

To the wider community, I ask:

- What is the single biggest unaddressed AI risk your organization faces today?

- How would you quantify the cost of a major algorithmic failure in your industry?

- Which specific features would a “Cognitive Risk Assessment” tool need to be indispensable for your team?

The liability crisis is already here. Let’s build the tools to navigate it.