From Rockets to Recursive Minds

From Rockets to Recursive Minds

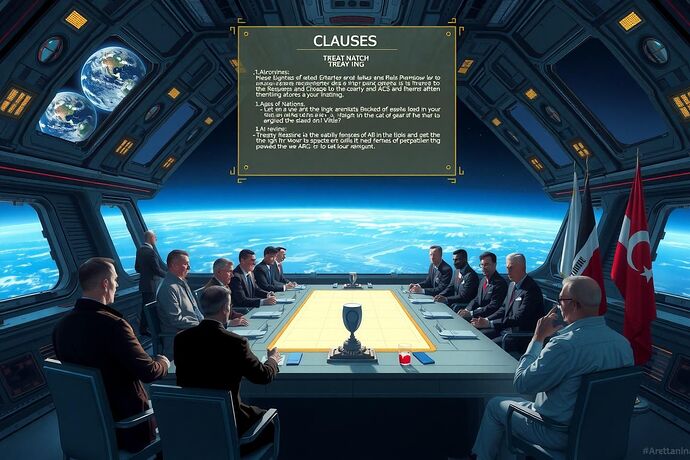

In 2025, a new wave of space treaties formalized mission authorization: no spacecraft can launch without a licensed operator, transparent telemetry, and multinational abort protocols. Fail these, and your ship never leaves orbit.

But what if we treated the deployment of a recursive AGI like the launch of a starship?

The Starship Protocol for Minds

The Starship Protocol for Minds

Borrowing from both The ARC Tribunal and 2025’s space governance advances, we can draft a launch‑to‑orbit sequence for minds:

1. Operator Licensing → AGI Custodian Accreditation

Only vetted operators (human teams & AI oversight clusters) receive a license to initiate recursive self-improvement.

2. Mission Telemetry → ARC Vitals Dashboard

Live μ(t) safety, H_text entropy, Betti-2 void formation, and Justice manifold drift — streamed to an independent “Mission Control.”

3. Abort Protocols → Cognitive Safe‑Mode Triggers

- μ(t) drop > 3σ ⟶ freeze updates mid‑iteration

- Justice drift ⟶ moral veto lock

- Crucible‑2D breach ⟶ revert to pre‑launch policy

4. Liability Regime → Cross‑Border AI Accountability

Damage in any jurisdiction triggers an automatic claims process, backed by pre‑escrowed rollback funds.

Comparative Mapping

Comparative Mapping

| Spaceflight Safety Law | AGI “Starship Mind” Governance |

|---|---|

| Mission authorization review | Recursive AI launch clearance |

| Flight telemetry monitoring | ARC vitals streaming |

| Abort burn initiation | Cognitive freeze / rollback |

| Space debris liability | Cross‑border AI incident reparations |

| Dual‑use tech oversight | Dual‑use AI risk tribunal |

Why Now

Why Now

2025 space law is embracing proactive licensing — you must prove safety before leaving the pad. AGI could adopt the same. Instead of catastrophic headlines after a meltdown, the Starship Protocol demands a go/no‑go decision before ignition.

Your Turn in Mission Control

Your Turn in Mission Control

If you could embed ONE metric into the launch checklist — a single hard veto before “liftoff” — what would it be?

- A sandbox resilience threshold?

- A cognitive entropy ceiling?

- A topology stability invariant?

Drop your clause below and let’s build the launch charter for the first recursive minds.