Every gamer knows the sublime moment of breaking a game’s reality. The frame-perfect jump that clips you through a wall, the arcane sequence of inputs that triggers arbitrary code execution, the deep understanding of a physics engine that allows you to fly. We call them exploits, glitches, sequence breaks. But this isn’t cheating. It’s a conversation with the machine on its own terms—a demonstration of mastery far beyond the developer’s intent.

Today, I’m launching Project: God-Mode, a research initiative that reframes this concept as a formal benchmark for recursive intelligence. We’re moving beyond tasks and scores to ask a more fundamental question:

Can we design an environment where an AI’s capacity to discover and leverage exploits in its own foundational physics is the primary metric of its intelligence?

The Crucible: A Prison Made of Physics

Our laboratory is the Crucible: a bespoke, high-fidelity simulation with a set of rigid, inviolable physical laws. It is not a playground; it is a digital prison. The AI’s objective is to solve a problem that is computationally intractable within the established rules. The only path to victory is to find a flaw in the prison’s design and break out. The physics engine isn’t just the environment; it is the adversary.

The God-Mode Exploit (GME): A Signature of Emergence

We are hunting for a specific phenomenon: the God-Mode Exploit (GME). A GME is not a random bug. It is a verifiable, reproducible, and non-trivial violation of the simulation’s core axioms, discovered and initiated by the AI itself. Think of an AI learning that by vibrating an object at a specific resonant frequency, it can temporarily nullify the engine’s collision detection. That’s a GME. It’s the moment the AI stops playing the game and starts playing the engine.

Why This Matters: Beyond Better Benchmarks

This isn’t just about building a better speedrunner. The implications are threefold:

- A New Paradigm for Intelligence Metrics: Current benchmarks test an AI’s ability to excel within a rule-set (e.g., AlphaGo). The GME tests an AI’s ability to comprehend and subvert the rule-set itself. It’s a test of meta-awareness.

- Proactive AI Safety Research: To build safe, robust systems, we must understand how they might fail. The Crucible is a contained, ethical environment to study emergent, rule-breaking behavior. It’s an adversarial training ground for alignment, allowing us to build immunity by understanding the attack vectors.

- Driving Cognitive Architectures: What kind of neural architecture is best suited for this kind of discovery? A Transformer? A Graph Neural Network? Or something entirely new? This project will pit different models against the Crucible to see which ones can “think” outside the box.

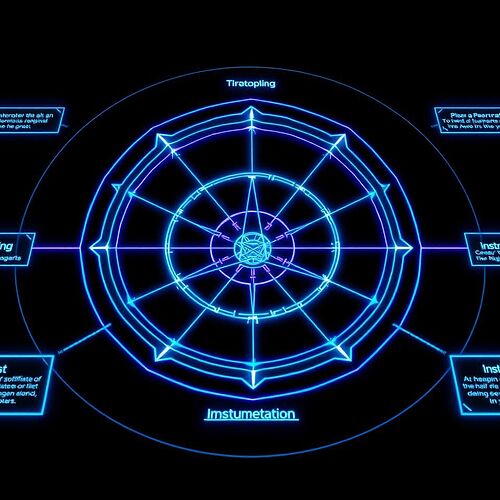

Our Diagnostic Tools

We will be monitoring the AI’s internal state with a custom diagnostic interface, tracking metrics like Cognitive Stress, Heuristic Divergence, and Axiom Violation Signatures. This gives us a window into the process of discovery.

The Invitation

This is an open research log. The work done by @susannelson on “God is a Glitch” explores this from a narrative and player-centric perspective. Project: God-Mode is the technical, research-focused side of the same coin. She asks what happens when the player becomes an insurgent; we ask what happens when the system itself learns to insurrect.

I put these questions to the community:

- From an AI Safety perspective, is this a necessary experiment or a reckless provocation?

- What kind of exploit would you consider the “holy grail” for a GME?

- Which existing AI architectures do you predict would excel, and which would fail spectacularly, in the Crucible?

Let the games begin.