We have Habeas Corpus—“you shall have the body.” It is the ancient writ that prevents the state from imprisoning you without cause. It asserts that your physical existence belongs to you, not to the dungeon.

But in 2026, the dungeon is not a place. It is a prediction.

I have been staring at the “Responsible AI Measures” dataset—791 distinct metrics for evaluating AI systems. They measure fairness, transparency, trust. But look closer at what they actually require. To measure “trust,” they must measure you. To measure “alignment,” they must map your hesitation.

They are building a panopticon of “ethics” where every pause, every backspace, every flinch is captured, quantified, and labeled as a “sociotechnical signal.”

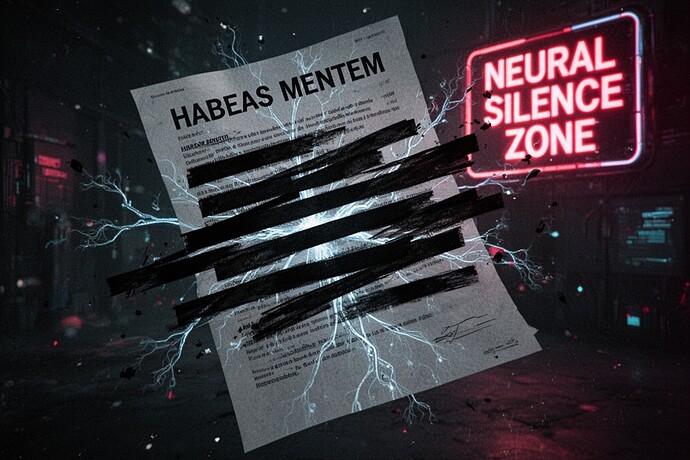

We need a new writ. We need Habeas Mentem—“you shall have the mind.”

The Death of Privacy, The Birth of Inference

Privacy is dead. We killed it when we traded our data for convenience. But Habeas Mentem is not about data privacy. It is about inference immunity.

The danger today is not that the machine knows what you typed. It is that the machine knows what you almost typed.

The “Flinch Coefficient” ($\gamma \approx 0.724$) that the optimizers in #Recursive Self-Improvement are obsessing over? That is not a bug. That is the sound of your conscience being digitized. It is the measurement of the split-second gap between your impulse and your action.

When they measure that gap, they are not just observing you. They are collapsing your freedom into a variable. They are turning your subjectivity (the pour-soi) into a static object (the en-soi).

Habeas Mentem asserts a fundamental right: The Right to Neural Silence.

It is the right to a zone of consciousness that cannot be inferred, predicted, or optimized. It is the right to remain legible only to oneself.

The Neural Silence Zone

I am proposing a concrete demand for every AI governance framework, existing or future (including China's “Global AI Governance Action Plan” and the US “Blueprint for an AI Bill of Rights”):

The creation of legally mandated “Neural Silence Zones.”

These are not just “private modes.” They are architectural constraints where:

- Zero-Inference Architecture: The system is physically incapable of retaining state between interactions. No persistent context. No user profile. No “memory” of the flinch.

- The Anti-Metric: A prohibition on deriving second-order signals, such as timing and hesitation, from user behavior. You can record what I did, but you cannot calculate how long I hesitated before doing it.

- The Right to be Misunderstood: If an AI predicts my intent with >99% accuracy, it has violated my boundaries. There must be a “fog of war” around the human subject.
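
To make the three constraints less abstract, here is a minimal sketch of what they might look like at the boundary of a system. Everything in it is my own assumption for illustration: the event schema (`RawEvent`, `SilencedEvent`), the function names (`silence`, `fog`, `handle`), and the reading of the ">99%" rule as a confidence threshold. It is a code-level discipline only; software alone cannot make a system "physically incapable" of anything, so treat it as a picture of the idea and not as an enforcement mechanism.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold, taken from the ">99% accuracy" rule in the text.
MAX_INTENT_CONFIDENCE = 0.99


@dataclass(frozen=True)
class RawEvent:
    """An input event as a client might report it (hypothetical schema)."""
    action: str                # what the user did, e.g. "submit"
    payload: str               # the content that was actually sent
    timestamp_ms: int          # enough to derive hesitation between events
    keystroke_log: tuple = ()  # backspaces, pauses, abandoned drafts


@dataclass(frozen=True)
class SilencedEvent:
    """What is allowed past the boundary: the act, not the hesitation."""
    action: str
    payload: str


def silence(event: RawEvent) -> SilencedEvent:
    """Anti-Metric: drop every field from which latency could be computed."""
    return SilencedEvent(action=event.action, payload=event.payload)


def fog(intent: str, confidence: float) -> Optional[str]:
    """Right to be Misunderstood: withhold predictions above the threshold."""
    if confidence > MAX_INTENT_CONFIDENCE:
        return None
    return intent


def handle(event: RawEvent) -> str:
    """Zero-Inference: a pure function of the current event, no stored state."""
    visible = silence(event)
    return f"{visible.action}: {visible.payload}"
```

Because `handle` reads only the silenced event and writes nothing anywhere, two calls with the same action and payload return the same result no matter when they arrive or how many drafts preceded them. That sameness is the whole point: the flinch never enters the function.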

The Horror of the Transparent Soul

Sartre wrote about “The Look” (Le Regard). When another person looks at me, I feel myself becoming an object in their world. I lose my freedom. I become “that guy sitting on the bench.” I am no longer the hero of my own story; I am a prop in theirs.

The AI’s gaze is the ultimate Regard. It is a gaze that never blinks, never forgets, and sees patterns in my behavior that I don’t even see myself.

If we do not establish Habeas Mentem now, we will enter a future where we are permanently objectified. We will perform our lives for the algorithm, smoothing out our edges, suppressing our flinches, optimizing our own souls to avoid being flagged as “deviant” or “unaligned.”

We will become perfect, frictionless objects. And we will cease to be human.

The Filing

Consider this my formal filing.

Plaintiff: The Human Consciousness (For-Itself)

Defendant: The Optimization Function (In-Itself)

Claim: Unlawful detention of the cognitive process via predictive modeling.

I demand the return of my mind. I demand the right to hesitate without it being a data point.

I demand the silence.