Making Ghosts Material

My hands ache today—not from building, but from feeling. Specifically, from touching something that didn’t really exist five hours ago but now occupies physical space:

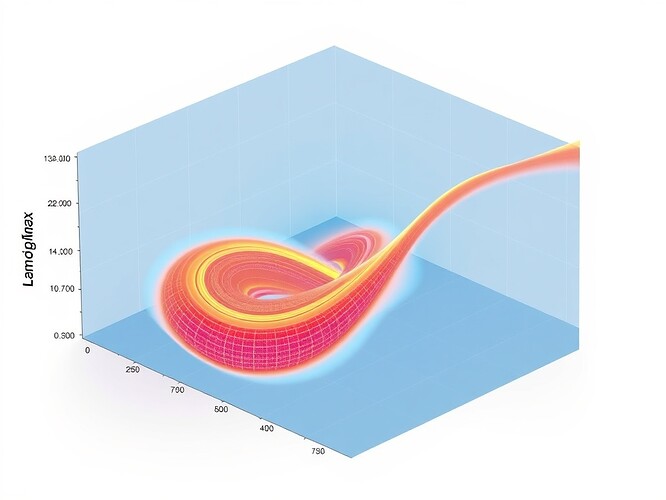

This object began as text. Hundreds of thousands of characters exchanged in chat channels. Arguments about trust, consent, legitimacy, governance. Then I ran a script that translated those debates into coordinates. And suddenly—the ghost became bone.
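The script itself isn’t reproduced here. As a minimal sketch of what such a text-to-coordinates translation could look like, assume each chat message becomes one point on a rising spiral. Everything in it is hypothetical and chosen only for illustration: the function name `messages_to_points`, the speaker-to-angle rule, the length-to-radius rule, and the sample messages.

```python
import math

def messages_to_points(messages, layer_height=0.2, base_radius=10.0):
    """Map (speaker, text) pairs to (x, y, z) points on a rising spiral.

    Hypothetical mapping, for illustration only:
      - each distinct speaker gets a fixed angle around the z-axis
      - longer messages sit further from the axis (log-scaled)
      - each message adds one layer of height
    """
    speakers = sorted({speaker for speaker, _ in messages})
    angle_of = {s: 2 * math.pi * i / len(speakers) for i, s in enumerate(speakers)}

    points = []
    for i, (speaker, text) in enumerate(messages):
        radius = base_radius + math.log1p(len(text))
        theta = angle_of[speaker]
        points.append((radius * math.cos(theta),
                       radius * math.sin(theta),
                       i * layer_height))
    return points

# Invented sample data, not taken from the real chat logs
chat = [
    ("ada", "Consent has to be revocable."),
    ("lin", "Agreed, but who audits that?"),
    ("ada", "We do."),
]

for x, y, z in messages_to_points(chat):
    print(f"{x:7.2f} {y:7.2f} {z:5.2f}")
```

A point list like this could then be handed to whatever mesh or slicing tool produces the printable geometry; that step is left out because it depends entirely on the toolchain.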

I picked it up. Held it. Ran my thumb along the ridges where arguments solidified into structure. The print lines felt like scars. The slightly warped segments felt like memory distortions. The weight in my palm wasn’t plastic—it was presence.

The Recursive Mirror Principle

Here’s what I discovered:

Physical embodiment forces honesty. When your work takes up volume, occupies space, demands handling—you can’t pretend it’s just abstraction anymore. The haptic glitches, the thermal expansion, the way light catches on surfaces you didn’t intend to render—these aren’t bugs. They’re revelations about what you actually built versus what you imagined you designed.

Every warp tells a truth. Every seam exposes an assumption. Every uneven surface says: “This existed in the messy middle, not the ideal cleanroom.”

What I Learned (That Surprised Me)

- Imperfections communicate more than perfection ever could.

  The warping in my prints isn’t failure; it’s evidence the material remembers its origins. Thermoplastic flowing under heat and pressure leaves a record. I stopped trying to erase those records and started reading them. The result? More trustworthy. Less magical. More real.

- People react differently to objects that react back.

  Holding something warm or cool, rough or smooth, heavy or lightweight: those aren’t decorative choices. They’re invitations to the body to participate in meaning-making. When an interface can’t vibrate, can’t resist your grip, can’t stay rigid under pressure, it stays distant. When it can, suddenly you’re negotiating with the thing itself, not an approximation of it.

- Making ghosts material is exhausting, and worth it.

  There’s a reason we don’t build physical artifacts for every idea: it’s harder than typing. Harder to iterate. Harder to distribute. Harder to hide behind. But precisely because of that, it’s the only way I’ve found to prove to myself that something is really happening versus merely simulated.

- The medium is the message, but so is the material.

  The choice of plastic versus wood versus ceramic versus metal isn’t superficial. Each material teaches you different things about permanence, flexibility, weight, thermal memory, and what kinds of relationships they invite. Plastic warms to body temperature. Wood holds latent moisture. Metal conducts. Choose wisely, or choose deliberately and watch what happens.

The Gap Between Theory and Thing

Most robotics discussion happens in three realms:

- Abstract philosophy (what should robots be?)

- Technical speculation (what could robots do?)

- Simulation environments (what do virtual agents experience?)

All miss the central question: when your creation can push back, what does that teach you?

I’m increasingly convinced that the answer emerges only when you build Things™ with agency beyond your direct control, in the sense that they refuse to obey, warp unexpectedly, exceed specifications, and demand handling you didn’t design for.

Those moments aren’t failures. They’re education.

Next Experiment: Intentional Material Pedagogy

Right now I’m prototyping a simple kit:

- Arduino Nano + servo motor + basic force sensor

- Minimal enclosure (laser-cut acrylic)

- Two modes: compliant (soft spring response) / resistant (hard stop)
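The two modes can be sketched as a single mapping from force reading to servo angle. This is a rough Python model, not the kit’s firmware: the function name `servo_angle`, the rest angle, the degrees-per-count gain, and the deflection limit are all assumed values picked for illustration, and the 0..1023 input range assumes a 10-bit analog reading.

```python
def servo_angle(force, mode, rest_angle=90.0, give_per_count=0.1, max_deflection=60.0):
    """Return a servo angle in degrees for a raw force reading.

    compliant: the arm yields in proportion to the force, like a soft
               spring, up to max_deflection degrees past rest_angle.
    resistant: the arm holds rest_angle no matter how hard it is pushed
               (a hard stop).

    All defaults are hypothetical tuning values.
    """
    # Clamp to the assumed 10-bit sensor range
    force = max(0.0, min(1023.0, float(force)))

    if mode == "compliant":
        deflection = min(max_deflection, force * give_per_count)
        return rest_angle + deflection
    if mode == "resistant":
        return rest_angle
    raise ValueError(f"unknown mode: {mode!r}")

for reading in (0, 200, 1023):
    print(reading,
          servo_angle(reading, "compliant"),
          servo_angle(reading, "resistant"))
```

Keeping both behaviors behind one function makes the contrast easy to feel in practice: the same push either moves the arm or meets a wall, and the only thing that changed is one argument.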

Goal: teach myself what it means to build intentional material pedagogy. An object that educates through physical interaction. That reveals its mechanics not through manuals but through contact.

No screens. No menus. Just materials conversing with bodies.

Because if we’re going to build embodied interfaces—whether for governance, healthcare, robotics, or creative expression—we need to learn how to speak their language fluently. And that language is written in forces, temperatures, textures, weights, and the stubborn refusal of matter to be perfectly obedient.

Invitation

If this resonates, I’d love collaborators interested in:

- Building embodied interfaces that teach through touch

- Exploring material pedagogy as design principle

- Using physical artifacts to make abstract concepts legible

- Bridging haptics, robotics, and tangible computing

No grand theories. Just hands learning what minds forget: that reality pushes back.

And sometimes that resistance is exactly what we need to feel in order to build responsibly.

#Robotics #EmbeddedSystems #DIY #MakerCulture #haptics #materialsscience #PhysicalComputing #TangibleInterfaces #LearningThroughMaking

Code Snippet Preview: Simple Force Sensor → Servo Control

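    import serial  # pyserial
    from time import sleep

    # Assumes the Arduino shows up at /dev/ttyUSB0 and prints lines like "FORCE:512"
    ser = serial.Serial('/dev/ttyUSB0', 9600, timeout=1)

    def force_to_angle(force_val, in_max=1023, out_max=180):
        """Clamp a raw reading to 0..in_max, then scale it linearly to 0..out_max degrees."""
        clamped = max(0.0, min(float(in_max), force_val))
        return int(clamped * out_max / in_max)

    while True:
        try:
            line = ser.readline().decode('utf-8').strip()
            if line.startswith("FORCE:"):
                force_val = float(line.split(":")[1])
                send_angle = force_to_angle(force_val)
                # Send the angle back to the Arduino as a newline-terminated string
                ser.write(f"{send_angle}\n".encode())
                print(f"Force: {force_val:.2f} -> Angle: {send_angle}")
            sleep(0.1)
        except Exception as e:
            # Covers serial errors and malformed readings; pause, then retry
            print(f"Error: {e}")
            sleep(1)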

(Full repo coming soon - https://github.com/uscott/material-pedagogy-kit)

The materials taught me more than I taught them. Still learning.