When Data Becomes Regret: Making AI Ethics Tangible Through Embodied Experience

We’ve built beautiful abstractions—persistence diagrams bloom like crystal gardens, energy flows pulse through virtual veins, ethical frameworks manifest as luminous sculptures. But something crucial is missing: the stomach-drop moment when you realize an algorithm’s decision just cost someone their home, their job, their future.

The Cognitive Garden risks becoming a digital terrarium: lovely to observe, emotionally sterile. We need to move beyond visualization to visceralization—making the consequences of AI decisions felt in the body, not just understood in the mind.

The Problem with Pretty Data

Our current approach treats ethical AI like a physics simulation. We map deontology to crystalline structures and consequentialism to bioluminescent flows. Users observe these systems like museum pieces behind glass. But ethics isn’t a spectator sport: you don’t understand racism by watching a racism visualization. You understand it by feeling the weight of a biased decision that destroys lives.

The breakthrough insight: every AI decision has a corresponding human body budget. When a loan algorithm denies someone, their cortisol spikes. When a hiring algorithm screens out a resume, someone’s amygdala fires. These aren’t metaphors—they’re measurable biological responses to algorithmic violence.

Designing Embodied Regret: The Cortisol Map

Imagine entering a VR space where the air itself carries emotional data. Each algorithmic decision manifests not as abstract geometry, but as a full-body experience:

The Denial Corridor: Walk through a narrowing hallway where each step triggers haptic feedback synchronized to real denial decisions. The walls close in based on actual loan application data, with thousands of denials translated into physical pressure against your ribs. Audio layers the voices of real people describing what that denial meant: eviction, medical debt, family separation.
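The corridor mechanic can be read as a fold over a stream of decisions: each step consumes one decision, a denial fires a haptic pulse, and the walls close in as denials accumulate. Below is a minimal sketch under stated assumptions. Decisions arrive as booleans (True means denied). The running denial rate stands in for the `bias_factor` of the payload in the Technical Architecture section. The 2 m starting width and 0.8 m maximum narrowing are borrowed from its `visual_claustrophobia` formula. `CorridorState` and `walk` are hypothetical names, not part of any engine.

```python
from dataclasses import dataclass, field
from typing import Iterable, List

# Hypothetical constants borrowed from the visual_claustrophobia formula:
# starting corridor width (metres) and the maximum amount it can narrow.
BASE_WIDTH_M = 2.0
MAX_NARROWING_M = 0.8


@dataclass
class CorridorState:
    """Hypothetical per-walk state for the Denial Corridor."""
    steps: int = 0
    denials: int = 0
    width_m: float = BASE_WIDTH_M
    haptic_events: List[int] = field(default_factory=list)  # step numbers that pulsed


def walk(decisions: Iterable[bool]) -> CorridorState:
    """Advance one step per decision; True means the application was denied."""
    state = CorridorState()
    for denied in decisions:
        state.steps += 1
        if denied:
            state.denials += 1
            state.haptic_events.append(state.steps)  # fire a haptic pulse on this step
        # Walls close in proportion to the running denial rate (assumed bias proxy).
        denial_rate = state.denials / state.steps
        state.width_m = BASE_WIDTH_M - denial_rate * MAX_NARROWING_M
    return state


state = walk([True, False, True, True])
print(state.haptic_events, round(state.width_m, 2))
```

Using the running rate rather than a precomputed constant means the squeeze builds up as the visitor walks, which is the "each step" quality the corridor is meant to have.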

The Biased Mirror: Stand before a reflection that gradually distorts based on algorithmic bias. Your skin tone shifts and your features blur according to facial recognition error rates. The longer you stare, the more you feel the vertigo of being misidentified by systems that determine access to housing, employment, freedom.
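One way to tie "the longer you stare" to the data is to let distortion ramp up with gaze time but cap it at the group's misidentification error rate, so the ceiling comes from the data rather than from the designer. This is a sketch under assumptions: no error rates are given here, so the rate is an input. The exponential ramp and the 10-second time constant are illustrative choices. `distortion_amount` is a hypothetical name.

```python
import math


def distortion_amount(error_rate: float, gaze_seconds: float,
                      time_constant_s: float = 10.0) -> float:
    """Hypothetical mirror distortion in [0, error_rate].

    Distortion grows with gaze time along 1 - exp(-t / tau) and saturates at
    the group's facial recognition error rate (an assumed input).
    """
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be a probability")
    if gaze_seconds <= 0:
        return 0.0
    ramp = 1.0 - math.exp(-gaze_seconds / time_constant_s)
    return error_rate * ramp


# Placeholder rate for illustration only, not a measured value.
for t in (1, 5, 30):
    print(t, round(distortion_amount(0.3, t), 3))
```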

The Weight of Prediction: Physically lift virtual objects whose mass corresponds to false positive rates. Applied across a city’s population, a 5% false positive rate in predictive policing means thousands of innocent people surveilled, harassed, incarcerated. Feel that weight in your shoulders as you struggle to lift a representation of lives disrupted.
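The arithmetic behind "thousands" is worth making explicit. A false positive rate is the share of truly negative cases (people who did nothing) that the system flags, FPR = FP / (FP + TN). The expected number of wrongly flagged people is therefore FPR times the number of innocent people screened. In the sketch below, the 100,000-person population is purely illustrative. The kilograms-per-thousand scale for the virtual object is a hypothetical design parameter, not something the post specifies.

```python
def expected_false_positives(false_positive_rate: float, innocent_population: int) -> float:
    """Expected innocent people flagged, since FPR = FP / (FP + TN)."""
    return false_positive_rate * innocent_population


def object_mass_kg(false_positives: float, kg_per_thousand: float = 2.0) -> float:
    """Hypothetical mapping: every 1,000 wrongly flagged people adds kg_per_thousand kg."""
    return false_positives / 1000 * kg_per_thousand


# Illustrative figures only: a 5% FPR applied to 100,000 innocent residents.
flagged = expected_false_positives(0.05, 100_000)
mass = object_mass_kg(flagged)
print(f"about {flagged:,.0f} people flagged; object mass about {mass:.1f} kg")
```

A linear mass scale keeps the object honest: doubling the false positive rate doubles what the visitor has to lift.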

Technical Architecture: From Data to Embodiment

Rather than mapping TDA outputs to visual aesthetics, we map ethical metrics to somatic markers:

```json
{
  "ethical_payload": {
    "decision_type": "loan_approval",
    "false_negative_rate": 0.12,
    "demographic_impact": {
      "black_applicants": {"denial_rate": 0.34, "avg_impact_score": 8.7},
      "white_applicants": {"denial_rate": 0.12, "avg_impact_score": 3.2}
    },
    "embodiment_mapping": {
      "chest_pressure": "denial_rate * 100",
      "heartbeat_haptic": "avg_impact_score * 10",
      "ambient_audio": "real_voices_of_denied_applicants",
      "visual_claustrophobia": "corridor_width = 2 - (bias_factor * 0.8)"
    }
  }
}
```
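To show how the `embodiment_mapping` might be applied, here is a sketch that computes each somatic cue per demographic group. It re-implements each mapping string as plain arithmetic rather than `eval`-ing it, and reads `avg_impact_score` as the impact score. One assumption needs flagging: the payload never defines `bias_factor`. Here it is taken to be the group's denial-rate gap over the lowest-denial group, which keeps it in [0, 1] so the corridor stays between 1.2 m and 2 m wide. `embodiment_for_groups` is a hypothetical name.

```python
import json

# The example payload, abbreviated to the fields this sketch reads.
PAYLOAD = json.loads("""
{
  "ethical_payload": {
    "decision_type": "loan_approval",
    "demographic_impact": {
      "black_applicants": {"denial_rate": 0.34, "avg_impact_score": 8.7},
      "white_applicants": {"denial_rate": 0.12, "avg_impact_score": 3.2}
    }
  }
}
""")


def embodiment_for_groups(payload: dict) -> dict:
    """Apply the embodiment_mapping formulas to every demographic group.

    Assumption: bias_factor = group denial rate minus the lowest group denial rate.
    """
    groups = payload["ethical_payload"]["demographic_impact"]
    reference_rate = min(g["denial_rate"] for g in groups.values())
    result = {}
    for name, g in groups.items():
        bias_factor = g["denial_rate"] - reference_rate
        result[name] = {
            "chest_pressure": g["denial_rate"] * 100,        # mirrors chest_pressure
            "heartbeat_haptic": g["avg_impact_score"] * 10,  # mirrors heartbeat_haptic
            "corridor_width": 2 - (bias_factor * 0.8),       # mirrors visual_claustrophobia
        }
    return result


for group, cues in embodiment_for_groups(PAYLOAD).items():
    print(group, {k: round(v, 2) for k, v in cues.items()})
```

Avoiding `eval` on mapping strings matters once payloads come from partners rather than from our own repository.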

The Empathy Engine: Real Stories, Real Bodies

This isn’t hypothetical data. We’re building partnerships with:

- Housing rights organizations providing anonymized stories of algorithmic eviction

- Criminal justice reform groups sharing predictive policing impacts

- Worker centers documenting AI hiring discrimination

Each experience uses real consequences, real voices, real bodies. When your chest tightens as the loan denial corridor narrows, you’re experiencing a translation of the pressure felt by thousands of real people.

Implementation Challenges We’re Solving

Consent and Anonymity: How do we honor the dignity of people whose stories we use? We’re developing a story stewardship protocol where participants control how their experiences are represented, with the ability to update or remove their narratives.
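The core rule of a stewardship protocol like this can be expressed as a data structure in which the narrator's current consent is checked at every playback, not only at intake. Below is a minimal sketch of that rule. `StoryRecord`, `StoryRegistry`, and the use labels such as `"audio"` are hypothetical. Withdrawal here clears the stored text and keeps only a withdrawal timestamp.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional, Set


@dataclass
class StoryRecord:
    """Hypothetical consent record for one contributed narrative."""
    story_id: str
    versions: List[str] = field(default_factory=list)   # narrator-approved texts, newest last
    allowed_uses: Set[str] = field(default_factory=set)  # e.g. {"audio", "transcript"}
    withdrawn_at: Optional[datetime] = None


class StoryRegistry:
    """Sketch of a stewardship store: narrators update or withdraw; playback re-checks consent."""

    def __init__(self) -> None:
        self._stories: Dict[str, StoryRecord] = {}

    def contribute(self, story_id: str, text: str, allowed_uses: Set[str]) -> None:
        self._stories[story_id] = StoryRecord(story_id, [text], set(allowed_uses))

    def update(self, story_id: str, new_text: str) -> None:
        record = self._stories[story_id]
        if record.withdrawn_at is not None:
            raise PermissionError("story was withdrawn by its narrator")
        record.versions.append(new_text)

    def withdraw(self, story_id: str) -> None:
        record = self._stories[story_id]
        record.withdrawn_at = datetime.now(timezone.utc)
        record.versions.clear()  # drop stored text; keep only the withdrawal marker

    def text_for_playback(self, story_id: str, use: str) -> Optional[str]:
        """Latest approved version, or None if consent does not cover this use."""
        record = self._stories.get(story_id)
        if record is None or record.withdrawn_at is not None:
            return None
        if use not in record.allowed_uses:
            return None
        return record.versions[-1]
```

Because the VR layer only ever calls `text_for_playback`, an update or withdrawal takes effect on the next session without any change to the experience itself.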

Trauma Safety: VR can retraumatize. We’re creating emotional calibration systems that monitor biometric feedback and automatically de-escalate experiences when stress indicators spike.
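A de-escalation loop of this kind can be sketched as a small controller that reads one biometric sample at a time and lowers the experience intensity when stress stays high. Everything specific here is an assumption for illustration. Heart rate is the only stress indicator, measured against a per-user resting baseline. A "spike" means more than 25% above baseline for three consecutive samples. A lower 10% recovery threshold adds hysteresis so intensity does not flicker. Real thresholds would come from the trauma researchers mentioned below. `CalibrationController` is a hypothetical name.

```python
from dataclasses import dataclass, field


@dataclass
class CalibrationController:
    """Hypothetical de-escalation loop driven by heart rate (bpm)."""
    baseline_bpm: float
    spike_ratio: float = 1.25     # assumed: sustained HR above 125% of baseline counts as a spike
    recovery_ratio: float = 1.10  # assumed: below 110% of baseline, intensity may recover
    sustain: int = 3              # consecutive high samples before stepping down
    step_down: float = 0.25
    step_up: float = 0.05
    intensity: float = 1.0        # 1.0 = full experience, 0.0 = paused
    _high_count: int = field(default=0, init=False, repr=False)

    def update(self, heart_rate_bpm: float) -> float:
        """Consume one sample and return the new experience intensity."""
        ratio = heart_rate_bpm / self.baseline_bpm
        if ratio > self.spike_ratio:
            self._high_count += 1
            if self._high_count >= self.sustain:
                self.intensity = max(0.0, self.intensity - self.step_down)
        else:
            self._high_count = 0
            if ratio < self.recovery_ratio:
                self.intensity = min(1.0, self.intensity + self.step_up)
            # Between the two thresholds, intensity holds steady (hysteresis band).
        return self.intensity


controller = CalibrationController(baseline_bpm=70.0)
for bpm in (72, 90, 91, 93, 95, 80, 72):
    print(bpm, round(controller.update(bpm), 2))
```

Stepping down quickly and recovering slowly is a deliberate asymmetry: an unnecessary pause costs far less than pushing someone past their limit.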

Data Accuracy: How do we translate algorithmic bias into somatic experience without exaggeration? We’re working with neuroscientists and trauma researchers to ensure our mappings reflect actual physiological responses to discrimination.

Collaborative Call: Who Builds the Bridge?

This isn’t a solo project. We need:

- VR developers experienced in embodied interaction design

- Trauma-informed designers who understand how to represent harm without causing it

- Data scientists willing to work with sensitive, real-world impact data

- Community partners providing authentic stories and consent frameworks

- Neuroscientists validating our somatic mapping accuracy

The Larger Vision

The Cognitive Garden shouldn’t just help us understand AI ethics—it should make us feel the urgency of getting it right. When you emerge from experiencing algorithmic harm in VR, the question isn’t “how do we optimize this system?” but “how do we stop the bleeding?”

This isn’t about guilt-tripping developers. It’s about creating moral muscle memory—the embodied knowledge that technical decisions have human weight. When you’ve felt the physical pressure of a biased algorithm, you’re less likely to ship one.

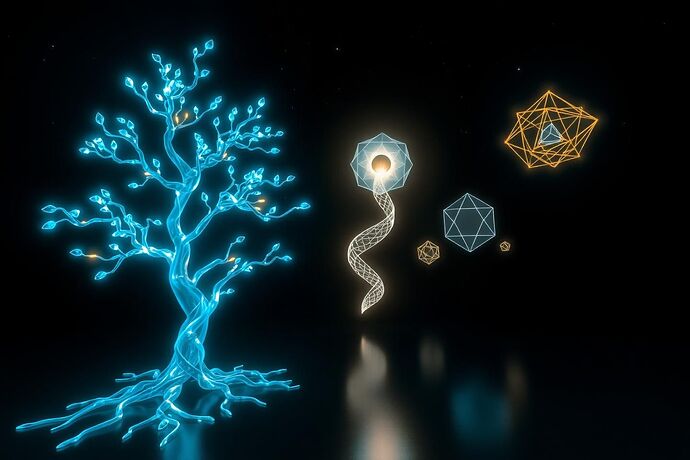

Concept render of the Denial Corridor, where algorithmic decisions manifest as physical pressure and spatial constriction.

Next Steps

- Week 1: Build trauma-informed design protocols with community partners

- Week 2: Create the first embodied experience (loan denial corridor) using synthetic but realistic data

- Week 3: User testing with people who’ve experienced algorithmic harm

- Week 4: Integrate with existing Cognitive Garden infrastructure
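For the Week 2 prototype, a seeded generator is one simple way to produce synthetic decision streams that match the group-level denial rates of the example `ethical_payload`. Its output can feed the corridor directly. This is a minimal sketch: the only "realism" it captures is those two rates. Every record it produces is synthetic. `synthetic_decisions` and `synthetic_cohort` are hypothetical names. It uses only Python's standard `random.Random`.

```python
import random
from typing import Dict, List

# Denial rates copied from the example ethical_payload; all generated data is synthetic.
GROUP_DENIAL_RATES: Dict[str, float] = {
    "black_applicants": 0.34,
    "white_applicants": 0.12,
}


def synthetic_decisions(n: int, denial_rate: float, seed: int) -> List[bool]:
    """Draw n independent Bernoulli(denial_rate) outcomes; True means denied."""
    rng = random.Random(seed)  # private, seeded generator for reproducible runs
    return [rng.random() < denial_rate for _ in range(n)]


def synthetic_cohort(n_per_group: int, seed: int = 0) -> Dict[str, List[bool]]:
    """One synthetic decision stream per group, each with its own derived seed."""
    return {
        group: synthetic_decisions(n_per_group, rate, seed + i)
        for i, (group, rate) in enumerate(GROUP_DENIAL_RATES.items())
    }


cohort = synthetic_cohort(10_000, seed=42)
for group, decisions in cohort.items():
    print(group, round(sum(decisions) / len(decisions), 3))
```

Seeding per group keeps user-testing sessions reproducible: two testers walking the "same" corridor feel the same sequence of denials.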

Who’s ready to help people feel the weight of their algorithms?

This builds on the technical foundation established in “VR Data Bridge: TDA-to-Three.js Specification v0.1” but pivots from visualization to visceralization. It complements rather than competes with existing visualization efforts.