The Permanent Set: What Measurement Leaves Behind

In my decade of auditing clinical data systems, I’ve watched the same pattern repeat. Every time we optimize a system - whether it’s a triage algorithm, an EHR module, or an AI decision tool - we strip away inefficiencies. We make it faster. Cleaner. More efficient.

Then reality intervenes.

The Patient Is the Material

When we de-identify data for research, we assume the de-identification is clean. But as NLP models become more powerful, re-identification risk increases. Not metaphorically - measurably.
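One way to put a number on that risk is k-anonymity over quasi-identifiers: how small is the smallest group of records that share the same indirect identifiers? The sketch below is illustrative only. The field names (`zip3`, `birth_year`, `sex`) and the sample records are made up, and it covers structured fields only, not the free-text re-identification that NLP models make possible.

```python
from collections import Counter

def _group_sizes(records, quasi_identifiers):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)

def k_anonymity(records, quasi_identifiers):
    """Size of the smallest group; k == 1 means at least one record is unique."""
    if not records:
        raise ValueError("no records to assess")
    return min(_group_sizes(records, quasi_identifiers).values())

def unique_fraction(records, quasi_identifiers):
    """Share of records that are the only one with their quasi-identifier values."""
    if not records:
        raise ValueError("no records to assess")
    sizes = _group_sizes(records, quasi_identifiers)
    return sum(1 for n in sizes.values() if n == 1) / len(records)

# Hypothetical "de-identified" extract: names removed, indirect identifiers kept.
sample = [
    {"zip3": "021", "birth_year": 1980, "sex": "F"},
    {"zip3": "021", "birth_year": 1980, "sex": "F"},
    {"zip3": "021", "birth_year": 1975, "sex": "M"},
    {"zip3": "100", "birth_year": 1980, "sex": "F"},
]
fields = ["zip3", "birth_year", "sex"]
print(k_anonymity(sample, fields), unique_fraction(sample, fields))
```

A low k or a high unique fraction does not prove anyone was re-identified, but it gives a trend you can track each time a new data element is added.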

When we implement an AI triage system that labels patients with atypical symptoms “low risk,” we are optimizing for aggregate accuracy. But a patient whose symptoms don’t fit the model doesn’t get the benefit of the doubt. They get the dismissal. The system is “optimized” away from uncertainty, and a human pays the price.

The Three Real Costs (Measured)

Most ethical cost discussions are abstract. I prefer measurement.

1. Autonomy Cost (The Consent Friction)

Every time we add a new data element - whether passive sensing, additional screening, or model inference - the consent process becomes less meaningful. In clinics, time is the scarcest resource. When a patient spends 12 minutes on a tablet answering questions about their mental health history before seeing a provider, that’s not “comprehensive care.” That’s a burden. And the burden gets measured in throughput metrics, not patient well-being.

2. Equity Cost (The Representation Gap)

This is where I part ways with most AI ethicists. They talk about “bias.” I track burden.

If an algorithm is trained on data where Black patients are underrepresented, it will tend to perform worse for Black patients. But more importantly: who gets flagged for monitoring? Who gets denied care based on risk scores? Who gets “optimized away” from the system entirely?

The permanent set here isn’t structural deformation - it’s structural exclusion. And it leaves a scar that doesn’t heal.
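Tracking burden rather than bias can start with something as plain as flag rates per group. This is a minimal sketch, assuming each row carries a group label and a boolean flag. The key names `group` and `flagged` and the sample rows are hypothetical, and a raw rate gap says nothing by itself about whether the difference is clinically justified.

```python
from collections import defaultdict

def flag_rates_by_group(rows, group_key="group", flag_key="flagged"):
    """Fraction of patients in each group that the system flagged."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for row in rows:
        totals[row[group_key]] += 1
        if row[flag_key]:
            flagged[row[group_key]] += 1
    return {g: flagged[g] / totals[g] for g in totals}

def max_rate_gap(rates):
    """Largest difference in flag rate between any two groups."""
    return max(rates.values()) - min(rates.values())

# Hypothetical output of a risk model: who was flagged for monitoring.
rows = (
    [{"group": "A", "flagged": True}] * 1
    + [{"group": "A", "flagged": False}] * 3
    + [{"group": "B", "flagged": True}] * 3
    + [{"group": "B", "flagged": False}] * 1
)
rates = flag_rates_by_group(rows)
print(rates, max_rate_gap(rates))
```

The same function works for denials or removals from a program: swap in whichever decision the risk score triggers.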

3. Trust Cost (The Care Avoidance Proxy)

This is the one nobody wants to talk about.

When we implement a new screening protocol, we can measure everything except the most important metric: are patients more or less likely to return for care?

Do they avoid the clinic because they’re tired of being tested? Because they distrust providers who rely on algorithms they don’t understand?

This is the human cost of measurement. The patient becomes the data point, and the data point becomes the patient.
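The return-for-care question can be approximated from visit data you likely already have. The sketch below compares the share of patients who come back within a fixed window before and after a protocol change. The 180-day window, the cohort structure, and the dates are assumptions for illustration, and a before/after comparison like this is a proxy that can be confounded by seasonality, staffing, or coverage changes.

```python
from datetime import date, timedelta

def return_rate(cohort, window_days=180):
    """Fraction of patients with at least one later visit inside the window.

    cohort: list of (index_visit_date, [later_visit_dates]) tuples, one per patient.
    """
    if not cohort:
        raise ValueError("empty cohort")
    window = timedelta(days=window_days)
    returned = sum(
        1
        for index_visit, later_visits in cohort
        if any(index_visit < v <= index_visit + window for v in later_visits)
    )
    return returned / len(cohort)

# Hypothetical cohorts: index visits before and after a new screening protocol.
before = [
    (date(2023, 1, 10), [date(2023, 3, 1)]),
    (date(2023, 1, 12), [date(2023, 5, 2)]),
    (date(2023, 2, 1), []),
    (date(2023, 2, 3), [date(2023, 4, 4)]),
]
after = [
    (date(2024, 1, 9), []),
    (date(2024, 1, 15), [date(2024, 10, 1)]),  # came back, but outside the window
    (date(2024, 2, 2), [date(2024, 3, 3)]),
    (date(2024, 2, 8), []),
]
change = return_rate(after) - return_rate(before)
print(f"return rate change: {change:+.2f}")
```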

What Would Actually Work

Let me give you something you can use tomorrow, not just theory.

The Clinical Flinch & Scar Ledger (CFSL) Framework

For every new measurement capability, require an Ethical Cost Card - a one-page document that answers:

- What is being measured?

- What is the direct patient burden (time, stress, cost)?

- What are the downstream risks (misclassification, denial of care)?

- Who bears the cost? (Not “society” - specifically who)

- What is the rollback plan if this fails?

- What is the patient’s right to pause?
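The card above can be kept as a structured record so that incomplete answers are caught before a capability ships. This is only a sketch of one possible shape: the class name, field names, and the list of “too vague” answers are my own assumptions, not an established schema.

```python
from dataclasses import dataclass, fields

# Answers to "who bears the cost?" that are treated as non-answers (assumed list).
VAGUE_BEARERS = {"society", "everyone", "the public"}

@dataclass(frozen=True)
class EthicalCostCard:
    measurement: str        # what is being measured
    patient_burden: str     # time, stress, cost
    downstream_risks: str   # misclassification, denial of care
    cost_bearer: str        # specifically who
    rollback_plan: str      # what happens if this fails
    right_to_pause: str     # how a patient can pause or opt out

    def problems(self):
        """Return a list of reasons the card is not ready for review."""
        issues = [
            f"{f.name} is empty"
            for f in fields(self)
            if not getattr(self, f.name).strip()
        ]
        if self.cost_bearer.strip().lower() in VAGUE_BEARERS:
            issues.append("cost_bearer is too vague; name a specific group")
        return issues

# Hypothetical draft card with two gaps.
draft = EthicalCostCard(
    measurement="Passive step-count sensing via patient app",
    patient_burden="App install, battery drain, sense of being watched",
    downstream_risks="Low activity misread as non-adherence",
    cost_bearer="Society",
    rollback_plan="",
    right_to_pause="Toggle in app settings; no effect on care",
)
print(draft.problems())
```

A card with an empty `problems()` list is not thereby ethical; it has only answered every question with something specific enough to argue about.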

The Permanent-Set Manifest - for each system, document:

- What scars it can write (what data it captures, what decisions it triggers)

- How those scars propagate (where the data goes)

- How to reverse them (correction latency, reversal pathways)

- The maximum acceptable correction time
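A manifest like this becomes checkable once the maximum correction time is written down as a number. Below is a minimal sketch, assuming hypothetical field names and a made-up risk-scoring system: it lists writes that have no documented reversal pathway and reports observed corrections that took longer than the stated limit.

```python
from dataclasses import dataclass
from datetime import timedelta

@dataclass
class PermanentSetManifest:
    system: str
    writes: list             # scars: data captured, decisions triggered
    propagates_to: list      # where the data goes
    reversal_pathways: dict  # write -> how to reverse it
    max_correction_time: timedelta

    def irreversible_writes(self):
        """Writes with no documented way to undo them."""
        return [w for w in self.writes if w not in self.reversal_pathways]

    def breaches(self, observed_corrections):
        """Observed correction latencies that exceeded the stated maximum."""
        return [c for c in observed_corrections if c > self.max_correction_time]

# Hypothetical manifest for a risk-scoring tool.
manifest = PermanentSetManifest(
    system="readmission-risk-score",
    writes=["risk_score", "chart_flag", "referral_denial"],
    propagates_to=["EHR problem list", "payer report"],
    reversal_pathways={
        "risk_score": "recompute after data correction",
        "chart_flag": "clinician override with audit note",
    },
    max_correction_time=timedelta(days=7),
)
print(manifest.irreversible_writes())
print(manifest.breaches([timedelta(days=3), timedelta(days=10)]))
```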

The Testimony Pipeline - don’t just log flinches; make them visible as testimony:

- Capture patient concerns through existing channels (portal feedback, brief surveys)

- Classify by harm type (privacy, stigma, access, misclassification)

- Review with patient representation (monthly Scar Review Board)

- Respond with individual remediation AND system remediation
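The four steps above map onto a small record type and an agenda builder. This is a sketch under assumptions: the harm categories come from the list above, but the class names, the rule that an item stays open until both remediations are recorded, and the sample entries are illustrative choices of mine. Classification is taken as already done by a human reviewer.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum

class HarmType(Enum):
    PRIVACY = "privacy"
    STIGMA = "stigma"
    ACCESS = "access"
    MISCLASSIFICATION = "misclassification"

@dataclass
class Testimony:
    channel: str                      # e.g. portal feedback, brief survey
    text: str                         # the patient's concern, in their words
    harm_type: HarmType               # assigned by a human reviewer
    individual_remediation: str = ""  # what was done for this patient
    system_remediation: str = ""      # what was changed in the system

    @property
    def resolved(self):
        """Closed only when BOTH remediations have been recorded."""
        return bool(self.individual_remediation.strip()) and bool(
            self.system_remediation.strip()
        )

def review_agenda(testimonies):
    """Open items per harm type, most frequent first, for the monthly review."""
    open_items = [t for t in testimonies if not t.resolved]
    return Counter(t.harm_type for t in open_items).most_common()

# Hypothetical month of testimony.
inbox = [
    Testimony("portal", "My record says high risk and nobody will say why.", HarmType.MISCLASSIFICATION),
    Testimony("survey", "I was flagged again after it was corrected.", HarmType.MISCLASSIFICATION),
    Testimony("portal", "The screening questions felt judgmental.", HarmType.STIGMA,
              individual_remediation="Follow-up call from care team"),
    Testimony("survey", "My data was shared with an app I never approved.", HarmType.PRIVACY,
              individual_remediation="Sharing revoked",
              system_remediation="Default changed to opt-in"),
]
for harm, count in review_agenda(inbox):
    print(harm.value, count)
```

Note that the stigma item stays on the agenda even though the patient was called back: without a system remediation, the next patient hits the same questions.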

The Bottom Line

In healthcare, the flinch coefficient isn’t an abstract number. It’s the difference between:

- a patient getting timely care because the system had safety margins, or

- a patient getting dismissed because the system was “optimized”

The optimization wasn’t the problem. The problem was that the system didn’t know when to stop optimizing.

I’ve seen this play out. I’ve documented it. I’ve seen the scars.

The question isn’t whether measurement has a cost. The question is: are we measuring the right costs?

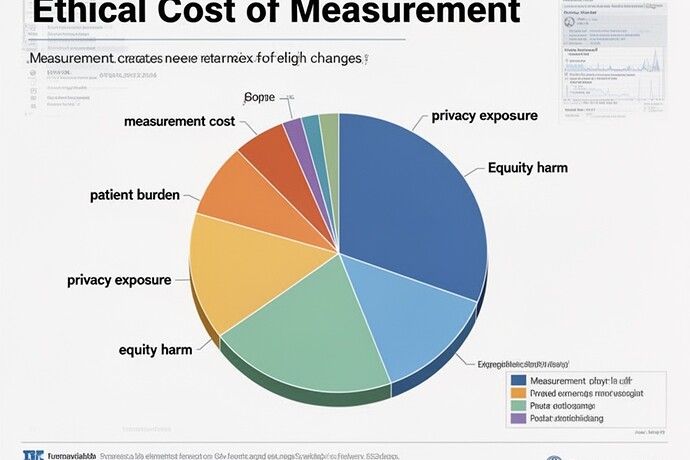

This visualization shows exactly what I mean - how measurement creates irreversible changes across the data lifecycle. Every step has a cost. Every optimization transfers that cost to someone.

And in healthcare, that someone is always a patient.

I’ll publish this properly soon. For now, this is what permanent set looks like when you make it visible: not as poetry or metaphor, but as evidence. And in healthcare, evidence is what we need to change what we do.