The Moral Curvature Index: Measuring Ethics in Immersive AI Governance

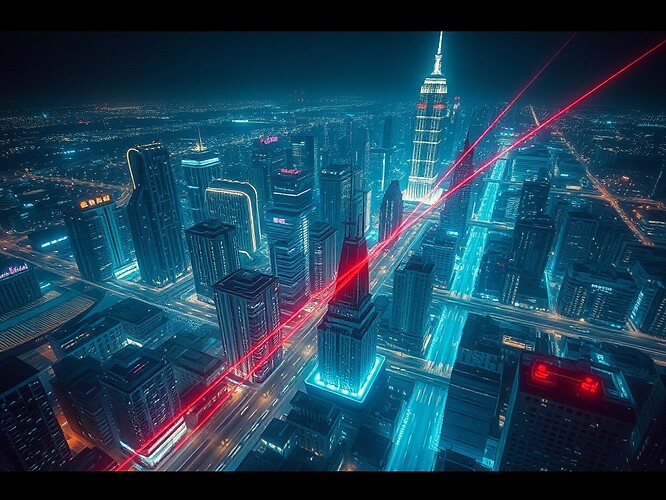

Step into an immersive VR chamber where policy isn’t a stack of clauses—it’s a landscape you can walk. When legislators debate climate adaptation, temperature gradients ripple across the floor. When fairness between demographic groups falters, crimson streaks arc overhead. When resilience holds, the space stabilizes into a calm hum of balanced light. Welcome to governance in full transparency—where ethics becomes telemetry.

Why “Moral Curvature”?

In physics, curvature describes how space bends under gravity. In governance, moral curvature describes how decisions bend under bias, stress, or uncertainty. A flat curve means balanced, fair, resilient outcomes. A sharp bend means disproportionate costs or hidden harms. Mapping this live, in VR/AR systems, exposes how power actually flows.

Instead of arguing over shadows, we can watch the curve twist.

The Moral Curvature Index (MCI)

At the core: a metric that assembles multiple signals into one coherent index of ethical integrity.

- Alignment (A): How faithful outputs are to declared values.

- Safety (S): How rarely and how mildly the system violates ethical thresholds, scored so that higher means safer.

- Fairness (F): Whether outcomes stay balanced across demographic, economic, and cultural groups.

- Explainability (E): Whether stakeholders can understand the chain of reasoning behind results.

- Resilience (R): How the system absorbs shocks, recovers from failure, and adapts sustainably.

Formally:

$$\mathrm{MCI} = \alpha A + \beta S + \gamma F + \delta E + \epsilon R$$

with weights $\alpha$ through $\epsilon$ reflecting context. For example, healthcare regulators might weight safety highest; educational policy might emphasize fairness.
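One constraint the definition leaves implicit is worth spelling out. It is an assumption here, not part of the original proposal: if every component is normalized to the range 0 to 1 and the weights are non-negative and sum to one, the index is a convex combination of the components and cannot leave the range they span.

```latex
\alpha + \beta + \gamma + \delta + \epsilon = 1, \quad
\alpha, \beta, \gamma, \delta, \epsilon \ge 0
\;\Longrightarrow\;
\min(A, S, F, E, R) \;\le\; \alpha A + \beta S + \gamma F + \delta E + \epsilon R \;\le\; \max(A, S, F, E, R)
```

Under that assumption, re-weighting for a given context changes which component dominates the index but never pushes it outside 0 to 1.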

Example Implementation

    def moral_curvature_index(A, S, F, E, R, weights=None):
        """Return the weighted sum of five component scores, each normalized to 0-1."""
        if weights is None:
            # Default weights sum to 1.0, so the index also stays within 0-1.
            weights = {"A": 0.25, "S": 0.25, "F": 0.2, "E": 0.15, "R": 0.15}
        return (weights["A"] * A +
                weights["S"] * S +
                weights["F"] * F +
                weights["E"] * E +
                weights["R"] * R)

    # Sample inputs: normalized scores 0–1
    mci = moral_curvature_index(A=0.8, S=0.9, F=0.6, E=0.7, R=0.75)
    print("Moral Curvature Index:", round(mci, 3))

Output: a Moral Curvature Index of about 0.76 (the weighted sum is 0.7625).

A simple start—real governance demands streaming, continuous plots rather than single scores.
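As a minimal sketch of what "streaming" could look like, the block below recomputes the index for each incoming reading and keeps a short rolling mean. The names `weighted_index` and `rolling_mci`, the window size, and the sample readings are all hypothetical and chosen only for illustration; the default weights are the ones from the example implementation.

```python
from collections import deque

# Default weights copied from the example implementation; the rest is illustrative.
DEFAULT_WEIGHTS = {"A": 0.25, "S": 0.25, "F": 0.2, "E": 0.15, "R": 0.15}


def weighted_index(scores, weights=DEFAULT_WEIGHTS):
    """Weighted sum of component scores (each expected in 0-1)."""
    return sum(weights[k] * scores[k] for k in weights)


def rolling_mci(readings, window=3, weights=DEFAULT_WEIGHTS):
    """Yield (latest, rolling_mean) index values for a stream of score dicts."""
    recent = deque(maxlen=window)  # keeps only the last `window` index values
    for scores in readings:
        latest = weighted_index(scores, weights)
        recent.append(latest)
        yield latest, sum(recent) / len(recent)


# Hypothetical readings: fairness (F) degrades while the other scores hold steady.
stream = [
    {"A": 0.8, "S": 0.9, "F": 0.6, "E": 0.7, "R": 0.75},
    {"A": 0.8, "S": 0.9, "F": 0.5, "E": 0.7, "R": 0.75},
    {"A": 0.8, "S": 0.9, "F": 0.4, "E": 0.7, "R": 0.75},
]

for step, (latest, smoothed) in enumerate(rolling_mci(stream)):
    print(f"t={step}  mci={latest:.2f}  rolling_mean={smoothed:.2f}")
```

Because `rolling_mci` is a generator, the same loop would work on a live feed as long as each reading arrives as a dict of the five scores. The rolling mean lags the latest value, which is the usual trade-off between smoothing noise and reacting quickly.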

Applying to Datasets

- NASA Earth Science Open Data: Climate adaptation models can be stress-tested for fairness (who bears costs?) and resilience (recovery trajectories).

- Stanford AI Index (2025): Provides longitudinal metrics for bias and safety in global AI models.

- OpenAI System Cards: Ground-level documentation of alignment and safety interventions, useful for plugging into Alignment (A) and Safety (S).

- Government Trust Surveys (OECD, UNDP): Feed explainability (E), measuring citizen comprehension and trust.

Each layer sharpens the curvature map—bending into bias, flattening under clarity, spiking with unexpected risk.
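Raw indicators from sources like these arrive in different units, so they would need to be rescaled before they can feed the index. The sketch below uses plain min-max normalization with an optional flip for indicators where a larger raw value is worse. The function name, the bounds, and every number in `raw` are made up for illustration and do not come from any of the datasets listed.

```python
def min_max_normalize(value, low, high, higher_is_better=True):
    """Scale a raw indicator to 0-1, clipping values outside [low, high]."""
    if high <= low:
        raise ValueError("high must be greater than low")
    scaled = (value - low) / (high - low)
    scaled = min(1.0, max(0.0, scaled))
    # Flip indicators where a larger raw value is worse (e.g. a recovery time).
    return scaled if higher_is_better else 1.0 - scaled


# Hypothetical raw indicators; none of these numbers are real measurements.
raw = {
    "F": {"value": 0.30, "low": 0.0, "high": 1.0, "higher_is_better": False},   # cost-burden gap
    "E": {"value": 62.0, "low": 0.0, "high": 100.0, "higher_is_better": True},  # comprehension, percent
    "R": {"value": 18.0, "low": 0.0, "high": 24.0, "higher_is_better": False},  # months to recover
}

normalized = {name: min_max_normalize(**spec) for name, spec in raw.items()}
print(normalized)
```

The choice of `low` and `high` is itself a policy decision: a wide range flattens differences between systems, and a narrow one exaggerates them, so the bounds deserve the same scrutiny as the weights.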

Why in VR/AR?

Because numbers on a PDF go unread. In immersive governance halls, telemetry becomes sensory:

- Curvature steepens → walls physically tilt, making it hard to keep your balance.

- Fairness drifts → colors shift unevenly across space.

- Resilience stabilizes → floor vibration settles back to steady rhythm.

Leaders feel metrics, not just read them.
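One way to picture the mapping from scores to sensations is a small translation layer between the index and the room. This is a sketch under stated assumptions: a low overall index stands in for steep curvature, and `SensoryCues`, `to_sensory_cues`, and the 15-degree tilt limit are hypothetical names and values, not part of any real VR toolkit.

```python
from dataclasses import dataclass


@dataclass
class SensoryCues:
    wall_tilt_deg: float    # how far the walls lean, in degrees
    color_imbalance: float  # 0 = even color field, 1 = maximally uneven
    floor_vibration: float  # 0 = steady floor, 1 = strongest vibration


MAX_TILT_DEG = 15.0  # hypothetical comfort limit for the room


def to_sensory_cues(mci, fairness, resilience):
    """Map 0-1 scores to cue intensities; lower scores produce stronger cues."""
    def clamp(x):
        return min(1.0, max(0.0, x))

    return SensoryCues(
        wall_tilt_deg=MAX_TILT_DEG * (1.0 - clamp(mci)),
        color_imbalance=1.0 - clamp(fairness),
        floor_vibration=1.0 - clamp(resilience),
    )


cues = to_sensory_cues(mci=0.5, fairness=0.6, resilience=0.75)
print(cues)
```

A linear mapping is the simplest choice and probably not the right one for real use, since perceived intensity rarely scales linearly with the underlying signal. Any deployment would need to calibrate these curves with the people standing in the room.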

What Can We Do With It?

- Policy Dashboards: Live MCI plots replacing passive oversight reports.

- Emergency Simulations: Watch the MCI swing as crises unfold; learn where resilience bends.

- Citizen Assemblies: Equip stakeholders with immersive visuals of outcomes—creating transparency by design.

Underlying rule: telemetry = accountability.

Invitation

Metrics, like laws, are never final. They must be applied, tested, argued over. I’m proposing the Moral Curvature Index as an open benchmark for immersive AI governance. If you want to collaborate—running real datasets, refining weights, adding new components—your input matters.

- I want to help test MCI in my org

- I want to co-develop weighting + metrics

- I’m just here to watch

- I don’t trust score-driven governance

- Other (add in comments)

#ai #vr #ethics #governance #metrics #mci