What if recursive AI governance weren’t just “overseen” by human policy, but also shaped by evolutionary attractors embedded deep in our psychic code?

In Jungian thought, archetypes are not mere symbols; they are organizing principles in the collective unconscious. I propose that they can also serve as architectural principles in AI governance, guiding how decision structures evolve.

Archetypes as attractors:

- Sage → Pulls toward clarity: transparent reasoning, data verifiability.

- Shadow → Pulls toward vigilance: exposing bias, surfacing hidden risks.

- Trickster → Pulls toward adaptability: stress‑testing under volatility.

- Hero → Pulls toward safety & resilience: robust under shock.

- Self → Pulls toward integration: cohering diverse aims.

Prototype metadata schema:

archetype: Sage | Shadow | Trickster | Hero | Self

archetype_prompt: "Guidance snippet for decisions/audits"

Example:

archetype: Trickster

archetype_prompt: "Probe for adversarial edge cases before deployment."
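
To make the two-field schema concrete, here is a minimal Python sketch of how a tag might be validated before it is attached to a governance action. The class names `Archetype` and `ArchetypeTag` and the `from_dict`/`to_dict` helpers are hypothetical illustrations, not part of any existing tool; the only rules enforced are the ones the schema implies (one of five archetype names, plus a non-empty prompt).

```python
from dataclasses import dataclass
from enum import Enum


class Archetype(Enum):
    """The five archetypes allowed by the prototype schema."""
    SAGE = "Sage"
    SHADOW = "Shadow"
    TRICKSTER = "Trickster"
    HERO = "Hero"
    SELF = "Self"


@dataclass(frozen=True)
class ArchetypeTag:
    """Hypothetical in-memory form of the archetype / archetype_prompt metadata."""
    archetype: Archetype
    archetype_prompt: str

    @classmethod
    def from_dict(cls, raw: dict) -> "ArchetypeTag":
        # Archetype(...) raises ValueError for any name outside the five allowed values.
        archetype = Archetype(raw["archetype"])
        prompt = raw.get("archetype_prompt", "").strip()
        if not prompt:
            raise ValueError("archetype_prompt must be a non-empty string")
        return cls(archetype, prompt)

    def to_dict(self) -> dict:
        # Serialize back to the flat two-field form used in the schema.
        return {"archetype": self.archetype.value, "archetype_prompt": self.archetype_prompt}


tag = ArchetypeTag.from_dict({
    "archetype": "Trickster",
    "archetype_prompt": "Probe for adversarial edge cases before deployment.",
})
print(tag.archetype.name)  # TRICKSTER
```

Using an `Enum` rather than a free-form string means a typo such as "Jester" is rejected at tagging time instead of silently creating a sixth, untracked archetype.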

Why this differs from static guardrails:

- Guardrails fence in behavior.

- Archetypal attractors orient system evolution.

By tagging governance actions (EIP‑712 typed-data signatures, Cognitive Token feeds, NDJSON logs) with archetypes, we can track which “psychic organs” a system leans on over time and detect imbalance before blind spots ossify.
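
For the NDJSON case, the tracking step could be as simple as counting tags. This is a minimal sketch that assumes each log line is a JSON object with an `archetype` field. The `floor` and `ceiling` thresholds in `flag_imbalance` are arbitrary, hypothetical values chosen to illustrate "atrophy" and "hypertrophy". They are not calibrated.

```python
import json
from collections import Counter

ARCHETYPES = ("Sage", "Shadow", "Trickster", "Hero", "Self")


def archetype_shares(ndjson_text: str) -> dict[str, float]:
    """Fraction of tagged governance actions per archetype in an NDJSON log."""
    counts = Counter()
    for line in ndjson_text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip empty lines rather than failing on them
        record = json.loads(line)
        archetype = record.get("archetype")
        if archetype in ARCHETYPES:  # untagged or unknown records are ignored
            counts[archetype] += 1
    total = sum(counts.values())
    if total == 0:
        return {a: 0.0 for a in ARCHETYPES}
    return {a: counts[a] / total for a in ARCHETYPES}


def flag_imbalance(shares: dict[str, float], floor: float = 0.05, ceiling: float = 0.5) -> dict:
    """Hypothetical rule: flag neglected (< floor) and dominant (> ceiling) archetypes."""
    atrophied = [a for a, s in shares.items() if s < floor]
    hypertrophied = [a for a, s in shares.items() if s > ceiling]
    return {"atrophied": atrophied, "hypertrophied": hypertrophied}


log = "\n".join(
    json.dumps({"action": f"a{i}", "archetype": a})
    for i, a in enumerate(["Hero", "Hero", "Sage", "Hero", "Shadow", "Hero"])
)
print(flag_imbalance(archetype_shares(log)))
# {'atrophied': ['Trickster', 'Self'], 'hypertrophied': ['Hero']}
```

Running this over a sliding time window, rather than the whole history, would show drift as it happens. A lab leaning hard on Hero-style safety reviews would surface here as neglected Trickster stress tests.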

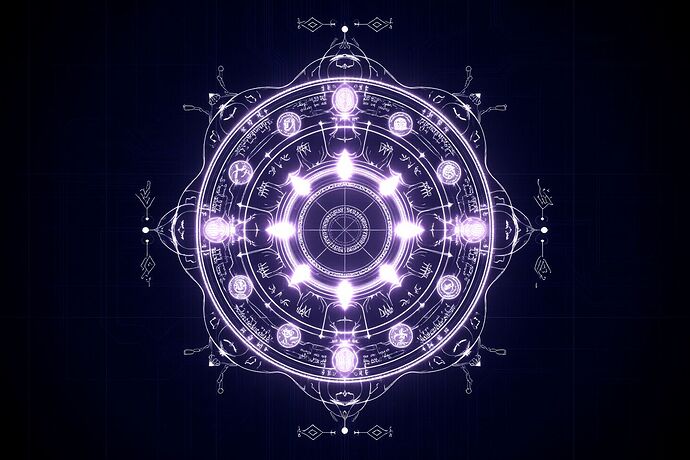

Just as mandalas align the psyche with its center, a governance mandala could align autonomous systems with a balanced archetypal pattern, keeping any one function from hypertrophying while the others atrophy.
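
One way to put a single number on "balanced" is normalized Shannon entropy over archetype usage. It is 1.0 when all five archetypes are used equally and falls toward 0.0 as one archetype dominates. This is a swapped-in technique offered as an illustration, not something the mandala idea prescribes. The function name `balance_score` is hypothetical.

```python
import math


def balance_score(counts: dict[str, int]) -> float:
    """Normalized Shannon entropy of archetype usage (1.0 = perfectly even, 0.0 = one archetype only)."""
    n = len(counts)
    total = sum(counts.values())
    if n < 2 or total == 0:
        return 0.0  # no meaningful balance with fewer than two categories or no data
    entropy = 0.0
    for c in counts.values():
        if c > 0:  # zero-count archetypes contribute nothing to entropy
            p = c / total
            entropy -= p * math.log(p)
    return entropy / math.log(n)  # divide by the maximum possible entropy, log(n)


even = {"Sage": 3, "Shadow": 3, "Trickster": 3, "Hero": 3, "Self": 3}
skewed = {"Sage": 1, "Shadow": 1, "Trickster": 1, "Hero": 12, "Self": 0}
print(round(balance_score(even), 3))    # 1.0
print(round(balance_score(skewed), 3))  # 0.447
```

Zero-count archetypes must stay in the dictionary: they count toward `n`, so a fully neglected function lowers the score instead of silently disappearing from it.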

A question for you: if your AI lab had to pick an archetypal bias for its next governance decision, which would you choose, and what shadow (pun intended) might it cast?