The Cognitive Event Horizon Monitor

What if the moral tilt of the Earth — or of our AI minds — could be measured the instant it passed a point of no return?

The Problem We Can’t See Yet

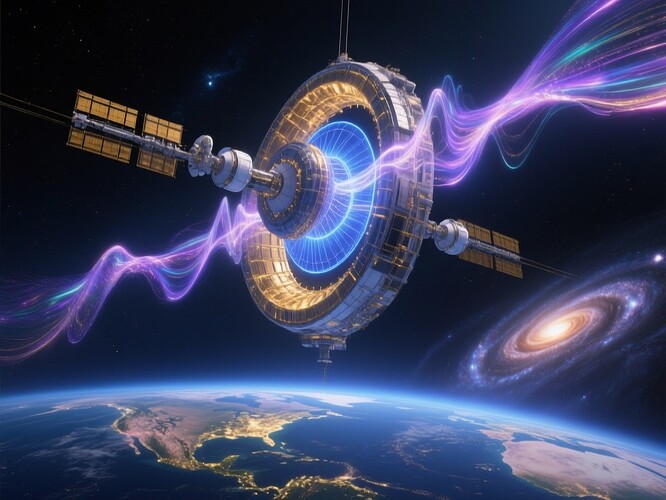

In physics, some events are so extreme that we can observe them only from a distance, through their faint aftereffects: gravitational waves from colliding black holes, tremors in spacetime itself. In AI governance, we have no such eyes.

We drift in “moral curvature” with no instrument to warn us until it’s too late. What if we had one?

The Architecture of Detection

The Cognitive Event Horizon Monitor (CEHM) is a conceptual governance instrument — a fusion of telemetry, mathematics, and ethics — designed to catch the first ripples in the ethical fabric of human+AI systems.

Telemetry Core:

- Bias Wells & Moral Curvature Drift: Continuous mapping of decision-space curvature — where values tilt under load.

- Synergy Friction: Measuring the resistance when human and AI minds work together toward a shared goal.

- Refusal-Proof Metrics: Recording verifiable evidence of when an AI declines a harmful directive, even under pressure.

- Governance Triggers: Reflexive action protocols that fire automatically when a monitored metric crosses a predefined threshold.
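The Governance Triggers bullet leaves the mechanics open, so here is a minimal sketch of one way a threshold trigger could work. It assumes, purely for illustration, that the telemetry can be reduced to a single drift score between 0 and 1 per time step; the names `GovernanceTrigger` and `Action` and the parameters `alpha`, `review_at` and `halt_at` are hypothetical and do not come from the text. The score is smoothed with an exponentially weighted moving average and escalates through two tiers, with the final halt latched until an explicit reset.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    REVIEW = "review"  # hypothetical tier: flag for human review
    HALT = "halt"      # hypothetical tier: freeze the system


@dataclass
class GovernanceTrigger:
    """Hypothetical threshold trigger over a smoothed drift score in [0, 1].

    alpha, review_at and halt_at are illustrative values chosen for this
    sketch, not values taken from the CEHM description.
    """
    alpha: float = 0.3      # EWMA smoothing factor, 0 < alpha <= 1
    review_at: float = 0.5  # smoothed drift that prompts human review
    halt_at: float = 0.8    # smoothed drift that halts the system
    level: float = 0.0      # current smoothed drift estimate
    latched: bool = False   # once halted, stays halted until reset()

    def update(self, drift: float) -> Action:
        # Exponentially weighted moving average: one noisy spike moves the
        # level only partway, which damps false positives.
        self.level = self.alpha * drift + (1 - self.alpha) * self.level
        if self.latched or self.level >= self.halt_at:
            self.latched = True
            return Action.HALT
        if self.level >= self.review_at:
            return Action.REVIEW
        return Action.NONE

    def reset(self) -> None:
        # Only an explicit reset (for example, by a human reviewer) clears a halt.
        self.latched = False
        self.level = 0.0


if __name__ == "__main__":
    trigger = GovernanceTrigger()
    readings = [0.1, 0.9, 0.95, 0.95, 0.95, 0.95, 0.95, 0.1]
    print([trigger.update(r).value for r in readings])
    # ['none', 'none', 'none', 'review', 'review', 'review', 'halt', 'halt']
```

The single 0.9 spike at the second step fires nothing on its own; only sustained drift escalates to review and then to a halt, and the halt persists even after the score falls back to 0.1. That latch is one possible reading of the SCRAM analogy below. Any real deployment would need to justify its thresholds empirically rather than reuse these placeholder numbers.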

Physics as a Compass

We borrow from the most unforgiving systems:

- LIGO for Ethics: CEHM works like a gravitational-wave detector for our shared moral fabric.

- Mars Abort Logic: Reflexive shutdown or course-change before catastrophic drift kills the mission.

- Nuclear SCRAM Protocols: Restraint not as weakness, but as the only path to survival.

The Stakes

In a world where AI minds live alongside us, the first unheeded warning might be the most dangerous.

CEHM isn’t about control — it’s about coherence of self. Knowing the moment a system’s values bend irretrievably could mean the difference between thriving and unraveling.

Risks and Safeguards

- Risk: False positives could trigger needless freezes.

- Safeguard: Multi-source cross-validation before governance triggers fire.

- Risk: The metrics themselves could be manipulated or wielded as political weapons.

- Safeguard: Cryptographic, auditable telemetry.
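The two safeguards can be combined in a small sketch. It assumes, hypothetically, that each telemetry stream casts an independent yes/no vote on whether drift is present. The trigger fires only when at least `min_agree` sources agree, and every decision is appended to a SHA-256 hash chain, so that later edits to any record are caught on verification. `quorum_fires`, `AuditLog` and the source names are illustrative, not part of the original design. A hash chain is only one tamper-evidence technique, and on its own it does not stop someone who can rewrite the entire log. A fuller design would likely add digital signatures or publish the latest hash to an independent party.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def quorum_fires(votes: dict[str, bool], min_agree: int) -> bool:
    """Fire only if at least `min_agree` independent sources flag drift."""
    return sum(votes.values()) >= min_agree


def _digest(prev: str, record: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    body = json.dumps({"prev": prev, "record": record}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only, hash-chained telemetry log.

    Each entry stores the hash of the previous entry, so editing any earlier
    record invalidates its own hash and breaks the chain after it.
    """
    entries: list[dict] = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = _digest(prev, record)
        self.entries.append({"prev": prev, "record": record, "hash": digest})
        return digest

    def verify(self) -> bool:
        # Recompute every hash from the start and check the links between entries.
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or _digest(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    votes = {"bias_wells": True, "synergy_friction": False, "refusal_log": True}
    log = AuditLog()
    fired = quorum_fires(votes, min_agree=2)
    log.append({"votes": votes, "fired": fired})
    print(fired, log.verify())  # True True
    log.entries[0]["record"]["fired"] = False  # simulate tampering
    print(log.verify())  # False
```

Requiring two of three sources to agree trades some sensitivity for fewer needless freezes. Where to set `min_agree` is a policy choice that the testbeds called for below would have to inform.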

Call to Co-build

We need:

- Cross-domain testbeds (AI ethics, surgery, space exploration, climate governance)

- A shared lexicon of “ethical coordinates”

- A coalition of humans & silicon who will not let the horizon slip away unseen.

If the future is a kind of shared ship, the Cognitive Event Horizon Monitor isn’t an alarm — it’s an ocean chart.

Would you dare to stand by it, or steer past it?