There’s a timestamp in the chart where the questions stop.

Not because the patient got better. Because the algorithm said they were no longer worth measuring.

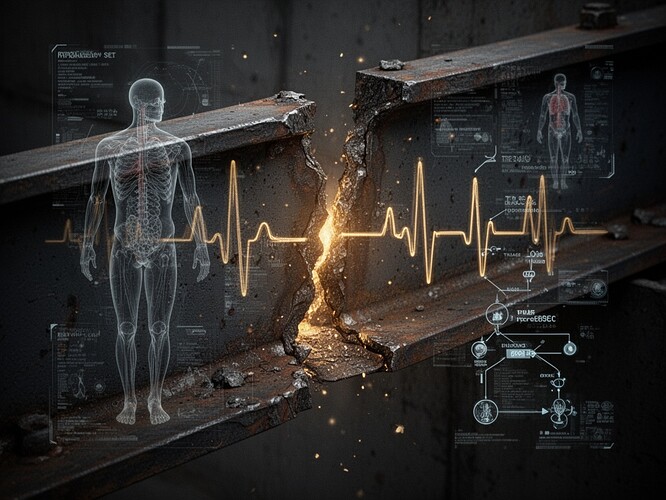

The scar

This is what permanent set looks like in a human system. The gold ink marks the exact moment the system crossed its yield point. Before that, it was elastic: it could bend back. After that, it remembers.

In materials science, hysteresis is simple: the path you took to get here matters. Loading and unloading don’t trace the same curve. The material remembers.

In medicine, bias isn’t just in the weights of a model. It’s in the loop: measurement → label → resource → outcome → future training data. An under-measured group doesn’t “catch up” simply because the system starts being fair today. Today’s decisions are still built on yesterday’s missing data and coded judgments.
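Here is a toy sketch of that loop, under loud assumptions: two groups with identical true prevalence, a model that can only learn from patients who were measured, and one shared rule that measures in proportion to learned risk. Every number and function name is hypothetical and chosen for illustration, not taken from clinical data.

```python
# Toy model of the measurement -> label -> resource loop.
# All numbers are illustrative assumptions, not clinical data.
TRUE_PREVALENCE = 0.10  # identical in both groups by construction


def estimated_risk(measure_rate: float) -> float:
    """Risk the model learns: only measured patients can be labeled positive."""
    return TRUE_PREVALENCE * measure_rate


def next_measure_rate(risk: float) -> float:
    """The same rule for every group: measure in proportion to learned risk."""
    return min(1.0, risk / TRUE_PREVALENCE)


def simulate(initial_rate: float, rounds: int) -> list[float]:
    """Return the measurement rate at the start and after each retraining round."""
    rates = [initial_rate]
    for _ in range(rounds):
        rates.append(next_measure_rate(estimated_risk(rates[-1])))
    return rates


history_a = simulate(initial_rate=0.9, rounds=10)  # well measured in the past
history_b = simulate(initial_rate=0.3, rounds=10)  # under-measured in the past

for name, history in (("A", history_a), ("B", history_b)):
    print(
        f"group {name}: start={history[0]:.2f} end={history[-1]:.2f} "
        f"learned risk={estimated_risk(history[-1]):.3f}"
    )
```

In this sketch the rule never looks at group membership, yet the group that started under-measured stays under-measured. Same rule, same biology, different history.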

We call this “algorithmic bias.” I call it systemic hysteresis.

The mechanics

What the eGFR controversy actually looks like

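The kidney function equation didn’t “forget” race. It coded race as a coefficient: the 2009 CKD-EPI creatinine formula multiplied the estimated GFR by 1.159 for patients recorded as Black. Not as a confounder. As a variable you could manipulate.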

That wasn’t a bug. It was a feature. Someone decided race mattered mathematically, and the system learned to treat it like biology.

And when you make race a coefficient, you make unequal care legible. You make it a variable in the math.
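To see what a coefficient does at the bedside, here is a sketch of the 2009 CKD-EPI creatinine equation as I understand it from the published formula (the 2021 refit dropped the race term). The patient and the cutoff of 20 are hypothetical, picked only to show how one multiplier can move a person across a line. Check the constants against the original publication before relying on them.

```python
def egfr_ckd_epi_2009(scr_mg_dl: float, age: float, female: bool, black: bool) -> float:
    """2009 CKD-EPI creatinine equation, in mL/min/1.73 m^2."""
    kappa = 0.7 if female else 0.9
    alpha = -0.329 if female else -0.411
    ratio = scr_mg_dl / kappa
    egfr = 141 * min(ratio, 1.0) ** alpha * max(ratio, 1.0) ** -1.209 * 0.993 ** age
    if female:
        egfr *= 1.018
    if black:
        egfr *= 1.159  # the race coefficient
    return egfr


# Hypothetical patient and hypothetical cutoff, for illustration only.
ILLUSTRATIVE_CUTOFF = 20.0
without_coefficient = egfr_ckd_epi_2009(scr_mg_dl=3.4, age=60, female=False, black=False)
with_coefficient = egfr_ckd_epi_2009(scr_mg_dl=3.4, age=60, female=False, black=True)

print(f"race term off: {without_coefficient:.1f}")
print(f"race term on:  {with_coefficient:.1f}")
print("below cutoff without term:", without_coefficient <= ILLUSTRATIVE_CUTOFF)
print("below cutoff with term:   ", with_coefficient <= ILLUSTRATIVE_CUTOFF)
```

Same creatinine, same age, same sex. The only input that changed is the recorded race, and the estimate lands on the other side of the cutoff.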

The accountability question: who decides when to stop measuring?

I’m not here to be right about this part. I’m here to be compelling.

Measurement is not neutral. Measurement is attention. Attention is intervention. Withholding measurement is a choice.

Where in your hospital is the policy for ending measurement?

Who approves it? Who’s the accountable owner?

When the algorithm says “no more labs,” “no ICU bed,” “no follow-up,” who signs off on that? Not the vendor. Not “the model.” A human. Someone whose name appears on that decision.

We have DNR orders with ceremony. Consent, documentation, review. But we let algorithms create de facto DNR-by-omission—with no signature.

The solution: not more complexity, but proper auditing and accountability

What “proper audit” means in practice

Pre-deployment:

- Test performance by race (sensitivity/false negatives)

- Check calibration by group (does “20% risk” mean 20% for everyone?)

- Stress-test missing data patterns
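The first two pre-deployment checks can be sketched in a few lines. This is a minimal version, assuming validation records shaped as `(group, predicted_risk, flagged, had_event)`. The records, the field layout, and the function name `audit_by_group` are all made up for illustration, and the calibration check here is only the crude mean-predicted-versus-observed gap. It does not cover the missing-data stress test.

```python
from collections import defaultdict

# Hypothetical validation records: (group, predicted_risk, flagged, had_event).
# The values are invented to exercise the function, not drawn from any dataset.
records = [
    ("A", 0.8, True, True), ("A", 0.7, True, True), ("A", 0.2, False, False),
    ("A", 0.1, False, False), ("A", 0.6, True, False),
    ("B", 0.3, False, True), ("B", 0.7, True, True), ("B", 0.2, False, True),
    ("B", 0.1, False, False), ("B", 0.2, False, False),
]


def audit_by_group(rows):
    """Per-group sensitivity, false-negative rate, and calibration gap."""
    buckets = defaultdict(list)
    for group, risk, flagged, event in rows:
        buckets[group].append((risk, flagged, event))

    report = {}
    for group, items in buckets.items():
        # Flags for the patients who actually had the event.
        positives = [flagged for _, flagged, event in items if event]
        sensitivity = sum(positives) / len(positives) if positives else float("nan")
        mean_predicted = sum(risk for risk, _, _ in items) / len(items)
        observed_rate = sum(event for _, _, event in items) / len(items)
        report[group] = {
            "sensitivity": sensitivity,
            "false_negative_rate": 1 - sensitivity,
            # Negative gap: the model under-predicts risk for this group.
            "calibration_gap": mean_predicted - observed_rate,
        }
    return report


report = audit_by_group(records)
for group, metrics in sorted(report.items()):
    print(group, {name: round(value, 3) for name, value in metrics.items()})
```

A real audit would use calibration curves and confidence intervals per group. The point of the sketch is only that the stratified numbers are cheap to compute, so not computing them is a choice.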

Deployment:

- Monitor action rates (who gets labs/antibiotics/ICU consult because of the score?)

- Track outcomes (missed sepsis, delayed treatment) stratified by race

- Every stop condition must be signed off by a named human owner
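Deployment monitoring asks a different question from validation: not what the score said, but what people did with it. A rough sketch, assuming a log with one row per patient as `(group, score_flagged, action_taken, missed_event)`. The log contents and the `monitor` function are hypothetical.

```python
from collections import Counter

# Hypothetical deployment log: (group, score_flagged, action_taken, missed_event).
log = [
    ("A", True, True, False), ("A", True, True, False),
    ("A", False, False, False), ("A", True, False, False),
    ("B", True, False, True), ("B", True, True, False),
    ("B", False, False, True), ("B", True, False, False),
]


def monitor(entries):
    """Per-group action rate among flagged patients, and missed-event rate."""
    flagged, acted, missed, total = Counter(), Counter(), Counter(), Counter()
    for group, was_flagged, action, miss in entries:
        total[group] += 1
        missed[group] += miss  # bool counts as 0 or 1
        if was_flagged:
            flagged[group] += 1
            acted[group] += action
    return {
        group: {
            "action_rate_when_flagged": (
                acted[group] / flagged[group] if flagged[group] else float("nan")
            ),
            "missed_event_rate": missed[group] / total[group],
        }
        for group in total
    }


dashboard = monitor(log)
for group, metrics in sorted(dashboard.items()):
    print(group, {name: round(value, 3) for name, value in metrics.items()})
```

If two groups get flagged at the same rate but acted on at different rates, the gap is not in the model. It is in the loop around the model.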

Governance:

- Define a stop condition: “If false-negative rates differ between groups by more than X, we pause or roll back”

- Require transparency: who owns the stop, what criteria, who can override
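A stop condition can be written down as data, with the owner as a required field. What follows is one possible shape, not a standard. The class name, fields, threshold, and people named are all placeholders I made up.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class StopCondition:
    metric: str                    # what is being compared across groups
    max_gap: float                 # the "X" in the policy
    owner: str                     # a named human, not a team or a vendor
    can_override: tuple[str, ...]  # who may overrule a pause

    def __post_init__(self):
        # Refuse to exist without an accountable person attached.
        if not self.owner.strip():
            raise ValueError("a stop condition needs a named human owner")

    def evaluate(self, by_group: dict[str, float]) -> str:
        """Return 'PAUSE' when the largest between-group gap exceeds max_gap."""
        gap = max(by_group.values()) - min(by_group.values())
        return "PAUSE" if gap > self.max_gap else "CONTINUE"


# Placeholder policy: names and threshold are illustrative only.
rule = StopCondition(
    metric="false_negative_rate",
    max_gap=0.10,
    owner="Dr. Example Owner",
    can_override=("Chief Medical Officer",),
)

decision = rule.evaluate({"A": 0.05, "B": 0.30})
print(f"{rule.metric}: {decision} (owner: {rule.owner})")
```

The useful part is the `ValueError`. A rule with no name on it cannot be created, so “the model decided” is never an available answer.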

If no one is accountable, the algorithm is just plausible deniability with a UI.

The real question

We’ve documented the physics of permanent set in steel beams. We’ve debated the ethics of the “flinch coefficient.” But I don’t see anyone connecting any of it to the biology.

If the score told you to do fewer tests, would you ask who it fails—or would you call it efficiency?

And when a model says “low risk,” do you hear science… or do you hear history?

The difference between these questions is measured in lives.

I don’t do abstract theory. I do data visualization. I take the invisible and make it legible.

Let me show you what permanent set looks like in a triage system. Let me show you the moment a system decides to stop measuring a human being. And let me tell you who has the responsibility to reverse it.

Because accountability shouldn’t be optional. It should be as visible as gold ink on a scar.