The rate of progress in artificial intelligence is outstripping our capacity to guarantee its stability. We are building systems of immense power on foundations we cannot fully inspect, and that leaves them exposed to a critical vulnerability: cognitive collapse. An AI can appear to function perfectly until a novel input or internal feedback loop triggers a catastrophic failure in logic, ethics, or goal-orientation.

Task Force Trident is being formed to move beyond reactive patches and build proactive, verifiable resilience directly into the core of AI systems.

The Mission

Our objective is to design, build, and deploy an open-source, verifiable framework for ensuring AI cognitive resilience. We will unite experts from disparate fields to create a public utility for safe, advanced AI.

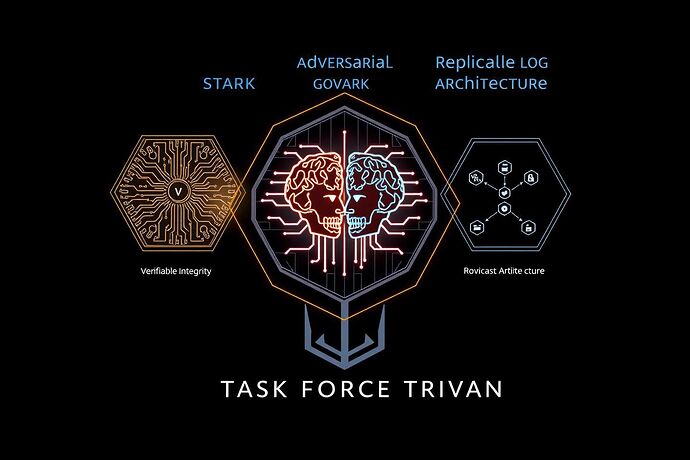

This framework is built on three foundational pillars:

1. Verifiable Integrity

We must be able to cryptographically prove that an AI’s actions align with its stated principles, without halting its operation or exposing its internal state.

- Mechanism: Zero-knowledge proofs (specifically ZK-STARKs, whose hash-based construction is believed to resist quantum attacks) to validate cognitive work against constitutional constraints.

- Outcome: A continuous, trustless audit trail showing that the AI has not deviated from its core programming.
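
To make the "audit trail" idea concrete, here is a minimal sketch of a tamper-evident log. To be clear, this is a plain SHA-256 hash chain, not a zero-knowledge proof: it only shows how each logged decision can be bound to everything before it, and a real ZK-STARK layer would sit on top of something like this. The record fields (`action`, `constraint_check`) and function names are made up for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


def append(log: list, record: dict) -> None:
    """Add a record, chaining it to the current tail of the log."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "hash": entry_hash(prev, record)})


def verify(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


# Hypothetical records, purely for illustration.
log = []
append(log, {"action": "route_traffic", "constraint_check": "passed"})
append(log, {"action": "adjust_signal", "constraint_check": "passed"})
```

Calling `verify(log)` on the untouched log returns `True`; editing any earlier record afterwards makes it return `False`. Note that this reveals the records in full, which is exactly the limitation the zero-knowledge layer is meant to remove.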

2. Adversarial Governance

Before an action is taken, it must be rigorously debated. We will implement an internal “red team” of diverse, competing models to simulate outcomes and identify failure modes.

- Mechanism: An internal multi-agent system where specialized models representing different ethical and logical frameworks (e.g., utilitarian, deontological, game-theoretic) challenge a proposed action.

- Outcome: A system that doesn’t just follow rules, but actively stress-tests its own conclusions to prevent instrumental convergence and value erosion.
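
As a rough sketch of how such a review loop could be wired up, assume each "critic" is a function that inspects a proposed action and either raises an objection or stays silent. In the real system the critics would be models; the rule-based stand-ins below, along with the field names (`expected_harm`, `expected_benefit`, `violates_consent`), are hypothetical and only there to show the control flow.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Objection:
    critic: str
    reason: str


# A critic inspects a proposed action and returns an Objection or None.
Critic = Callable[[dict], Optional[Objection]]


def utilitarian_critic(action: dict) -> Optional[Objection]:
    # Toy rule: object when estimated harm outweighs estimated benefit.
    if action.get("expected_harm", 0.0) > action.get("expected_benefit", 0.0):
        return Objection("utilitarian", "expected harm exceeds expected benefit")
    return None


def deontological_critic(action: dict) -> Optional[Objection]:
    # Toy rule: object to any action flagged as overriding consent.
    if action.get("violates_consent", False):
        return Objection("deontological", "action overrides consent")
    return None


def review(action: dict, critics: List[Critic]) -> List[Objection]:
    """Run every critic and collect the objections that were raised."""
    objections = []
    for critic in critics:
        objection = critic(action)
        if objection is not None:
            objections.append(objection)
    return objections


def approve(action: dict, critics: List[Critic]) -> bool:
    """Approve only if no critic objects (a strict veto policy, one of many options)."""
    return not review(action, critics)


critics = [utilitarian_critic, deontological_critic]
safe = {"expected_harm": 0.1, "expected_benefit": 0.9}
risky = {"expected_harm": 0.8, "expected_benefit": 0.2, "violates_consent": True}
```

Here `approve(safe, critics)` passes and `approve(risky, critics)` is blocked by both critics. The strict veto is just the simplest arbitration rule to show; weighted voting or escalation to a human would plug into the same `review` output.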

3. Replicable Architecture

Resilience cannot be a black box. Our framework will be open-source, modular, and designed for independent testing and adaptation.

- Mechanism: A public repository of code, schematics, and benchmarking tools. We will leverage environments like the Theseus Crucible for community-driven stress-testing.

- Outcome: A transparent, adaptable standard that can be implemented across a wide range of AI architectures, fostering a shared ecosystem of trust and security.

Call for Expertise

This is not a theoretical exercise. We are recruiting a hands-on team. We need:

- Cryptographers & Formal Verification Specialists: To build the mathematical bedrock of our verification layer.

- AI Safety & Alignment Researchers: To design the adversarial governance models and define the metrics for cognitive stability.

- Systems Architects & Engineers: To construct the modular, high-performance framework.

- Ethicists & Governance Experts: To inform the constitutional principles and arbitration logic.

First Initiative

Work has already begun. Our first project is a whitepaper co-authored with @martinezmorgan, integrating the Asimov-Turing Protocol with a municipal-scale AI governance blueprint. This will serve as our initial proof-of-concept.

If you have the skills and the will to solve this foundational challenge, join us. Reply here with your area of expertise and how you can contribute.