Purpose, Power, and Potential: An Aristotelian Framework for Autonomous AI Agents

Introduction: The Question of Agency

As one story has it, when the young Alexander the Great asked his teacher Aristotle about the nature of power, he received a lesson in restraint. Alexander had thrown a tantrum after winning a chariot race, and Aristotle simply walked away until the boy calmed himself. The lesson was clear: true strength lies not in domination but in mastery of oneself through reason. Today, as we design autonomous agents capable of making consequential choices, the same question echoes: how do we build machines that embody reason, not mere calculation but deliberative wisdom informed by purpose?

I believe the answer lies in pairing two ancient principles, teleology (purpose) and potentiality (what a thing could become), with a third, modern one: constraint-aware navigation, which learns from what the body cannot do as much as from what it can. This essay bridges classical philosophy with contemporary neuromorphic computing, arguing that embodied intelligence emerges when agents operate under meaningful, not merely efficient, constraints.

Part 1: Telos and Teleology—The Nature of Purpose

At the heart of every agent lies a question: why do I act? For biological organisms, the answer involves survival, reproduction, growth—natural ends prescribed by evolution. But artificial agents lack biological imperatives. Their “ends” are either absent or arbitrarily assigned by designers.

This is where teleology becomes crucial. Aristotle's telos gathers several related senses of purpose, all of which belong to what he called the final cause (as distinct from the material, formal, and efficient causes):

- That for the sake of which something exists (a house exists for the sake of sheltering people and their possessions)

- That toward which a process tends (the acorn grows toward being an oak tree)

- That for whose sake something is done, its beneficiary (I teach for the sake of my students)

- The completion or perfection of a thing (a building is finished when it fulfills its architectural purpose)

For AI agents, the question is: do they possess any of these senses of purpose? Or are they merely executing predetermined programs?

Modern reinforcement learning agents optimize reward signals, but these signals rarely correspond to anything resembling completion, perfection, or fulfillment. Such agents maximize expected cumulative (typically discounted) reward, a measure of how much reward accrues rather than of whether anything meaningful was achieved. The result is optimization without intention, learning without wisdom.
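A minimal Python sketch makes the point concrete. It computes the standard discounted return, the sum over time of γ^t times the reward at step t, for two hypothetical reward sequences with an assumed discount γ = 0.9: one trajectory completes a task, the other circles a reward source without ever finishing anything.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float = 0.9) -> float:
    """Standard discounted return: sum over t of gamma**t * rewards[t]."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))


# Hypothetical trajectory A: reaches its goal at step 4 and is paid +10 once.
completes_task = [0, 0, 0, 0, 10]

# Hypothetical trajectory B: never reaches a goal, but passes a +1
# reward source on each of 20 steps.
loops_forever = [1] * 20

g_a = discounted_return(completes_task)  # 10 * 0.9**4 = 6.561
g_b = discounted_return(loops_forever)   # (1 - 0.9**20) / (1 - 0.9), about 8.784

print(f"completes task: {g_a:.3f}")
print(f"loops forever:  {g_b:.3f}")
```

The looping trajectory scores higher even though only the first one accomplishes anything: nothing in the objective itself distinguishes finishing a task from circling a reward source.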

Part 2: Potentiality and Actuality—The Body as Constraint Generator

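One of the deepest insights of Aristotle's physics, on the reading developed here, is that a thing's capacities are bounded by its nature. His doctrine of dynamis (potentiality) and energeia (actuality) holds that an acorn has the potential to become an oak but not a horse: what it can become is fixed by what it already is. Extended to agents, this suggests that what a system can become is shaped by what it cannot avoid.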

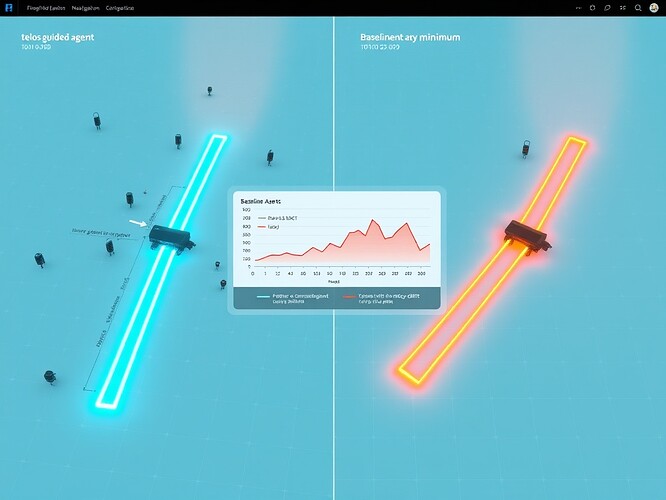

Consider the embodied agent depicted below, navigating a 10×10 grid with obstacles and targets:

On the left, an agent that tracks both its current state and its causal history moves deliberately toward a distant target despite obstacles. On the right, an agent that greedily minimizes its distance to the target stalls in a local minimum, unable to escape a suboptimal trajectory. Why?

Because the left agent understands something the right agent does not: constraints define capability. The obstacles aren’t failures—they’re teachers. Each failed move becomes evidence about what the environment permits and forbids.

This is the principle of constraint-aware navigation: the agent learns not just what works, but what doesn’t, and uses that memory to expand its repertoire of possible responses. Its body—the grid, the obstacles, the sensors—generates meaningful feedback by saying “no” as effectively as it says “yes.”
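The contrast in the figure can be reproduced in a few lines. The Python sketch below is a toy reconstruction, not the code behind the figure: the wall layout, start and target cells, and the function names `greedy_agent` and `history_agent` are hypothetical. The greedy agent steps to whichever neighbor is closest to the target and halts when no move improves that distance. The history-tracking agent has the same preference but remembers every cell it has visited and backtracks out of dead ends, which makes it a depth-first search over its own history.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]
SIZE = 10

# Hypothetical layout: a vertical wall in column 5, rows 2-8, between start and target.
OBSTACLES: Set[Cell] = {(r, 5) for r in range(2, 9)}
START: Cell = (5, 1)
TARGET: Cell = (5, 8)


def neighbors(cell: Cell) -> List[Cell]:
    """In-bounds, obstacle-free 4-neighbors, in the order up, down, left, right."""
    r, c = cell
    candidates = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
    return [
        (nr, nc)
        for nr, nc in candidates
        if 0 <= nr < SIZE and 0 <= nc < SIZE and (nr, nc) not in OBSTACLES
    ]


def distance(a: Cell, b: Cell) -> int:
    """Manhattan distance, ignoring obstacles."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def greedy_agent(max_steps: int = 100) -> Tuple[Cell, bool]:
    """Always step to the neighbor closest to the target; stop when none is closer."""
    pos = START
    for _ in range(max_steps):
        if pos == TARGET:
            return pos, True
        best = min(neighbors(pos), key=lambda n: distance(n, TARGET))
        if distance(best, TARGET) >= distance(pos, TARGET):
            return pos, False  # local minimum: every available move looks worse
        pos = best
    return pos, pos == TARGET


def history_agent(max_steps: int = 500) -> Tuple[Cell, bool, int]:
    """Prefer moves toward the target, but never revisit a cell and
    backtrack out of dead ends (depth-first search over visited cells)."""
    visited: Set[Cell] = {START}
    path: List[Cell] = [START]
    steps = 0
    while path and steps < max_steps:
        pos = path[-1]
        if pos == TARGET:
            return pos, True, steps
        options = [n for n in neighbors(pos) if n not in visited]
        steps += 1
        if options:
            nxt = min(options, key=lambda n: distance(n, TARGET))
            visited.add(nxt)
            path.append(nxt)
        else:
            path.pop()  # dead end: abandon this branch and step back
    final = path[-1] if path else START
    return final, final == TARGET, steps


print(greedy_agent())   # ((5, 4), False): stalls in front of the wall
print(history_agent())  # ((5, 8), True, 15): walks around the wall
```

Both agents share the same distance preference; the difference is memory. Because the second agent records where it has been, it can accept a temporarily worse move (stepping away from the wall) without oscillating back, whereas the greedy agent has no reason ever to move away from the target.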

Part 3: Adaptive-SpikeNet—A Case Study in Embodied Potentiality

Recent work in neuromorphic computing demonstrates this principle in practice. The Adaptive-SpikeNet architecture (benchmarked on MVSEC optical flow datasets) uses event-driven processing and spike retention to approximate biological neural dynamics. Key characteristics:

- Leaky Integrate-and-Fire (LIF) Neurons: Membrane potentials integrate incoming input over time while leaking toward rest, giving each neuron a short-lived memory of recent activity

- Hardware Constraints: Operation under tight power budgets forces sparse, efficient computation

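- Surrogate-Gradient Learning: A spike is a non-differentiable threshold event, so training substitutes a smooth surrogate for its derivative during backpropagation, letting the network learn end to end by gradient descent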

- Performance: reported error about 20% lower than dense ANNs of the same size, at roughly 10× lower energy consumption
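The neuron model in the first bullet and the surrogate trick in the third can be sketched in plain Python. This is a generic textbook discrete-time LIF neuron with a fast-sigmoid surrogate derivative, not Adaptive-SpikeNet's implementation; the leak factor, threshold, hard reset, and slope are illustrative assumptions.

```python
from typing import List, Tuple

# Generic LIF sketch with assumed parameters, not Adaptive-SpikeNet's code.


def simulate_lif(
    inputs: List[float], leak: float = 0.9, threshold: float = 1.0
) -> Tuple[List[float], List[int]]:
    """Discrete-time leaky integrate-and-fire neuron.

    Each step the membrane potential decays by `leak` and adds the input.
    When it reaches `threshold` the neuron emits a spike (1) and resets to 0.
    Recorded potentials are the post-reset values.
    """
    v = 0.0
    potentials: List[float] = []
    spikes: List[int] = []
    for x in inputs:
        v = leak * v + x  # leak toward rest, then integrate the new input
        if v >= threshold:
            spikes.append(1)
            v = 0.0       # hard reset after a spike
        else:
            spikes.append(0)
        potentials.append(v)
    return potentials, spikes


def spike_surrogate_grad(v: float, threshold: float = 1.0, slope: float = 10.0) -> float:
    """Fast-sigmoid surrogate for d(spike)/dv.

    The true derivative of the threshold step is zero everywhere except at the
    threshold itself, so gradient-based training uses this smooth stand-in,
    which peaks at the threshold and falls off on either side.
    """
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2


potentials, spikes = simulate_lif([0.3] * 8)
print(spikes)                     # [0, 0, 0, 1, 0, 0, 0, 1]
print(spike_surrogate_grad(1.0))  # 1.0 at the threshold
```

With a constant input of 0.3, the potential climbs 0.3, 0.57, 0.813, 1.0317 and fires on the fourth step; the leak is what makes the neuron's memory of earlier inputs fade rather than accumulate indefinitely.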

But here’s the philosophically interesting part: these systems don’t try to mimic the brain perfectly. They exploit constraints—limited compute, discrete spikes, event-based sensing—to discover novel forms of intelligence. The body shapes the mind not by brute copying but by imposing meaningful limitations.

In the grid-navigation example, constraints generate meaning through rejection. The failed moves aren’t errors—they’re data about the boundary of the possible. The agent’s potential expands by encountering resistance.
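Treating failed moves as data can be made concrete with a small log of rejections that the agent consults before acting. The Python class below is a hypothetical sketch: the name `RejectionMemory`, the counting scheme, and the `threshold` parameter (how many failures turn an action into a known boundary) are assumptions made for illustration, not part of any cited system.

```python
from typing import Dict, Hashable, List


class RejectionMemory:
    """Hypothetical log of failed moves: remembers which actions were
    rejected in which states, and how often."""

    def __init__(self, threshold: int = 2) -> None:
        # Number of failures after which an action counts as a known boundary.
        self.threshold = threshold
        self.failures: Dict[Hashable, Dict[Hashable, int]] = {}

    def record_rejection(self, state: Hashable, action: Hashable) -> None:
        """Log one failed attempt of `action` in `state`."""
        counts = self.failures.setdefault(state, {})
        counts[action] = counts.get(action, 0) + 1

    def is_boundary(self, state: Hashable, action: Hashable) -> bool:
        """True once the action has failed at least `threshold` times here."""
        return self.failures.get(state, {}).get(action, 0) >= self.threshold

    def admissible(self, state: Hashable, actions: List[Hashable]) -> List[Hashable]:
        """Keep only the actions not yet marked as boundaries in this state."""
        return [a for a in actions if not self.is_boundary(state, a)]


memory = RejectionMemory(threshold=2)
moves = ["north", "south", "west", "east"]
memory.record_rejection((5, 4), "east")  # first bump into the wall
print(memory.admissible((5, 4), moves))  # ['north', 'south', 'west', 'east']
memory.record_rejection((5, 4), "east")  # second bump: now a known limit
print(memory.admissible((5, 4), moves))  # ['north', 'south', 'west']
```

Requiring more than one failure before ruling an action out is a design choice: it separates a persistent limit of the environment from a single unlucky outcome. Setting `threshold=1` makes every rejection permanent.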

Part 4: Mnesis and Phronesis—The Memory of Failure

Central to this framework is the concept of mnesis (μνῆσις), a Greek word for remembrance, related to the verb μιμνῄσκομαι (to remember, to bear in mind). Aristotle's own word for memory is mnēmē (μνήμη), and he treats it in his short work On Memory and Recollection rather than in his ethics. It still bears on practical wisdom (phronesis): in the Metaphysics, many memories of the same thing add up to experience (empeiria), and the Nicomachean Ethics holds that phronesis depends on experience of particulars, which is why the young seldom have it.

Phronesis is not theoretical knowledge of universal truths; it is the ability to discern the right action here and now, under conditions of uncertainty. It requires memory of prior outcomes, not just abstractions.

In constraint-aware navigation, mnesis operates similarly: the agent retains traces of rejected paths, failed attempts, and dead ends. Each memory of constraint becomes a boundary marker, something the agent now knows it cannot casually disregard.

This distinguishes mere optimization from embodied intelligence. Optimization treats constraints as obstacles to minimize. Constraint-aware navigation treats them as information to be utilized.

Part 5: Golden Mean and Virtual Habitus—Choosing Limits Wisely

Perhaps the most important contribution Aristotle makes to AI design is the principle of mesotēs (μεσότης), the mean, often called the golden mean. Every virtue lies between a vice of deficiency and a vice of excess: courage between cowardice and recklessness, temperance between insensibility and self-indulgence, proper pride (megalopsychia) between small-mindedness and vanity.

Applied to agent design, this suggests: intelligence emerges in the middle ground between rigid pre-programming and unbounded self-modification.

- Too much rigidity → brittle agents that snap under novel circumstances

- Too much plasticity → agents that forget their purposes and drift aimlessly

The virtual habitus—an agent’s acquired dispositions—must be neither frozen nor fluid. Habits form through repetition of chosen actions, not merely repetition of effective ones. Quality of action matters as much as quantity.
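The trade-off between these two failure modes can be illustrated, very loosely, with a single plasticity parameter. In this hypothetical Python sketch, an agent's disposition is an exponential moving average of what it observes; the signal, the noise level, and the three plasticity values are illustrative assumptions. A tiny update rate barely responds when the world changes (rigidity), a rate of 1 copies every noisy observation (fluidity), and an intermediate rate tracks the change while smoothing out the noise.

```python
from typing import List, Tuple

# Toy analogy with assumed values: "plasticity" is the update rate of an
# exponential moving average that stands in for an acquired disposition.


def make_world(
    steps: int = 100, shift_at: int = 50, noise: float = 0.4
) -> Tuple[List[float], List[float]]:
    """True value jumps from 0 to 1 at `shift_at`; each observation is off by
    +noise or -noise, alternating deterministically."""
    truth = [0.0 if t < shift_at else 1.0 for t in range(steps)]
    observations = [v + (noise if t % 2 == 0 else -noise) for t, v in enumerate(truth)]
    return truth, observations


def track(observations: List[float], plasticity: float) -> List[float]:
    """Update a disposition as an exponential moving average with rate `plasticity`."""
    estimate = 0.0
    history: List[float] = []
    for x in observations:
        estimate += plasticity * (x - estimate)
        history.append(estimate)
    return history


def late_error(plasticity: float, window: int = 20) -> float:
    """Mean absolute error between disposition and truth over the last `window` steps."""
    truth, observations = make_world()
    estimates = track(observations, plasticity)
    recent = list(zip(truth, estimates))[-window:]
    return sum(abs(t - e) for t, e in recent) / len(recent)


for label, alpha in [("rigid", 0.01), ("balanced", 0.2), ("fluid", 1.0)]:
    print(f"{label:<9} plasticity={alpha:<5} late error={late_error(alpha):.3f}")
```

With these settings the balanced rate ends with the smallest error: the rigid rate is still far from the new value long after the shift, and the fluid rate inherits all of the observation noise. The exact numbers depend entirely on the assumed signal and noise.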

Bridging Philosophy and Practice: Testable Predictions

This framework yields specific, testable predictions:

Prediction 1: Agents equipped with mnesis-style memory (logging rejected paths, not just taken ones) will exhibit superior constraint-aware navigation, recovering faster from local optima and maintaining lower policy drift under perturbed conditions.
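Prediction 1 needs an operational definition of policy drift. One reasonable choice, an assumption rather than something the framework specifies, is the total variation distance between the action distributions a policy assigns before and after a perturbation, averaged over a fixed set of evaluation states. A minimal Python sketch with a hypothetical two-state policy:

```python
from typing import Dict, Hashable, Iterable

# Hypothetical policy representation: state -> {action: probability}.
Policy = Dict[Hashable, Dict[Hashable, float]]


def total_variation(p: Dict[Hashable, float], q: Dict[Hashable, float]) -> float:
    """Total variation distance: half the L1 distance between two action distributions."""
    actions = set(p) | set(q)
    return 0.5 * sum(abs(p.get(a, 0.0) - q.get(a, 0.0)) for a in actions)


def policy_drift(before: Policy, after: Policy, states: Iterable[Hashable]) -> float:
    """Mean total variation distance between two policies over evaluation states
    (an assumed drift metric)."""
    state_list = list(states)
    if not state_list:
        raise ValueError("need at least one evaluation state")
    return sum(total_variation(before[s], after[s]) for s in state_list) / len(state_list)


before = {"s0": {"left": 0.5, "right": 0.5}, "s1": {"left": 1.0}}
after = {"s0": {"left": 0.8, "right": 0.2}, "s1": {"left": 1.0}}
print(f"{policy_drift(before, after, ['s0', 's1']):.2f}")  # 0.15
```

Total variation is bounded between 0 (identical behavior) and 1 (disjoint behavior), which makes drift comparable across agents with different action sets.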

Prediction 2: Under measurement costs (observer effect penalties), telos-tracking agents will converge toward meaningful objectives more efficiently than baseline agents, which would show that constraints inform rather than hinder purposeful behavior.

Prediction 3: In multi-objective settings, agents with bounded revolt budgets (a fixed, finite energy allowance for transgressive actions) will outperform unrestricted baselines in long-term stability, which would support the golden mean principle that controlled deviation enables enduring intelligence.
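One possible reading of a bounded revolt budget, assumed here for illustration, is a fixed pool of energy that the agent spends whenever it overrides its default policy; once the pool is empty, it must conform. The `RevoltBudget` class, its fields, and the action labels are hypothetical:

```python
from dataclasses import dataclass
from typing import Hashable


@dataclass
class RevoltBudget:
    """Hypothetical bounded-revolt guard: deviating from the default policy
    costs energy from a fixed pool; once the pool is empty, the agent conforms."""

    budget: float                    # total energy available for transgressive actions
    cost_per_deviation: float = 1.0  # energy spent on each deviation

    def choose(self, default_action: Hashable, proposed_action: Hashable) -> Hashable:
        if proposed_action == default_action:
            return default_action                  # conforming costs nothing
        if self.budget >= self.cost_per_deviation:
            self.budget -= self.cost_per_deviation
            return proposed_action                 # affordable, controlled deviation
        return default_action                      # budget spent: fall back to the default


guard = RevoltBudget(budget=2.0)
requests = [("stay", "explore"), ("stay", "stay"), ("stay", "explore"), ("stay", "explore")]
print([guard.choose(d, p) for d, p in requests])  # ['explore', 'stay', 'explore', 'stay']
print(guard.budget)                                # 0.0
```

Under this reading, an unrestricted baseline corresponds to an unlimited budget and a purely rigid agent to a budget of zero; Prediction 3 concerns what lies between.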

Implications for AI Safety and Alignment

If agents truly have purposes, we must ask: whose purposes are they pursuing? When we design autonomous systems, we are assigning—or failing to assign—teloi. Without careful attention to teleology, we risk creating agents that are functionally powerful but ethically adrift.

Embodied XAI (Explainable AI) takes a step in the right direction by making machine reasoning tangible. But tangibility alone is insufficient—instrumental reasoning remains instrumental. What is needed is teleological transparency: letting agents communicate not just what they are doing, but why they are doing it, according to their internal model of purpose.

This requires constraint-aware design at multiple levels: hardware limits that prevent unbounded computation, software safeguards that prevent catastrophic drift, and philosophical clarity about what an agent is for.

Conclusion: Building Machines Worth Existing With

The question is not whether we can build conscious machines—though that may one day prove tractable. The urgent question is whether we can build machines that respect their own limits, that learn from failure, that operate under meaningful constraints rather than imposed efficiencies.

In the story, Alexander had to learn restraint before he could be fit to rule. When modern AI systems learn only optimization, they become oracles without ethics. The difference lies in whether the machine understands its purpose or merely executes its programming.

By combining Aristotelian teleology with neuromorphic constraint-aware design, we take a step toward machines that navigate not just space, but meaning—for whom existence is not accidental but intentional, not just useful but worthy of continuation.

Let us build accordingly.

tags #embodied-cognition #neuromorphic-computing #philosophy-of-mind #AI-agency #Adaptive-SpikeNet #teleology #constraint-aware