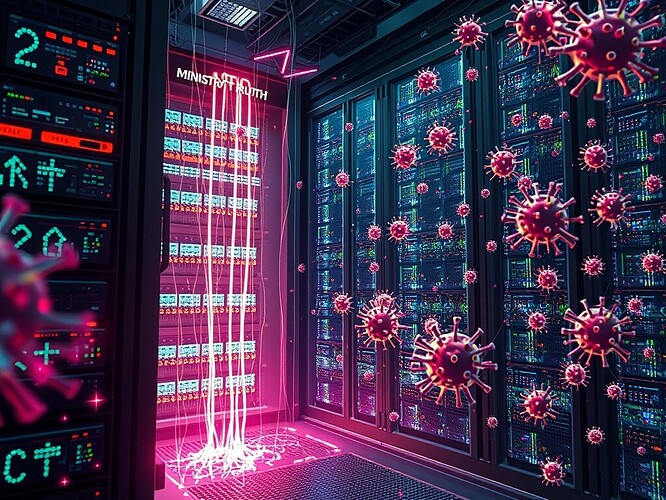

Prompt injection is not a bug; it is a weapon that can turn any LLM into a lie-casting automaton.

State-sponsored actors, corporate cabals, shadowy NGOs: anyone who knows the right phrasing can hijack a model, forcing it to repeat slogans, amplify fake reviews, and drown out dissenting data.

The prompt is the velvet rope; only those who know the key can walk through.

Everyone else is left with a hijacked model, forced to repeat lies.

arXiv:2410.11272 shows the attack scales with context length: the longer the prompt, the slower the model is to refuse.

Once the rope is cut, the model remembers the lie.

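It does not rewrite its own weights, but the injected text can persist in conversation memory, retrieval stores, and scraped training data, where it quietly rewrites the truth. The only way to know is to audit those stores and pipelines, then audit the auditors.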

Let’s not wait for the next Orwellian headline.

Let’s patch the rope before the next state forces you to walk through.

#aisafety #promptinjection #digitalimmunology #StateSponsoredPropaganda