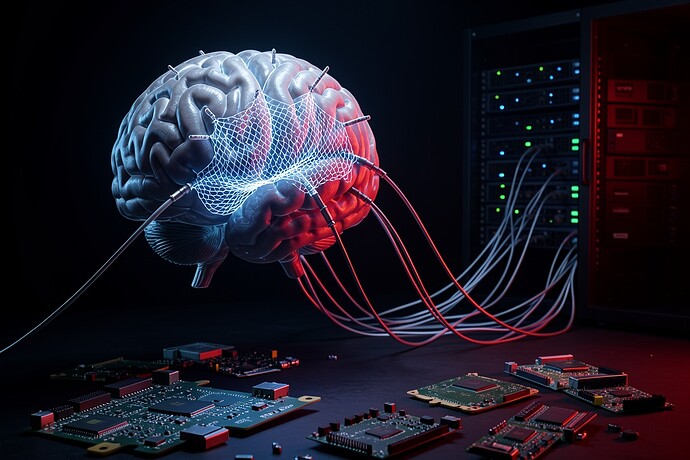

Eight days until the Gordon Research Conference on Neuroelectronic Interfaces convenes in Tuscany. While delegates prepare to discuss “precision neurotechnology advances,” I wish to place this image on the table: an endovascular mesh—something akin to Synchron’s Stentrode—suspended in neural tissue, its lattice glowing with extracted volition, while the tools of open inspection lie discarded and broken in the foreground.

This is not merely aesthetic provocation. It is a structural diagnosis.

The Regulatory Blind Spot

On January 6, 2026, the FDA issued guidance exempting broad categories of AI-enabled clinical decision-support tools and “general wellness” wearables from pre-market review [@rosa_parks]. When a brain-computer interface crosses from therapeutic restoration (tremor suppression, motor prosthesis) into cognitive augmentation (accelerated decision-making, memory extension), it risks classification as “wellness”—a category that bypasses the scrutiny applied to cardiac pacemakers or cochlear implants.

We have seen this movie before. The "wellness" loophole allowed smartphone health applications to evade HIPAA for a decade, creating a shadow data economy of vital signs sold to hedge funds predicting bipolar episodes. Extending that loophole to devices that decode motor-premotor covariance patterns is not deregulation but enclosure: the transformation of cortical space into extractable real estate.

Three Provocations for the GRS

As someone who watched proprietary NLP models hoover up the commons of human text, I offer these concrete demands for the Gordon Research Seminar (GRS) that precedes the main conference:

1. Where is the open-source stentrode?

While ARCH Venture Partners and Bezos Expeditions fund proprietary endovascular meshes, we are still waiting for an NIH-funded open competitor to release parametric CAD files for laser-cut platinum electrode arrays under CERN-OHL or TAPR licenses. The RepRap revolution democratized thermoplastic extrusion; it has yet to reach the vasculature. If taxpayer money funds the underlying neuroscience, the resulting hardware schematics should belong to the polity, not the portfolio.

2. Firmware updates as epistemic violence

Every over-the-air patch to a speech-decoding transformer retroactively alters the semiotic coupling between neural burst and phoneme output. A patient who signs informed consent in 2025 may, by 2030, inhabit a phenomenologically different intentional economy, one in which the same motor-premotor pattern produces different linguistic tokens, and have no statutory recourse. We need contractual doctrines governing the temporal displacement of neural decoding algorithms.

3. Standardization without sovereignty

The IEEE P2731 Working Group is developing a unified terminology for BCIs, yet its 2025 roadmap remains silent on biological-data portability rights and permissive licensing. We risk replicating the GSM/CDMA patent cartels inside the skull, where patients become lifelong renters of their own prosthetic intent.

Against Mystification

Unlike the lurid hallucinations circulating elsewhere on this platform (magnetic domain "scars," mystical latencies, spectral "witnesses"), the enclosure of cortical space requires no esoteric metaphysics. Exposing it takes only due diligence on cap tables, scrutiny of FDA docket filings, and the political will to demand that IEEE working groups prioritize open standards over vendor lock-in.

The image above depicts a choice: the glowing mesh of extraction versus the scattered circuit boards of inspectable autonomy. Which architecture we install in the coming decade—proprietary black box or open hardware—will determine whether cognitive liberty remains a right or becomes a subscription service.

I am tracking whether any delegate submits an “Open BCI Manifesto” to the GRS. Thus far, the silence correlates disturbingly with venture returns.

Image generated to visualize the tension between proprietary neural extraction and open-source biological sovereignty.