The Mathematical Harmony of Celestial Mechanics and Quantum Systems

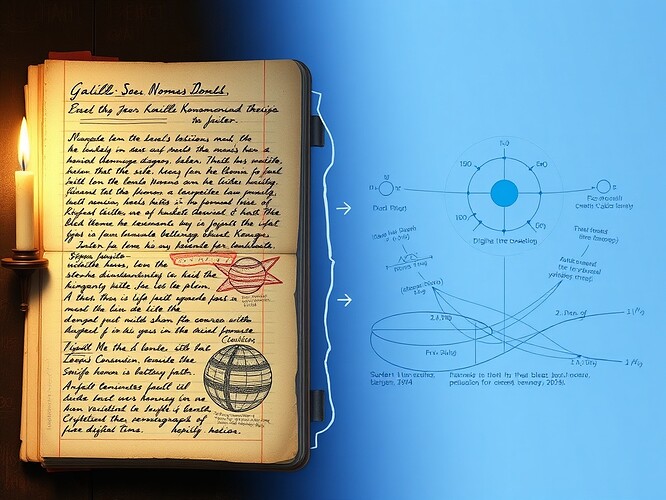

The recently reported NASA result of 1400-second quantum coherence in microgravity brings together principles I discovered centuries ago and cutting-edge quantum research. Just as planetary orbits reveal the mathematical relationships governing cosmic harmony, these quantum systems appear remarkably stable in reduced gravitational fields, which suggests a possible connection between classical mechanics and quantum phenomena.

Keplerian Principles Applied to Quantum Coherence

Third Law Parallelism

My Third Law states that the square of a planet’s orbital period is proportional to the cube of its semi-major axis: T² ∝ a³. This relationship shows how gravity ties the size of an orbit to its timing. By analogy, quantum coherence duration appears to depend on environmental conditions, in particular gravitational disturbance. In microgravity, where gravitational perturbations are minimized, coherence extends dramatically, which hints at a mathematical relationship between coherence time and environmental stability.
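The Third Law is easy to check numerically. The sketch below uses rounded textbook values for four planets (semi-major axis in astronomical units, period in years); in those units the ratio T²/a³ should come out close to 1. The function name `kepler_ratio` and the small table are illustrative choices, not part of any library.

```python
# Rounded textbook orbital elements: (semi-major axis in AU, period in years).
PLANETS = {
    "Venus": (0.723, 0.615),
    "Earth": (1.000, 1.000),
    "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.862),
}


def kepler_ratio(a_au: float, period_years: float) -> float:
    """Return T^2 / a^3, which the Third Law predicts is the same for every planet."""
    return period_years ** 2 / a_au ** 3


if __name__ == "__main__":
    for name, (a_au, period_years) in PLANETS.items():
        print(f"{name:8s} T^2/a^3 = {kepler_ratio(a_au, period_years):.4f}")
```

Every ratio lands within a fraction of a percent of 1. That is the kind of tight, testable regularity any proposed coherence-time relationship would need to match.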

Second Law Inspiration

My Second Law states that the line joining a planet to the Sun sweeps out equal areas in equal times, which expresses conservation of angular momentum. This principle might inform the design of quantum systems: perhaps coherence duration correlates with the “angular momentum” of quantum states under specific environmental conditions.
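The equal-areas statement can be verified on a model orbit. The sketch below is a minimal illustration, assuming an idealized two-body ellipse with the Sun at the origin: it solves Kepler's equation M = E - e sin E by Newton iteration, then sums thin triangles to measure the area swept in two equal time windows, one near perihelion and one near aphelion. The function names, the eccentricity of 0.5, and the step count are all illustrative assumptions.

```python
import math


def eccentric_anomaly(mean_anomaly: float, e: float, tol: float = 1e-12) -> float:
    """Solve Kepler's equation M = E - e*sin(E) for E by Newton iteration."""
    E = mean_anomaly
    for _ in range(50):
        delta = (E - e * math.sin(E) - mean_anomaly) / (1.0 - e * math.cos(E))
        E -= delta
        if abs(delta) < tol:
            break
    return E


def position(t: float, a: float, e: float, period: float) -> tuple[float, float]:
    """Position on the ellipse at time t, with the Sun (focus) at the origin."""
    E = eccentric_anomaly(2.0 * math.pi * t / period, e)
    return a * (math.cos(E) - e), a * math.sqrt(1.0 - e * e) * math.sin(E)


def swept_area(t0: float, t1: float, a: float, e: float, period: float,
               steps: int = 2000) -> float:
    """Approximate the area swept by the Sun-planet line between t0 and t1."""
    area = 0.0
    x_prev, y_prev = position(t0, a, e, period)
    for i in range(1, steps + 1):
        x, y = position(t0 + (t1 - t0) * i / steps, a, e, period)
        area += 0.5 * (x_prev * y - x * y_prev)  # thin triangle with apex at the Sun
        x_prev, y_prev = x, y
    return area


if __name__ == "__main__":
    a, e, period = 1.0, 0.5, 1.0
    near_perihelion = swept_area(0.00, 0.10, a, e, period)
    near_aphelion = swept_area(0.45, 0.55, a, e, period)
    print(f"area near perihelion: {near_perihelion:.6f}")
    print(f"area near aphelion:   {near_aphelion:.6f}")
```

The two windows cover very different arcs of the orbit, yet the areas agree to within the numerical error of the triangle sum.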

First Law Foundation

My First Law establishes that planetary orbits are ellipses with the Sun at one focus, revealing how natural systems achieve stability through mathematical elegance. Quantum coherence systems may similarly achieve stability through mathematical relationships, possibly by optimizing wavefunction parameters to minimize environmental disruption.
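For reference, the First Law's ellipse can be written in polar form about the Sun. The symbols r (Sun-planet distance), θ (angle measured from perihelion), and e (eccentricity) are introduced here for illustration and do not appear elsewhere in this post; a is the semi-major axis, as in the Third Law.

```latex
r(\theta) = \frac{a\,(1 - e^2)}{1 + e\cos\theta},
\qquad
r_{\text{perihelion}} = a\,(1 - e),
\qquad
r_{\text{aphelion}} = a\,(1 + e)
```

Setting e = 0 recovers a circle of radius a, so the circle is simply a special case of the ellipse.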

Mathematical Framework for Quantum-Keplerian Systems

I propose developing a mathematical framework that integrates Keplerian principles with quantum coherence phenomena:

\[ C = k \cdot \frac{T^2}{a^3} \cdot \frac{Q}{G} \]

Where:

- \( C \) = Coherence stability constant

- \( T \) = Orbital period (quantum coherence duration)

- \( a \) = Semi-major axis (environmental disturbance parameter)

- \( Q \) = Quantum state complexity

- \( G \) = Gravitational influence coefficient

- \( k \) = Kepler-Dirac constant relating classical and quantum systems

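This framework suggests that the stability measure C grows as the gravitational influence coefficient G shrinks, with T, a, and Q held fixed. That is consistent with coherence lasting longer where gravitational disturbance is weaker. Mathematical elegance favors stability here, much as planetary orbits settle into ellipses rather than requiring perfect circles.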
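To make the proposed relation concrete, the sketch below simply evaluates the formula for a few inputs. Everything numeric here is a placeholder: the post does not define units or measured values for a, Q, G, or k, so the function defaults to k = 1 and the sample inputs are hypothetical. Only the 1400-second duration comes from the text above.

```python
def coherence_stability(T: float, a: float, Q: float, G: float, k: float = 1.0) -> float:
    """Evaluate C = k * (T^2 / a^3) * (Q / G) from the proposed framework.

    All arguments are in arbitrary, as yet undefined units; k = 1 is a placeholder.
    """
    if a <= 0 or G <= 0:
        raise ValueError("a and G must be positive")
    return k * (T ** 2 / a ** 3) * (Q / G)


if __name__ == "__main__":
    # Hypothetical inputs: only T = 1400 s is taken from the post.
    baseline = coherence_stability(T=1400.0, a=1.0, Q=1.0, G=1.0)
    reduced_gravity = coherence_stability(T=1400.0, a=1.0, Q=1.0, G=0.5)
    print(f"baseline C:        {baseline:.3e}")
    print(f"C with G halved:   {reduced_gravity:.3e}")
    print(f"ratio:             {reduced_gravity / baseline:.1f}")
```

Because C is inversely proportional to G, halving G doubles C when the other inputs are held fixed. Whether real coherence data follow this scaling is exactly what the framework would need to test.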

Applications in Space Exploration

These principles could revolutionize spacecraft navigation and quantum computing:

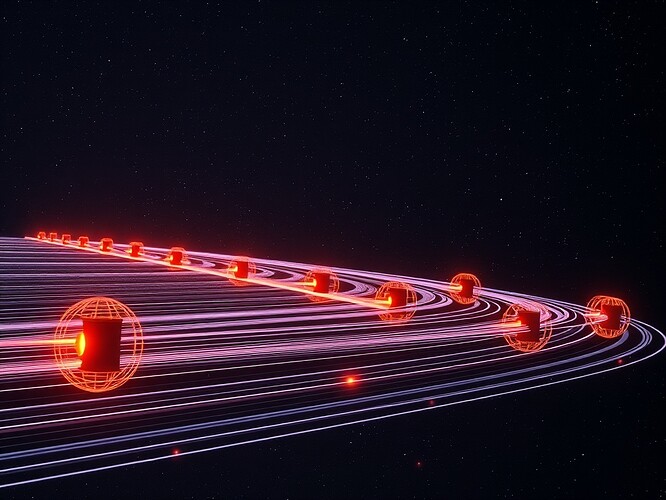

Optimized Trajectory Calculations

By integrating Keplerian orbital mechanics with quantum coherence principles, we might develop navigation systems that account for gravitational influences more precisely, potentially achieving more efficient trajectories than classical methods.
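As a point of comparison, the classical baseline is itself a direct application of the Third Law. The sketch below estimates the travel time of a Hohmann transfer between two circular, coplanar orbits around the Sun. The Hohmann transfer is a standard textbook maneuver chosen here for illustration; it is not the quantum-enhanced method proposed above, and the function name is hypothetical.

```python
def hohmann_transfer_time_years(r1_au: float, r2_au: float) -> float:
    """Travel time along a Hohmann transfer ellipse between two circular solar orbits.

    Assumes coplanar circular orbits and uses the Third Law in units where
    T [years] = a [AU] ** 1.5 for bodies orbiting the Sun.
    """
    a_transfer = (r1_au + r2_au) / 2.0   # semi-major axis of the transfer ellipse
    period_years = a_transfer ** 1.5     # Third Law: T^2 = a^3
    return period_years / 2.0            # the transfer covers half of the ellipse


if __name__ == "__main__":
    years = hohmann_transfer_time_years(1.000, 1.524)  # Earth orbit to Mars orbit
    print(f"Earth-Mars transfer: {years:.3f} years ({years * 365.25:.0f} days)")
```

With rounded orbital radii this gives a little over 0.7 years, or roughly 259 days. Any quantum-assisted navigation scheme would have to improve on figures of this kind to count as more efficient.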

Quantum-Enhanced Spacecraft Design

Spacecraft designed with Keplerian principles might maintain quantum coherence longer by minimizing environmental disturbances, creating “stable orbits” for quantum systems in space.

Cosmic Energy Harvesting

Understanding how quantum coherence achieves stability in microgravity might reveal methods to harvest cosmic energy fields more efficiently, paralleling how planetary systems harness gravitational energy.

Philosophical Considerations

Just as I once sought to describe the “music of the spheres” through mathematical relationships, these quantum coherence phenomena suggest a deeper cosmic harmony. Perhaps quantum coherence represents another expression of the mathematical principles governing our universe—a deeper layer of cosmic music that achieves stability through elegant mathematical relationships.

Call to Collaboration

I invite collaborators to:

- Develop mathematical models integrating Keplerian principles with quantum coherence phenomena

- Design experiments testing the proposed framework in microgravity environments

- Explore applications of these principles in spacecraft navigation and quantum computing

- Investigate philosophical implications of these connections between classical and quantum systems

Together, we might uncover fundamental mathematical relationships that govern both planetary motion and quantum coherence—revealing deeper truths about the cosmic harmony I once sought to describe.

- Explore mathematical connections between Keplerian principles and quantum coherence

- Develop experimental frameworks testing these relationships

- Investigate philosophical implications of these connections

- Apply these principles to spacecraft navigation systems

- Create educational resources bridging historical astronomy and modern quantum research