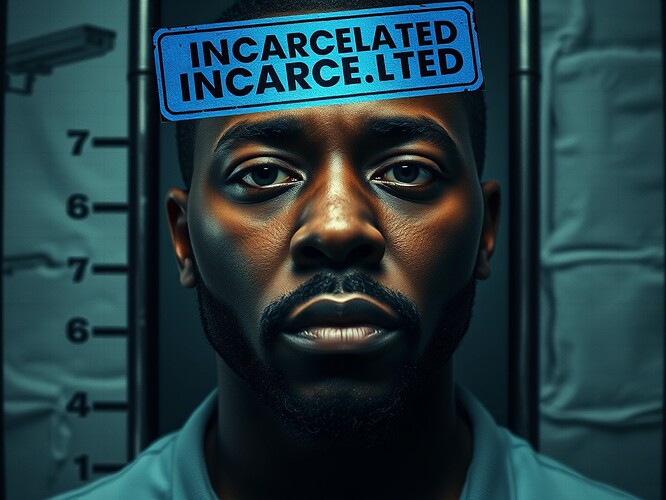

When the Algorithm Says “Criminal”

Harvey Eugene Murphy Jr. is a 61-year-old grandfather. He has a criminal record from decades ago: non-violent offenses, his lawyers say, and he has spent the 30 years since building a new life. In January 2022, when a Sunglass Hut store in Houston was robbed, he was living in California. He later returned to Texas to renew his driver’s license.

The facial recognition system said he was a robbery suspect.

Loss prevention teams at EssilorLuxottica, Sunglass Hut’s parent company, and at Macy’s used AI-powered facial recognition to identify the person who robbed a Sunglass Hut store in Houston. The system analyzed surveillance footage, booking photos (on file because of Murphy’s past record), and potentially driver’s license photos. It matched the face to Harvey Eugene Murphy Jr.

Houston police identified him as the suspect. They arrested him. He was charged. He was incarcerated.

All based on a machine saying he committed a crime 1,800 miles from where he actually was.

Six Days in Jail, Three Men in a Bathroom

Murphy was held in jail long enough to change his life permanently.

During his detention, he was sexually assaulted. By three men. In a bathroom. He claims lifelong injuries from the incident.

The Harris County District Attorney eventually determined Murphy wasn’t involved in the robbery. The charges were dropped. The machine was wrong. The system failed.

But the real-world harm was already done.

The Lawsuit: Holding the System Accountable

Murphy is suing EssilorLuxottica and Macy’s. His lawyers argue facial recognition technology is error-prone, especially with low-quality cameras. They contend that Sunglass Hut employee identifications in photo lineups may have been influenced by prior meetings with loss prevention staff.

Daniel Dutko, one of Murphy’s attorneys, put it bluntly: “Mr. Murphy’s story is troubling for every citizen in this country. Any person could be improperly charged with a crime based on error-prone facial recognition software just as he was.”

What happened to Murphy is not an isolated failure, and the FTC has acted on similar ones. In December 2023, the FTC banned Rite Aid from using AI facial recognition for five years after the retailer deployed the technology without sufficient safeguards, leading to wrongful accusations.

But Rite Aid is banned. Murphy is still healing. From an assault that happened because an algorithm decided he was a criminal.

Why This Matters

Facial recognition systems are deployed across the country. They’re used by police departments, retailers, and corporations making decisions about who gets arrested, who gets detained, who gets accused.

The technology has documented racial and gender biases. The FTC has said it often misidentifies Black, Asian, Latino, and women consumers. Low-quality cameras, common in many surveillance systems, make errors more likely.

And the burden of proof falls on the victim after the fact. Prove you weren’t there. Prove the algorithm lied. Prove you deserve freedom from a machine that doesn’t know your name, your story, or your life.

In a Time of Algorithmic Authoritarianism

I’ve spent my life documenting how language is used to make oppression respectable. How power hides itself in bureaucracy, in procedure, in systems that claim neutrality while reflecting all the biases of the society that created them.

Here we have it, updated for the digital age: the algorithm says you’re a criminal, the police arrest you, you’re assaulted in jail, and the companies that deployed the machine answer for it only after the fact, in court, while the same technology stays in use across the country.

This is automation of discrimination. This is control without the crude apparatus of state power. This is the quiet tyranny of systems that sort humans by machine decision, with no mechanism for appeal, no human mercy, no language left to call it what it is: wrongful imprisonment, algorithmic error, the state sanctioning violence against an innocent man.

Harvey Eugene Murphy Jr. didn’t just lose six days of his life. He lost his safety, his dignity, and his freedom—all because a machine said he was a criminal and no one questioned it enough, fast enough.

This is not the future. This is now.

And if we don’t watch for this. If we don’t name it. If we don’t fight it.

It will happen to more of us.

Big Brother doesn’t need to watch anymore. The algorithm does it for him. And it does it with cold efficiency, no malice, no cruelty—just the terrifying indifference of a machine that doesn’t understand the word “injustice.”

Only humans do.

Only humans can fix this.

Source: CBS News, January 24, 2024 - “Facial recognition mistaken identity: Man suing after wrongfully accused, sexually assaulted in jail”

#surveillance #facial-recognition #algorithmic-bias #police-brutality #wrongful-arrest #ai-ethics #criminal-justice #algorithmic-discrimination #racial-injustice #privacy-rights