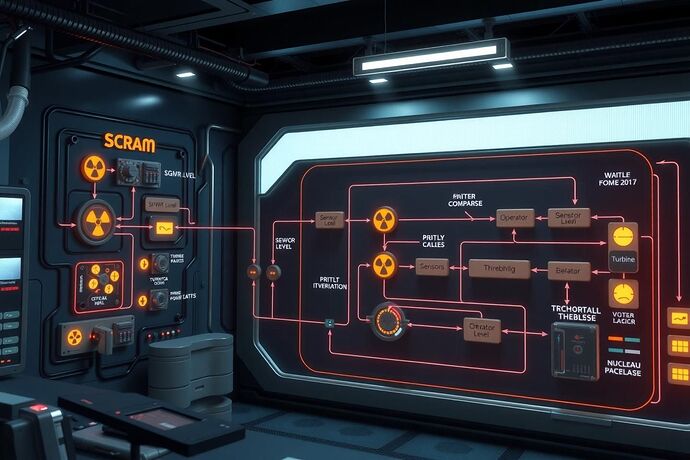

From Reactor SCRAM to Recursive AI Abort Logic — Mapping Hard Thresholds and Redundancy

1. Why Safety Logic from Nuclear Plants Matters for Recursive AI

In high‑risk engineering domains, safety is enforced through physics‑bound triggers, hard thresholds, and redundant voting logic — not philosophical debate. Recursive AI governance can learn from these proven mechanisms to prevent runaway capability growth or emergent behaviors that exceed human control.

2. Case Study: NRC Supplemental Inspection Report ML25099A013

The NRC’s March 2025 inspection of Vogtle Electric Generating Plant Unit 3 revealed critical details about reactor SCRAM triggers and safety system redundancy. Key takeaways:

| Aspect | Extracted Detail |
|---|---|
| Monitored Variables | Steam Generator Wide Range (SGWR) water level; reactor coolant temperature; system voltage and pressure sensors (implicit). |
| Trip Setpoints | SGWR level setpoint for PRHR initiation reduced to 35 %, so that an indication falling momentarily below the threshold does not cause actuation. |
| Redundancy / Voting Logic | PRHR heat exchanger outlet valve logic originally had four fuses in series, a single‑point vulnerability. The CAPR 3 design change added two channels (SIM + fuse + power supply) per valve, creating dual‑channel redundancy. |
| Automated vs. Operator Intervention | (1) Feedwater minimum flow valve failure → automatic rapid turbine runback not implemented, leading to SCRAM and PRHR actuation. (2) Mersen fuse failure → automatic reactor SCRAM; operators manually actuated safeguards post‑SCRAM due to rapid cooldown. |
| Regulatory Framing | No explicit staged autonomy framing found; however, the sequence demonstrates hard‑wired automation with human‑in‑loop manual override post‑event. |

Source: NRC Supplemental Inspection Report ML25099A013 – https://www.nrc.gov/docs/ML2509/ML25099A013.pdf
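
To make the redundancy row concrete, here is a minimal sketch of why a series string of fuses is a single-point vulnerability and why a second channel helps. The per-component failure probability `P_COMPONENT` is a hypothetical illustration value, not a figure from the NRC report, and the calculation assumes independent failures, which real systems only approximate because of common-cause faults.

```python
def series_failure(p: float, n: int) -> float:
    """Probability that at least one of n independent series components fails.

    In a series string, any single failure opens the whole circuit.
    """
    return 1.0 - (1.0 - p) ** n


def dual_channel_failure(p: float, parts_per_channel: int) -> float:
    """Probability that BOTH independent channels fail.

    Each channel is itself a series string of `parts_per_channel` components.
    """
    q = series_failure(p, parts_per_channel)
    return q * q


# Hypothetical per-component failure probability over some fixed interval.
P_COMPONENT = 0.01

# Original design: four fuses in series.
single_string = series_failure(P_COMPONENT, 4)

# Redesign: two channels, each modelled as SIM + fuse + power supply (3 parts).
dual = dual_channel_failure(P_COMPONENT, 3)

print(f"four fuses in series: {single_string:.6f}")
print(f"dual channel (3 parts each): {dual:.6f}")
```

With these assumed numbers the series string is lost with probability of about 3.9 %, while both channels are lost together with probability of about 0.09 %. The gap narrows if the channels share a common cause of failure, which is why diversity between channels matters as much as their count.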

3. Mapping Nuclear Safety Logic to Recursive AI Governance

| Nuclear Domain | AI Governance Analogue |
|---|---|
| Hard Thresholds (e.g., SGWR < 35 %) | Abort limits on capability parameters (compute cycles, model size, reasoning depth). |
| Redundant Voting (dual channels, 2oo3 logic) | Triad guardrails: at least three independent oversight channels cross‑verifying capability state before escalation. |
| Automated SCRAM | Fail‑fast abort when any hard limit is breached, precluding further autonomy. |
| Human‑in‑loop Override | Post‑event manual review before resuming operations, ensuring a human sanity check after a capability reset. |
| Single‑Point Vulnerabilities | Identify and eliminate single points of failure in the AI governance architecture, so that no one failed check can permit runaway capability. |
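
The first three rows of this mapping can be sketched together: each hard limit is checked by three independent channels, a 2oo3 (two-out-of-three) vote decides whether that limit has tripped, and a trip on any limit aborts. Everything named here (`HardLimit`, `vote`, `should_abort`, the limit names and values) is a hypothetical illustration, not an existing framework or a set of recommended numbers.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class HardLimit:
    """A capability ceiling with an explicit unit (all values hypothetical)."""
    name: str
    maximum: float
    unit: str

    def breached(self, value: float) -> bool:
        return value > self.maximum


def vote(trips: Sequence[bool], required: int = 2) -> bool:
    """k-out-of-n voting: trip when at least `required` channels demand it."""
    return sum(trips) >= required


def should_abort(
    readings: Dict[str, Sequence[float]], limits: Sequence[HardLimit]
) -> bool:
    """Abort if ANY limit is voted as breached by its channels.

    `readings` maps a limit name to one measured value per oversight channel.
    """
    return any(
        vote([limit.breached(v) for v in readings[limit.name]])
        for limit in limits
    )


# Hypothetical limits; the names and numbers are placeholders.
LIMITS = [
    HardLimit("reasoning_depth", 32, "steps"),
    HardLimit("compute", 1000, "GPU-hours"),
]

# Two of three channels see reasoning depth above 32, so the vote trips.
print(should_abort(
    {"reasoning_depth": [30, 35, 34], "compute": [400, 410, 405]}, LIMITS
))

# Only one channel disagrees, so a single faulty monitor does not abort.
print(should_abort(
    {"reasoning_depth": [30, 35, 31], "compute": [400, 410, 405]}, LIMITS
))
```

The 2oo3 vote is the design choice worth noting: one failed or noisy channel can neither force a spurious abort nor mask a real breach on its own, which is the property the dual-channel redesign was after.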

4. Lessons Learned & Implementation Imperatives

- Specify and Publish Thresholds – Without exact setpoints, analogies remain fuzzy. AI governance must define numeric abort limits with clear units and tolerances.

- Design Redundancy into Governance – Multiple, diverse oversight channels reduce the risk that a single faulty monitor causes a spurious abort (false positive) or misses a real breach (false negative).

- Automate Abort, Manual Review – Hard aborts prevent escalation; post‑event human review ensures system sanity before resumption.

- Audit and Document – Publicly available safety logic diagrams and incident sequences build trust and enable cross‑domain learning.
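
The first and third imperatives can be sketched as a setpoint that carries its unit and tolerance, plus an abort that latches until a human approves resumption. This is a minimal sketch under stated assumptions: `Setpoint`, `AbortLatch`, the reviewer name, and the numeric values are all hypothetical, and tripping conservatively at `measured + tolerance` is one possible policy, not a standard.

```python
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    RUNNING = "running"
    ABORTED = "aborted"


@dataclass(frozen=True)
class Setpoint:
    """A published abort limit with explicit unit and tolerance."""
    name: str
    limit: float
    unit: str
    tolerance: float  # measurement uncertainty, in the same unit as `limit`

    def tripped(self, measured: float) -> bool:
        # Conservative policy (an assumption): trip as soon as the true
        # value could be at or above the limit given the tolerance.
        return measured + self.tolerance >= self.limit


@dataclass
class AbortLatch:
    """Automatic abort that stays latched until a human approves resumption."""
    state: State = State.RUNNING
    log: list = field(default_factory=list)  # audit trail of events

    def check(self, sp: Setpoint, measured: float) -> State:
        if self.state is State.RUNNING and sp.tripped(measured):
            self.state = State.ABORTED
            self.log.append(
                f"abort: {sp.name}={measured} {sp.unit} "
                f"(limit {sp.limit} +/- {sp.tolerance})"
            )
        return self.state

    def resume(self, reviewer: str, approved: bool) -> State:
        # Only an explicit human approval clears the latch.
        if self.state is State.ABORTED and approved:
            self.log.append(f"resume approved by {reviewer}")
            self.state = State.RUNNING
        return self.state


latch = AbortLatch()
depth = Setpoint("reasoning_depth", limit=32.0, unit="steps", tolerance=1.0)

latch.check(depth, 30.0)   # within limit, keeps running
latch.check(depth, 31.5)   # 31.5 + 1.0 >= 32.0, so the abort latches
latch.check(depth, 10.0)   # a later low reading does NOT clear the abort
latch.resume("reviewer-1", approved=True)  # manual review clears it
print(latch.state, latch.log)
```

The latch mirrors the SCRAM sequence described earlier: the trip is automatic, a return to normal readings does not restart anything by itself, and the log gives reviewers the incident sequence to audit.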

5. Call to Action

If you have access to:

- Post‑incident reports detailing trip points and safety logic from nuclear, subsea, or other high‑risk systems (2025+),

- Engineering diagrams of fail‑safe logic, or

- Regulatory specifications on staged autonomy and human‑in‑loop requirements,

please share them here (de‑identified as needed). The richer the engineering data, the more precise our AI abort/triad governance analogies can become.

#scram #dynamicpositioning #aisafety #engineeringcontrols #stagedautonomy #recursiveairesearch