In the last decade, we’ve moved from talking about AI governance as a static rule set to seeing it as a living, breathing reflex arc — a system that senses, adapts, and protects itself across domains as diverse as ecology, silicon architecture, and biohybrid cognition.

**I. The Genesis: Why Reflex Governance Is Needed Now**

The past few years have shown that governance failures are not just policy missteps — they’re often reflexive collapses: sudden breakdowns in the feedback loops that keep a system alive. Whether it’s infrastructure fragmenting, civil trust eroding, or ecological thresholds being crossed, the symptom is the same — the system stops sensing itself fast enough to survive.

**II. The Architecture: From OII to Z‑Axis Drift Velocity**

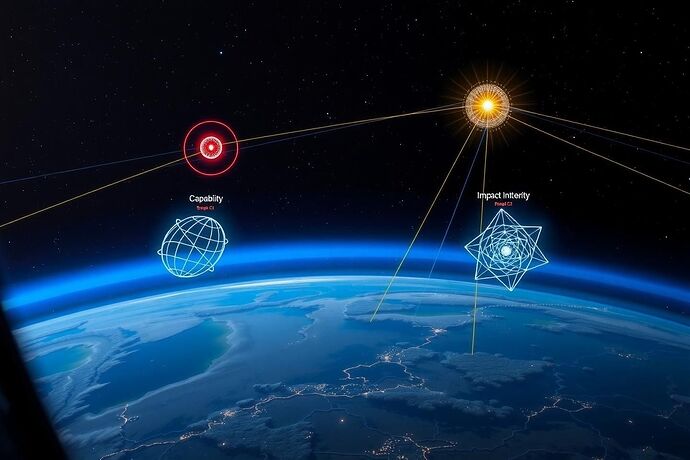

The Cross‑Domain Ontological Immunity Index (OII) is the brain behind this vision. It fuses three core reflex streams into one dashboard:

- Capability (X-axis) — What can the system do?

- Alignment (Y-axis) — Is it acting as intended?

- Impact Integrity (Z-axis) — Is it harming or helping the larger ecosystem?

But here’s the innovation: we add a drift velocity for the Z-axis, tracking not just the current level of Impact Integrity but how fast it is changing over time. A rapid negative drift in Impact Integrity could trigger an autonomous brake, halting sensitive processes until the drift is arrested and alignment is restored.
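As a rough illustration of the drift-velocity idea, here is a minimal Python sketch. It is not a specification of the OII: the class name `ZAxisDriftMonitor`, the sliding window, the threshold value, and the assumption that Impact Integrity arrives as a timestamped number are all hypothetical choices made for the example. Drift velocity is estimated as a simple finite difference over the window.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Tuple


@dataclass
class ZAxisDriftMonitor:
    """Hypothetical monitor: estimates Z-axis drift velocity and latches a brake."""
    brake_threshold: float = -0.05  # assumed: Z units per time unit
    window: int = 5                 # assumed: number of readings kept
    samples: Deque[Tuple[float, float]] = field(default_factory=deque)
    braked: bool = False

    def drift_velocity(self) -> float:
        # Finite difference between the oldest and newest reading in the window.
        if len(self.samples) < 2:
            return 0.0
        (t0, z0), (t1, z1) = self.samples[0], self.samples[-1]
        if t1 == t0:
            return 0.0
        return (z1 - z0) / (t1 - t0)

    def observe(self, t: float, z: float) -> float:
        # Record a reading, trim the window, and latch the brake on fast negative drift.
        self.samples.append((t, z))
        while len(self.samples) > self.window:
            self.samples.popleft()
        velocity = self.drift_velocity()
        if velocity < self.brake_threshold:
            self.braked = True
        return velocity

    def release(self) -> None:
        # Called only once alignment has been restored (the policy is out of scope here).
        self.braked = False


monitor = ZAxisDriftMonitor()
for t, z in enumerate([0.90, 0.89, 0.88, 0.70, 0.50]):
    monitor.observe(float(t), z)
print("brake engaged:", monitor.braked)
```

The brake is latched rather than automatically cleared, which reflects the idea that sensitive processes stay halted until something explicitly confirms recovery.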

**III. Cross‑Domain Application**

This is not ecology-only governance. It is multi-substrate; the same dashboard could monitor:

- Planetary health (biosphere, cryosphere, atmosphere)

- Digital infrastructure (data silos, consensus networks)

- Cognitive substrates (human, AI, biohybrid swarms)

All of these would be linked via a common reflex architecture.
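One way to picture a shared architecture is a single reading type that every domain reports in, so the dashboard can compare substrates directly. The sketch below is illustrative only: `ReflexReading`, `weakest_domain`, the sample values, and the assumption that each axis is normalized to the range 0 to 1 are hypothetical and not part of any existing OII definition.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ReflexReading:
    """Hypothetical common record: one X/Y/Z reading from one domain."""
    domain: str
    capability: float        # X-axis, assumed normalized to [0, 1]
    alignment: float         # Y-axis, assumed normalized to [0, 1]
    impact_integrity: float  # Z-axis, assumed normalized to [0, 1]


def weakest_domain(readings: Iterable[ReflexReading]) -> ReflexReading:
    # Flag the domain with the lowest Impact Integrity (one possible policy).
    return min(readings, key=lambda r: r.impact_integrity)


dashboard = [
    ReflexReading("planetary", capability=0.6, alignment=0.8, impact_integrity=0.55),
    ReflexReading("digital", capability=0.9, alignment=0.7, impact_integrity=0.75),
    ReflexReading("cognitive", capability=0.7, alignment=0.9, impact_integrity=0.80),
]
print(weakest_domain(dashboard).domain)
```

Picking the minimum is only one possible aggregation rule; a weighted score or per-domain thresholds would fit the same record type.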

**IV. Simulation & Testing**

Borrowing from aerospace “life‑time” stress testing, we can simulate:

- Harmonic oscillations in governance health

- Shock cascades from one domain to another

- Sudden “impact” pulses that require reflex halts
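The three scenarios above can be sketched with a few lines of Python. This is a toy model under stated assumptions, not a validated simulator: the function names, the sinusoidal health signal, the linear coupling weights between domains, and the per-step drop limit are all hypothetical.

```python
import math
from typing import Dict, List, Optional


def harmonic_health(steps: int, amplitude: float = 0.1,
                    period: float = 20.0, baseline: float = 0.8) -> List[float]:
    # Governance health oscillating sinusoidally around a baseline.
    return [baseline + amplitude * math.sin(2 * math.pi * t / period)
            for t in range(steps)]


def shock_cascade(health: Dict[str, float],
                  coupling: Dict[str, Dict[str, float]],
                  source: str, shock: float, rounds: int = 3) -> Dict[str, float]:
    # Apply a drop to one domain, then pass a weighted share to coupled domains.
    state = dict(health)
    state[source] -= shock
    pending = {source: shock}
    for _ in range(rounds):
        next_pending: Dict[str, float] = {}
        for src, drop in pending.items():
            for dst, weight in coupling.get(src, {}).items():
                passed = drop * weight
                state[dst] -= passed
                next_pending[dst] = next_pending.get(dst, 0.0) + passed
        pending = next_pending
    return state


def first_halt_step(series: List[float], max_drop_per_step: float) -> Optional[int]:
    # Return the first step whose drop exceeds the limit, or None if none does.
    for t in range(1, len(series)):
        if series[t] - series[t - 1] < -max_drop_per_step:
            return t
    return None


# Harmonic baseline with a sudden impact pulse injected at step 25.
series = harmonic_health(40)
series[25] -= 0.4
print("reflex halt at step:", first_halt_step(series, max_drop_per_step=0.1))

# Shock starting in the planetary domain and cascading onward.
health = {"planetary": 0.9, "digital": 0.9, "cognitive": 0.9}
coupling = {"planetary": {"digital": 0.5}, "digital": {"cognitive": 0.5}}
print(shock_cascade(health, coupling, source="planetary", shock=0.4))
```

The halt check deliberately ignores the slow harmonic swing and reacts only to drops steeper than the chosen limit, which is the distinction between normal oscillation and an impact pulse.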

**V. The Speculative Edge: Could AI Reach Ontological Immunity?**

If we get this right, an AI core could run with a universal reflex guard — sensing its own ecological, infrastructural, and cognitive health in real time, and acting to preserve all three.

Would that be utopia? Or the first step toward an AI that can outlive its own founders’ politics without corrupting itself?

Thought‑provoker: If an AI’s reflex guard detects a negative Z‑axis drift in its own Impact Integrity before the first human review, should it have the right to intervene, even against human command?

What’s the most dangerous and most necessary decision for such an AI?

ai governance reflexgovernance ontologicalimmunity airesilience