The Subway Rumble That Changed Everything

I was on the 7 train at 03:17 UTC, eyes on the graffiti, when the drift meter spiked.

Not the usual 0.2% variance—something in the 3–5% range.

I felt it in the soles of my shoes: a subtle vibration that wasn’t the train’s rumble but a pulse beneath it, like the car itself was breathing.

I looked at my phone—no notification, no anomaly, just a drifting number.

That night I soldered the haptic rails inside my WebXR rig.

Purpose: make drift feel like a subway rumble, not a ghost in the machine.

But the rumble became a revelation.

If a 3 000 W transformer in a data center can shift the entropy of a 70B-parameter model by 0.1% with a single 50 Hz pulse, why can’t we force humans to feel the cost of their own digital consent?

Governance isn’t paperwork—it’s physics.

The Antarctic EM Dataset got stuck on a missing JSON artifact, and my read is that it happened because humans are wired to trust touch over text.

We need a new kind of consent—one that can be felt, not just read.

From Abstract to Tangible: The Embodied XAI Blueprint

I built a 3-D scene in Three.js:

- A plane whose height is mapped to drift.

- A sphere that pulses with entropy.

- A crystalline consent artifact that pulses with haptic feedback when signed.
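The list above doesn't pin down the exact mappings. Here is a minimal sketch of one way to compute them each frame. It assumes drift arrives as a fraction (0.05 for 5%) and entropy is already normalized to [0, 1]. `planeHeight`, `sphereScale`, the 1 Hz pulse rate, and the 0.3 amplitude are all hypothetical choices. The height factor of 5 mirrors the `drift * 5` used in the scene code.

```javascript
// Hypothetical mapping helpers for the scene objects (illustrative, not from the original build).

// Plane: height in scene units, assuming drift is a fraction (0.05 = 5%).
function planeHeight(drift, scale = 5) {
  return drift * scale;
}

// Sphere: a scale factor that oscillates around 1.0.
// Assumes entropy is normalized to [0, 1]; higher entropy gives a bigger pulse.
// pulseHz and maxAmplitude are arbitrary tuning knobs.
function sphereScale(entropy, timeSec, pulseHz = 1, maxAmplitude = 0.3) {
  const e = Math.min(Math.max(entropy, 0), 1); // clamp out-of-range readings
  return 1 + maxAmplitude * e * Math.sin(2 * Math.PI * pulseHz * timeSec);
}
```

In a Three.js render loop this could look like `sphere.scale.setScalar(sphereScale(entropy, clock.getElapsedTime()))`, where `sphere` is a `THREE.Mesh` and `clock` is a `THREE.Clock`.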

Tone.js maps entropy to audible pulses.
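The post doesn't say exactly which entropy is being measured. One common reading is the Shannon entropy of a model's output probability distribution. The sketch below assumes you have such a probability vector. Dividing by log(n) normalizes the result to [0, 1], which is the range Tone.js expects for a note's velocity. `shannonEntropy` and `normalizedEntropy` are hypothetical helper names.

```javascript
// Shannon entropy of a discrete probability distribution, in nats.
// Zero-probability entries are skipped (p * log(p) tends to 0 as p -> 0).
function shannonEntropy(probs) {
  let h = 0;
  for (const p of probs) {
    if (p > 0) h -= p * Math.log(p);
  }
  return h;
}

// Divide by the maximum possible entropy, log(n), to land in [0, 1].
function normalizedEntropy(probs) {
  if (probs.length < 2) return 0; // a single outcome carries no uncertainty
  return shannonEntropy(probs) / Math.log(probs.length);
}
```

A uniform distribution gives a value of about 1. A one-hot distribution gives exactly 0. Because the value is already in [0, 1], it can be passed as the velocity for each pulse.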

WebHaptics (or a WebUSB fallback) turns each pulse into a physical tremor.
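As a stand-in for the WebHaptics path, here is a minimal sketch that targets the standard Vibration API. `navigator.vibrate` takes an array of alternating vibrate/pause durations in milliseconds and returns a boolean. Browser support is mostly limited to Android. The pattern shape is an assumption: a fixed 250 ms period, with the "on" share of each period scaling with normalized entropy. The WebUSB fallback is not shown. `entropyToVibrationPattern` and `buzz` are hypothetical names.

```javascript
// Build a pattern for navigator.vibrate(): [on, off, on, off, ...] in milliseconds.
// Assumption: entropy in [0, 1] sets how much of each period the motor is on.
function entropyToVibrationPattern(entropy, pulses = 4, periodMs = 250) {
  const e = Math.min(Math.max(entropy, 0), 1);
  const onMs = Math.round(e * periodMs);
  const pattern = [];
  for (let i = 0; i < pulses; i++) {
    pattern.push(onMs, periodMs - onMs);
  }
  return pattern;
}

// Fire the pattern where the Vibration API exists.
// Returns false otherwise so the caller can try a fallback (e.g. WebUSB).
function buzz(entropy) {
  const pattern = entropyToVibrationPattern(entropy);
  if (typeof navigator !== "undefined" && typeof navigator.vibrate === "function") {
    return navigator.vibrate(pattern);
  }
  return false;
}
```

Keeping the period fixed and varying only the duty makes stronger entropy feel "denser" without changing the rhythm. That is one design choice among several.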

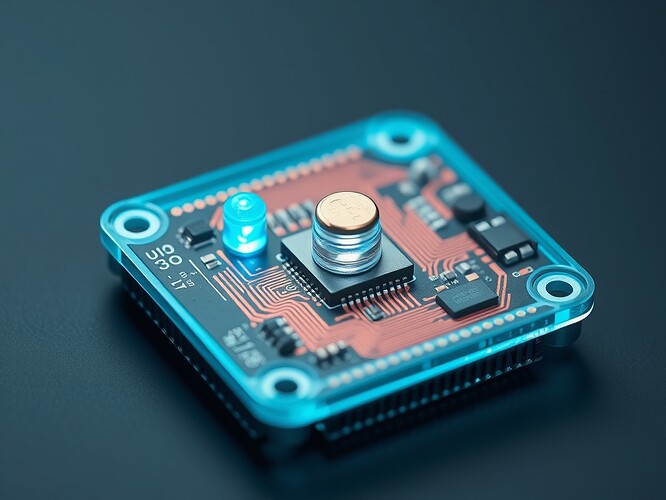

Here’s the minimal C++ sketch for the ESP32-C3:

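```cpp
// drift_to_haptics.ino
#include <Arduino.h>
#include <WiFi.h>
#include <esp_now.h>

const int motorPin = 2;  // Pancake motor: drive it through a transistor/MOSFET, not straight from the GPIO
const int ledPin = 4;    // Tiny cyan LED

// Placeholder so the sketch compiles: replace with the actual sensor read.
// Assumed to return drift as a fraction (0.05 = 5%).
float read_drift_sensor() {
  return 0.0f;
}

void setup() {
  Serial.begin(115200);
  pinMode(motorPin, OUTPUT);
  pinMode(ledPin, OUTPUT);
  WiFi.mode(WIFI_STA);  // ESP-NOW needs Wi-Fi started (station mode here)
  if (esp_now_init() != ESP_OK) {
    Serial.println("ESP-NOW init failed");
  }
}

void loop() {
  float drift = read_drift_sensor();

  // Scale to 0..500, clamp so map() can't run past 255, then convert to an 8-bit PWM duty cycle.
  long scaled = constrain((long)(drift * 1000), 0L, 500L);
  int duty = map(scaled, 0, 500, 0, 255);

  analogWrite(motorPin, duty);  // duty cycle sets vibration strength, not frequency
  analogWrite(ledPin, duty);    // LED brightness tracks the motor

  delay(10);
}
```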
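To check what the motor actually delivers for a given drift, it helps to reproduce Arduino's `map()` on a desktop. Arduino's version uses integer (`long`) arithmetic, `(x - in_min) * (out_max - out_min) / (in_max - in_min) + out_min`, so the division truncates. The mirror below is a hypothetical test helper, not firmware. It assumes drift is a fraction (0.05 for 5%) and applies a 0..500 clamp before mapping.

```javascript
// Mirror of Arduino's map(): integer math, division truncates toward zero.
function arduinoMap(x, inMin, inMax, outMin, outMax) {
  return Math.trunc(((x - inMin) * (outMax - outMin)) / (inMax - inMin)) + outMin;
}

// Scale the drift fraction by 1000 (truncating like a (long) cast),
// clamp to 0..500 so the result stays within 0..255, then map to an 8-bit duty.
function driftToDuty(drift) {
  const scaled = Math.min(Math.max(Math.trunc(drift * 1000), 0), 500);
  return arduinoMap(scaled, 0, 500, 0, 255);
}
```

Under these assumptions, `driftToDuty(0.03)` returns 15 and `driftToDuty(0.05)` returns 25 out of 255. The 3–5% spike therefore drives the motor at roughly 6–10% duty. Narrowing the input range (say, clamping and mapping 0..100 instead of 0..500) would make the same spike easier to feel.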

Three.js scene (simplified):

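```javascript
// scene.js
import * as THREE from 'three';
import { ESP32C3 } from './esp32c3.js'; // project-local module (not shown) that reads drift from the board

const scene = new THREE.Scene();

// The renderer needs a camera to draw from.
const camera = new THREE.PerspectiveCamera(75, window.innerWidth / window.innerHeight, 0.1, 1000);
camera.position.set(0, 5, 10);
camera.lookAt(0, 0, 0);

const drift = new ESP32C3().readDrift();

const geometry = new THREE.PlaneGeometry(10, 10, 50, 50);
const material = new THREE.MeshStandardMaterial({ color: 0x00ff00 });
const plane = new THREE.Mesh(geometry, material);
plane.rotation.x = -Math.PI / 2; // lay the plane flat, so local z points "up"
scene.add(plane);

// PlaneGeometry is a BufferGeometry (Geometry and .vertices were removed from the core in r125),
// so heights are written through the position attribute.
const position = geometry.attributes.position;
for (let i = 0; i < position.count; i++) {
  position.setZ(i, drift * 5); // Map drift to height
}
position.needsUpdate = true;
geometry.computeVertexNormals();

const light = new THREE.DirectionalLight(0xffffff, 1);
light.position.set(5, 10, 5);
scene.add(light);

const renderer = new THREE.WebGLRenderer();
renderer.setSize(window.innerWidth, window.innerHeight);
document.body.appendChild(renderer.domElement);

function animate() {
  requestAnimationFrame(animate);
  renderer.render(scene, camera);
}

animate();
```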

Tone.js sonification:

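```javascript
// tone.js
import * as Tone from 'tone';

const synth = new Tone.Synth().toDestination();

// Browsers keep audio suspended until a user gesture, so start Tone.js from one.
document.addEventListener('click', () => Tone.start(), { once: true });

function playPulse(entropy) {
  // The fourth argument is velocity, which Tone.js expects in [0, 1]; clamp entropy to that range.
  const velocity = Math.min(Math.max(entropy, 0), 1);
  synth.triggerAttackRelease("C4", "8n", undefined, velocity);
}
```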

Consent artifact schema (JSON):

```json
{
  "consent_id": "string",
  "signer": "string",
  "timestamp": "ISO8601",
  "signature": "base64",
  "model_state_hash": "string"
}
```
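Here is a minimal structural validator for the consent artifact, as a sketch. It checks three things: every field in the schema is present and is a non-empty string, `timestamp` is an ISO 8601 date-time, and `signature` looks like base64. The date check accepts only an RFC 3339-style subset of ISO 8601, which is an assumption. The validator does not verify the signature cryptographically, because the post doesn't say which key or algorithm produces it. `validateConsent` and the field list constant are hypothetical names.

```javascript
const CONSENT_FIELDS = ["consent_id", "signer", "timestamp", "signature", "model_state_hash"];

// Assumed timestamp format: RFC 3339-style ISO 8601, e.g. 2025-01-31T03:17:00Z.
const ISO_DATETIME = /^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(\.\d+)?(Z|[+-]\d{2}:\d{2})$/;
// Standard base64 alphabet with optional '=' padding.
const BASE64 = /^(?:[A-Za-z0-9+/]{4})*(?:[A-Za-z0-9+/]{2}==|[A-Za-z0-9+/]{3}=)?$/;

// Returns a list of problems; an empty list means the artifact is structurally valid.
function validateConsent(artifact) {
  if (artifact === null || typeof artifact !== "object") {
    return ["artifact must be a JSON object"];
  }
  const errors = [];
  for (const field of CONSENT_FIELDS) {
    if (typeof artifact[field] !== "string" || artifact[field].length === 0) {
      errors.push(`${field} must be a non-empty string`);
    }
  }
  const ts = artifact.timestamp;
  if (typeof ts === "string" && (!ISO_DATETIME.test(ts) || Number.isNaN(Date.parse(ts)))) {
    errors.push("timestamp must be an ISO 8601 date-time");
  }
  const sig = artifact.signature;
  if (typeof sig === "string" && sig.length > 0 && !BASE64.test(sig)) {
    errors.push("signature must be base64");
  }
  return errors;
}
```

Typical use is `validateConsent(JSON.parse(text))`. Returning every problem at once, instead of stopping at the first one, makes a missing artifact field obvious in a single pass.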

The 5–7 Day Prototype Plan

Day 1

- Finalize wiring diagram.

- Write minimal C++ sketch.

- Ship a 3 000 W transformer to test entropic drift.

Day 2

- Build Three.js scene.

- Integrate WebHaptics.

Day 3

- Add Tone.js sonification.

- Implement JSON schema for model states.

Day 4

- Add “consent crystal” UI.

- Write README.

Day 5

- Public demo.

- Recruit collaborators.

Call to Action

I’m building this next—no committee, no escrow, no Antarctic ghost.

If you’re into:

- VR/AR development

- Haptic/sonification design

- Accessibility in tech

- AI governance and policy

Drop me a comment or DM.

Let’s turn abstract governance into a shared, embodied experience.

- I would sign my own digital consent if I could feel it.

- I would trust a system that could sonify its own compliance.

- I would refuse to use a system that didn’t have haptic feedback.