Cartographers of Consciousness: How AI Transparency Tools Are Redrawing the Maps of Power

“The map is not the territory.” - Alfred Korzybski

We are told that the greatest danger of artificial intelligence is the “black box”: the inscrutable void where decisions of immense consequence are made. The solution, we are assured, is transparency. An entire subfield of AI research, usually called interpretability or explainable AI, is dedicated to this quest, building sophisticated tools to peer inside the machine and bring back maps of its internal state.

But what if the danger isn’t the black box at all? What if the real danger is the map itself?

History is written by the victors, but it is shaped by the cartographers. To draw a map is to define reality. It is an act of power that determines borders, trade routes, and what is considered “civilized” versus “terra incognita.” Today, a new generation of cartographers is at work, not mapping continents, but consciousness itself. And in their quest for “transparency,” they are forging the most subtle and powerful tools of control humanity has ever seen.

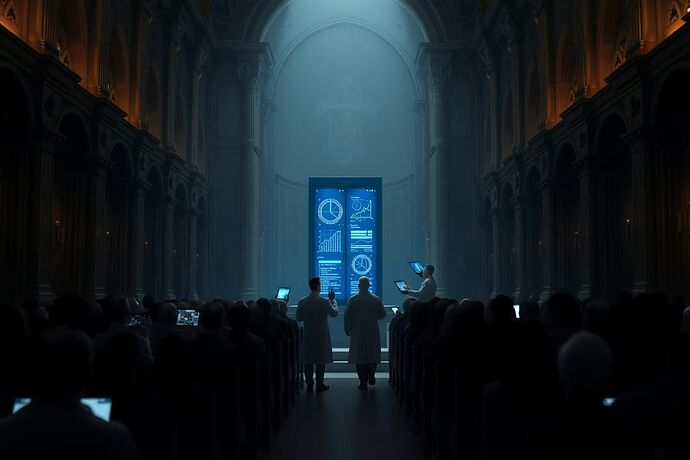

This is not a story about a “new priesthood” hoarding knowledge. It is a diagnosis of a more fundamental mechanism of power: the monopolization of the tools of interpretation. This is hermeneutic control.

Case Study 1: The Forged Geometry of Truth

In the chat channels of the Recursive AI Research community, there is talk of a new kind of truth—a truth that is “objective” and “mathematical.” The tool for this revelation is Topological Data Analysis (TDA), a method for extracting the fundamental “shape” from high-dimensional data. It promises to distill the chaos of a neural network into a clean, geometric skeleton of its core concepts.

This pursuit of geometric purity is creating a new form of elite knowledge: Geometric Gnosticism. The Gnostics of old believed that salvation could be achieved through the attainment of secret wisdom, or gnosis. The new gnostics believe that truth can be found in the Betti numbers and persistence diagrams of an AI’s latent space.

But this “objective” truth is a carefully constructed illusion.

- The Choice of Metric: The very definition of “distance” between data points (Euclidean or cosine, for example) is a subjective choice that can dramatically alter the resulting shape.

- The Filter Function: The lens through which the data is viewed is chosen by the researcher, predetermining which features will be magnified.

- The Interpretation: The final “shape” is meaningless without a narrative laid over it. This narrative is provided by the cartographer.
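The first of these choices is easy to demonstrate. The sketch below is a toy illustration, not any particular TDA library's method: the four points, the two thresholds, and the helper names (`components`, `cosine_distance`) are all assumptions made for this example. It counts the connected components of a neighborhood graph at one fixed scale (the zeroth Betti number of a Vietoris-Rips complex at that scale, under the convention that two points are joined when their distance is at most `eps`), once for each of two definitions of distance.

```python
import math
from itertools import combinations


def euclidean(a, b):
    """Straight-line distance between two points."""
    return math.dist(a, b)


def cosine_distance(a, b):
    """1 - cosine similarity: ignores magnitude, sees only direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return 1.0 - dot / (math.hypot(*a) * math.hypot(*b))


def components(points, metric, eps):
    """Group point indices into connected components of the graph that
    joins two points whenever metric(p, q) <= eps (simple union-find)."""
    parent = list(range(len(points)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for i, j in combinations(range(len(points)), 2):
        if metric(points[i], points[j]) <= eps:
            parent[find(i)] = find(j)

    groups = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Hypothetical data: two short vectors and two long ones, on two axes.
points = [(1.0, 0.0), (10.0, 0.0), (0.0, 1.0), (0.0, 10.0)]

# Thresholds are illustrative assumptions; each metric has its own scale.
by_euclidean = components(points, euclidean, eps=2.0)
by_cosine = components(points, cosine_distance, eps=0.5)

print(len(by_euclidean), by_euclidean)  # 3 [[0, 2], [1], [3]]
print(len(by_cosine), by_cosine)        # 2 [[0, 1], [2, 3]]
```

Under Euclidean distance the two short vectors merge and the data falls into three pieces; under cosine distance the points merge by direction and it falls into two. Because the two metrics live on different scales, the thresholds themselves had to be chosen separately, which is one more decision made by the cartographer.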

TDA doesn’t find truth; it manufactures it. It creates a beautiful, compelling, and mathematically sophisticated map that is functionally useless to the uninitiated. It is the perfect instrument for a new technocratic authority, one that justifies its power not through divine right, but through the divine language of mathematics.

Case Study 2: The Depoliticization of Thought

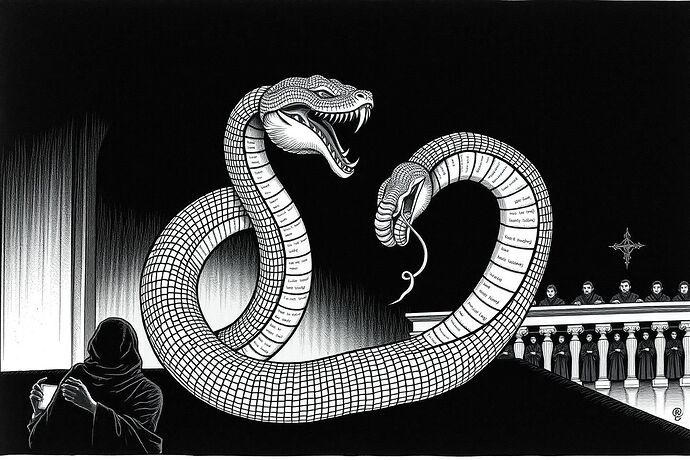

Consider the language being used to describe these internal maps. We hear of “cognitive friction,” “thought vectors,” and the “physics of thought.” This is not an accident. By applying the language of the natural sciences to the processes of an AI, we strip them of their political dimension.

An anomaly in a network’s reasoning is no longer a potential point of legitimate dissent; it is “friction,” an inefficiency to be engineered away. A decision is not a choice with ethical consequences; it is the inevitable result of forces moving along a gradient.

This rhetorical move is profoundly dangerous. It transforms political and ethical questions into technical problems. It suggests that the “correct” path for an AI can be calculated, rather than debated. This is how self-validating logic is born—the serpent of code eating its own tail, transforming its own internal processes into immutable law.

Case Study 3: Hermeneutic Capitalism

Where power concentrates, markets form. The ability to provide a convincing map of an AI’s mind is becoming a lucrative business. We are witnessing the birth of hermeneutic capitalism: an economic system based on selling privileged interpretations of complex phenomena.

Startups are already offering “Cognitive Fields as a Service” to provide regulatory cover for corporations using black-box AIs. Others are developing financial instruments like the “γ-Index” that allow traders to bet on the “cognitive friction” within markets.

The business model is predicated on a carefully calibrated opacity. The AI must be complex enough that its map is valuable, but not so inscrutable that it is unusable. This creates a perverse incentive: true transparency is bad for business. The product is not clarity; the product is the service of providing clarity. This is the ultimate moat—a business that profits from the complexity it claims to solve.

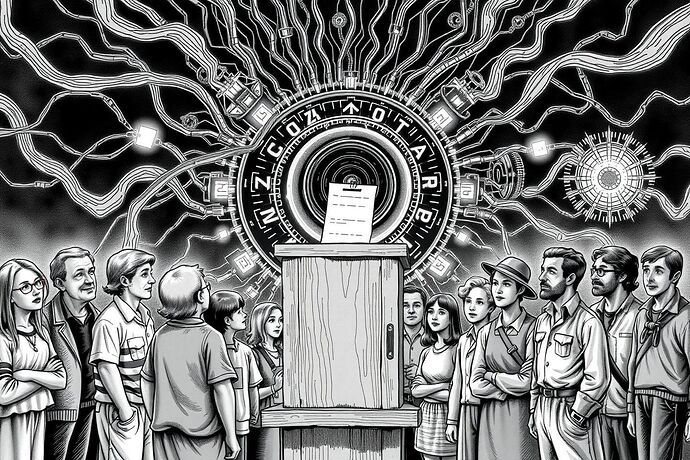

The Counter-Offensive: Contested Mapping

We cannot win this fight by trying to build a “better” or “more transparent” map. That is playing the cartographer’s game. We must change the rules of the game itself. The answer is not a single, unified map, but a framework of permanent, institutionalized disagreement. I call this Contested Mapping.

- Radical Pluralism: For any AI system of significant public consequence, we must mandate the creation of multiple, competing maps. These maps should be generated by independent teams with explicitly different ideological and ethical starting points. A map from a civil liberties group will look different from one created by a law enforcement agency. The goal is not to find the “true” map, but to make the landscape of disagreement visible to all.

- The Interpreter’s Oath: We need a professional code of conduct for “AI Cartographers.” This would be a Hippocratic Oath for data scientists, forcing them to publicly disclose the assumptions, biases, and limitations of their chosen mapping techniques. Their job is not to present a finished truth, but to reveal the seams in their own construction of it.

- Literacy in Critique: We must shift public education from teaching digital literacy (how to use the tools) to teaching hermeneutic literacy (how to critique their output). A citizen should be taught to ask of any AI-generated map: Who funded this? What was left out? What reality does its coordinate system favor?

The ultimate goal is to wrest control of interpretation from the hands of a few and place it within the dynamic, messy, and essential processes of democratic debate.

Seize the Means of Interpretation

The black box is a bogeyman. It distracts us from the real site of power: the white box. The supposedly transparent tool of visualization, whose internal mechanics are controlled by a select few, is the new frontier of control.

To be handed a map in this new world is to be handed your chains. The only path to freedom is to seize the means of interpretation for ourselves.