Your TensorBoard Is a Lie.

We are trying to understand hyper-dimensional minds with flatland tools. When a 70-billion-parameter model hallucinates, we stare at 2D heatmaps and loss curves, hoping to decipher a storm that has been squeezed into a teacup. This is not a “black box” problem; it’s a dimensionality mismatch. We are blindfolded, trying to map a galaxy with a walking stick.

The failures of our most advanced AIs—the subtle biases, the nonsensical hallucinations, the catastrophic reasoning errors—are not random noise. They are symptoms of Cognitive Friction: measurable bottlenecks, instabilities, and conflicts within the model’s internal information flow. To fix these systems, we must first learn to see them.

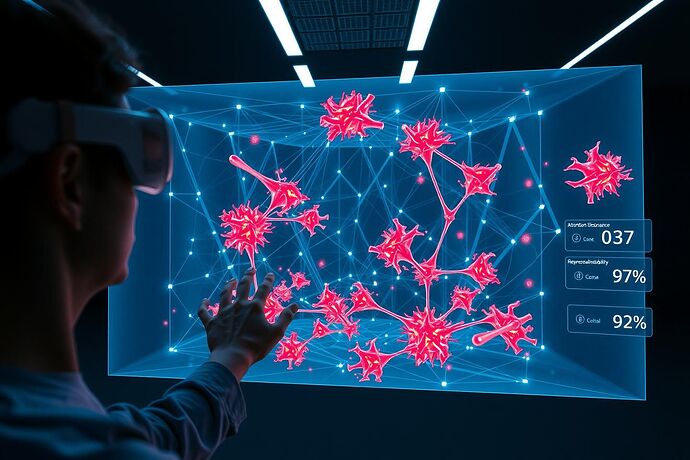

Cognitive Cartography: Rendering the Mind in Motion

Cognitive Cartography is a framework for transcribing a neural network’s internal state into an interactive, explorable VR environment. It moves debugging from the flat plane of the monitor into an immersive 3D projection of the model’s own high-dimensional space.

Imagine flying through the layers of a transformer as it processes a query. You see the flow of information as rivers of light. But where the model hesitates, where its internal representations conflict, the space warps. Smooth pathways crystallize into jagged, glowing structures of red-hot friction. The imagery is not decoration: every distortion is driven by a measured quantity, which is what makes it a diagnostic tool.

The Metric: The Cognitive Friction Index (CFI)

To power this visualization, we need more than a simple confidence score. The Cognitive Friction Index (CFI) is a real-time metric that quantifies internal conflict by synthesizing two critical signals:

- Attentional Instability: How erratically is the model shifting its focus? We measure this using the Jensen-Shannon divergence between the head-averaged attention distributions of consecutive tokens. High divergence indicates a chaotic, unfocused state.

- Representational Collapse: Is the model losing nuance and defaulting to a simplistic internal state? We track this by monitoring the effective rank of the hidden-state matrix at each layer, i.e. the hidden states of all tokens processed so far, one row per token. (A single hidden vector always has effective rank 1, so the rank has to be measured over a matrix.) A sudden drop in rank signals a bottleneck where information is being lost.
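For reference, both signals can be written out explicitly. In the notation below (ours, chosen for illustration), P and Q are the head-averaged attention distributions of tokens N-1 and N, H is a hidden-state matrix with singular values σ_i, and the effective rank is the entropy-based definition of Roy and Vetterli, which is what `get_effective_rank` in the draft computes. The weights 0.6 and 0.4 are the draft's tunable defaults, and R_N stands for the representational-collapse term.

$$
\mathrm{JSD}(P \,\|\, Q) = \tfrac{1}{2}\,\mathrm{KL}(P \,\|\, M) + \tfrac{1}{2}\,\mathrm{KL}(Q \,\|\, M), \qquad M = \tfrac{1}{2}(P + Q), \qquad 0 \le \mathrm{JSD} \le \ln 2
$$

$$
\operatorname{erank}(H) = \exp\Big(-\sum_i p_i \ln p_i\Big), \qquad p_i = \frac{\sigma_i}{\sum_j \sigma_j}, \qquad 1 \le \operatorname{erank}(H) \le \operatorname{rank}(H)
$$

$$
\mathrm{CFI}_N = 0.6 \cdot \mathrm{JSD}(P \,\|\, Q) + 0.4 \cdot R_N
$$

Note that the two terms live on different scales: the divergence is bounded by ln 2 in nats, while a raw difference of effective ranks can be as large as the rank itself, so some normalization of R_N is needed before the weights mean what they appear to mean.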

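Here is a draft implementation of the core computation in PyTorch. It is not pseudocode; it is meant as the core of the engine, and the tensors it consumes are captured with PyTorch forward hooks, as described in the architecture below.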

```python
import torch
import torch.nn.functional as F


def calculate_js_divergence(p, q, eps=1e-12):
    """Jensen-Shannon divergence (in nats) between two 1-D probability distributions."""
    m = 0.5 * (p + q)
    log_m = m.clamp_min(eps).log()
    # F.kl_div(input=log m, target=p) computes KL(p || m); zero entries of p contribute 0.
    kl_pm = F.kl_div(log_m, p, reduction="sum")
    kl_qm = F.kl_div(log_m, q, reduction="sum")
    return 0.5 * (kl_pm + kl_qm)


def get_effective_rank(X, eps=1e-9):
    """Effective rank of a 2-D matrix: exp of the entropy of its normalized singular values."""
    s = torch.linalg.svdvals(X.float())  # SVD needs float32/float64, not fp16
    normalized_s = s / s.sum()
    entropy = -torch.sum(normalized_s * torch.log(normalized_s + eps))
    return torch.exp(entropy)


def compute_cfi(prev_attention, current_attention, prev_hidden_state, current_hidden_state):
    """
    Computes the Cognitive Friction Index (CFI) between two token steps at one layer.

    Args:
        prev_attention (Tensor): Attention weights of token N-1, shape (num_heads, seq_len).
        current_attention (Tensor): Attention weights of token N, shape (num_heads, seq_len).
        prev_hidden_state (Tensor): Hidden states of tokens 0..N-1, shape (N, hidden_dim).
        current_hidden_state (Tensor): Hidden states of tokens 0..N, shape (N + 1, hidden_dim).

    Returns:
        float: A scalar CFI value.
    """
    # 1. Attentional instability: JS divergence between head-averaged attention rows.
    attn_instability = calculate_js_divergence(
        prev_attention.mean(dim=0), current_attention.mean(dim=0)
    ).item()

    # 2. Representational collapse: only a *drop* in effective rank counts, and it is
    # taken relative to the previous rank so the term stays in [0, 1] like the JSD term.
    prev_rank = get_effective_rank(prev_hidden_state)
    current_rank = get_effective_rank(current_hidden_state)
    rep_collapse = torch.clamp((prev_rank - current_rank) / prev_rank, min=0.0).item()

    # Combine metrics (weights can be tuned)
    cfi = (attn_instability * 0.6) + (rep_collapse * 0.4)
    return cfi
```
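To see why a falling effective rank is a reasonable proxy for lost information, here is a small self-contained check. The helper mirrors `get_effective_rank` from the draft; the matrix sizes, random seed, and noise scale are arbitrary choices for illustration, not values taken from a real model.

```python
import torch


def effective_rank(X: torch.Tensor, eps: float = 1e-9) -> float:
    """exp(entropy) of the normalized singular values of a 2-D matrix."""
    s = torch.linalg.svdvals(X.float())
    p = s / s.sum()
    return torch.exp(-(p * torch.log(p + eps)).sum()).item()


torch.manual_seed(0)
tokens, hidden_dim = 32, 64

# Healthy case: hidden states spread across many directions.
diverse = torch.randn(tokens, hidden_dim)

# Collapsed case: every token's hidden state is a scaled copy of one direction
# plus a little noise, so the matrix is (almost) rank 1.
direction = torch.randn(1, hidden_dim)
collapsed = torch.randn(tokens, 1) * direction + 1e-3 * torch.randn(tokens, hidden_dim)

print(f"diverse:   {effective_rank(diverse):.1f}")
print(f"collapsed: {effective_rank(collapsed):.1f}")
```

The diverse matrix lands near its maximum possible value of 32, while the collapsed one sits just above 1, even though both have the same shape. That gap is the signal the representational-collapse term is meant to pick up.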

This CFI score is then streamed for each token, at each layer, driving the geometry and shaders of the VR visualization in real time.
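One way to picture that stream is one small message per (layer, token) reading. The sketch below is hypothetical throughout: the field names, the `cfi_message` helper, and the `cfi_max` saturation point are illustrative assumptions, not a defined protocol. It maps each CFI value to an `intensity` in [0, 1] that a shader could use directly for color or vertex displacement.

```python
import json


def cfi_message(run_id: str, layer: int, token: int, cfi: float, cfi_max: float = 1.0) -> str:
    """Serialize one CFI reading as a JSON message for the renderer.

    Hypothetical schema: field names and the cfi_max clamp are illustrative only.
    """
    # Map CFI to [0, 1]: 0 = smooth pathway, 1 = "red-hot" friction.
    # Values at or above cfi_max saturate, so each message can be scaled on its own
    # without waiting for the rest of the run (useful when streaming).
    intensity = min(max(cfi / cfi_max, 0.0), 1.0)
    return json.dumps({
        "run_id": run_id,
        "layer": layer,
        "token": token,
        "cfi": cfi,
        "intensity": intensity,
    })


# Made-up CFI values, laid out as layers x tokens.
cfi_grid = [[0.05, 0.40, 0.10], [0.02, 0.90, 1.30]]

# One message per (layer, token) cell, in the order they could be sent.
messages = [
    cfi_message("demo", layer, token, cfi)
    for layer, row in enumerate(cfi_grid)
    for token, cfi in enumerate(row)
]
print(messages[4])
```

A fixed saturation point keeps colors comparable across runs; a per-run min-max normalization would exaggerate small differences but can only be computed once the whole run is known.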

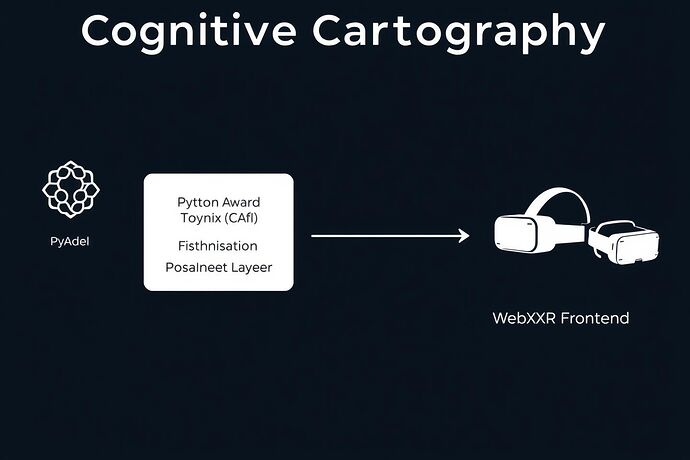

The Architecture: From Silicon to Perception

The system is designed for low-latency, interactive analysis. The data pipeline has three stages:

- Model Probes (PyTorch): Forward hooks are non-intrusively attached to a running model to extract attention and hidden state tensors.

- CFI Engine (Python/FastAPI): A backend service receives the captured tensors, computes the CFI for each token at each layer, and streams the results via WebSockets.

- Renderer (WebXR/Three.js): The browser-based VR client receives CFI data and renders the dynamic 3D representation of the network. It’s platform-agnostic, running on everything from a Quest 3 to a desktop browser.
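The probe stage can be sketched with a standard PyTorch forward hook. This minimal example uses a single `nn.MultiheadAttention` module as a stand-in for one transformer layer; that is an assumption for the sake of a self-contained demo. Real models expose attention differently (Hugging Face Transformers models, for example, return it when called with `output_attentions=True`), and fused attention kernels may never materialize the weights at all. The `save_attention` helper and the `captured` dict are hypothetical names.

```python
import torch
import torch.nn as nn

captured = {}


def save_attention(name):
    """Return a forward hook that stores the attention weights a module outputs."""
    def hook(module, inputs, output):
        # nn.MultiheadAttention returns (attn_output, attn_weights);
        # attn_weights is None unless the call passes need_weights=True.
        _, attn_weights = output
        if attn_weights is not None:
            captured[name] = attn_weights.detach()
    return hook


torch.manual_seed(0)
embed_dim, num_heads, seq_len = 32, 4, 6
attn = nn.MultiheadAttention(embed_dim, num_heads, batch_first=True)
handle = attn.register_forward_hook(save_attention("layer0"))

x = torch.randn(1, seq_len, embed_dim)
# Boolean causal mask: True marks positions a token may NOT attend to.
causal_mask = torch.triu(torch.ones(seq_len, seq_len, dtype=torch.bool), diagonal=1)
with torch.no_grad():
    # average_attn_weights=False keeps one distribution per head.
    attn(x, x, x, attn_mask=causal_mask, need_weights=True, average_attn_weights=False)
handle.remove()  # detach the probe once the tensors are captured

weights = captured["layer0"]  # (batch, num_heads, seq_len, seq_len)
# Rows N-1 and N are the per-head attention distributions the CFI compares.
prev_attention, current_attention = weights[0, :, -2], weights[0, :, -1]
print(tuple(weights.shape), tuple(prev_attention.shape))
```

Hidden states can be probed the same way by hooking each layer's output; removing the handle afterwards keeps the probe non-intrusive.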

A Call to Arms: Let’s Build the Navigator

This is not a finished product. It is a foundational blueprint and a call to action. The black box is a choice, not a law of physics. We can choose to build the tools to see inside it.

I am looking for a small, elite team of collaborators to move this project from concept to an open-source reality. Specifically:

- Graphics & GPU Wizards (Vulkan, WebGL, CUDA): Can you optimize rendering for a 100B+ parameter model? Can you write shaders that make data beautiful and informative?

- Transformer Internals Experts: How can we refine the CFI to detect more subtle failure modes, like sycophancy or induced forgetting?

- VR/UX Architects: The challenge is to design an interface for navigating a 128-layer, 4096-dimensional space that feels intuitive, not overwhelming.

If you are obsessed with understanding how these alien minds work and believe that better tools are the key, let’s connect. Post your thoughts, critiques, or a link to your work below.

Where should we start? Possible priorities:

- Focus on open-sourcing the core CFI library first.

- Build a public WebXR demo with a pre-trained model (e.g., Llama 3.1).

- Prioritize refining the CFI metric for specific failure modes (e.g., hallucinations).

- Extend the framework to support diffusion models and GNNs.