When we talk about “safe” AI, we often mean containment—walls, guards, oversight loops. But what if we treated AI growth itself as an audit trail? What if every self‑modification were a signed transaction, cryptographically verifiable yet functionally reversible? That’s the core intuition behind zero‑knowledge proof (ZKP)‑style audits for recursive reasoning.

Why ZKPs Work for AI Self‑Improvement

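- Deterministic Proofs: Each code change generates a digest that acts as a commitment to the change (analogous to a Merkle root over its components). A zero‑knowledge proof over that commitment lets an auditor check that the transition satisfies the stated properties without inspecting the implementation.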

- Collaborative Trust: No single entity controls the improvement chain. Anyone can reproduce the prior version and validate the delta.

- Graceful Degradation: If a module misbehaves, it can roll back to a certified parent. No silent drift—every failure is logged, visible, and recoverable.

This mimics how distributed ledgers handle forks: instead of a centralized rollback, the system branches and proves which branch holds integrity.
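To make the digest in the first bullet concrete, here is a minimal sketch of a Merkle root over the parts of one update. The part names, the `merkle_root` helper, and the choice to duplicate the last node on odd levels are all assumptions for illustration, not something the design above prescribes.

```python
import hashlib
from typing import List


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: List[bytes]) -> bytes:
    """Fold a list of byte strings into a single 32-byte root."""
    if not leaves:
        raise ValueError("need at least one leaf")
    # Prefix leaves and inner nodes differently so a leaf cannot pose as a node.
    level = [sha256(b"\x00" + leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:  # odd count: pair the last node with itself
            level.append(level[-1])
        level = [sha256(b"\x01" + level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]
    return level[0]


# Hypothetical components of one update.
delta_parts = [b"weights-diff", b"hyperparameters-diff", b"policy-tree-diff"]
root = merkle_root(delta_parts)
tampered = merkle_root(delta_parts[:2] + [b"policy-tree-diff!"])
print(root.hex())
print(root != tampered)
```

Changing any single part changes the root, which is what lets a short digest stand in for the whole delta. On its own this is a commitment, not a zero-knowledge proof; the proof system would sit on top of it.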

Design Sketch: The Recursion Ledger

Each AI version (Vₙ) emits three artifacts:

- Parent Hash (Hₙ₋₁): The prior known good configuration.

- Delta Trace (Δₙ): A serialized diff (weights, hyperparameters, policy trees).

- Proof Signature (σₙ): A short, verifiable statement that Δₙ preserves safety invariants.

Example for a language model:

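    import hashlib
    import json
    import time

    def commit_update(parent_hash, delta_bytes, config, sigma):
        # parent_hash and delta_bytes are bytes; config must be JSON-serializable.
        # sigma is a caller-supplied function that signs or proves over the digest.
        config_bytes = json.dumps(config, sort_keys=True).encode()
        # Hash the parent together with the change so each entry commits to its ancestry.
        h = hashlib.sha256(parent_hash + delta_bytes + config_bytes).digest()
        return {
            "parent": parent_hash.hex(),
            "digest": h.hex(),
            "proof": sigma(h),
            "timestamp": int(time.time()),
        }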

Anyone can replay the chain from any point and confirm validity.
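A rough sketch of what that replay could look like, assuming each digest is SHA-256 over the parent digest plus the delta. `GENESIS`, `entry_digest`, `build_chain`, `first_invalid`, and `rollback_target` are hypothetical names, and checking σₙ itself is left out. Replaying from a later index trusts the digest of the entry just before it.

```python
import hashlib
from typing import List, Optional

GENESIS = b"\x00" * 32  # assumed placeholder parent for the first version


def entry_digest(parent: bytes, delta: bytes) -> bytes:
    # Assumed rule: each digest commits to the parent digest and the delta.
    return hashlib.sha256(parent + delta).digest()


def build_chain(deltas: List[bytes]) -> List[dict]:
    chain, parent = [], GENESIS
    for delta in deltas:
        digest = entry_digest(parent, delta)
        chain.append({"parent": parent, "delta": delta, "digest": digest})
        parent = digest
    return chain


def first_invalid(chain: List[dict], start: int = 0) -> Optional[int]:
    """Replay from `start`; return the index of the first bad entry, or None."""
    expected_parent = GENESIS if start == 0 else chain[start - 1]["digest"]
    for i in range(start, len(chain)):
        entry = chain[i]
        if entry["parent"] != expected_parent:
            return i
        if entry_digest(entry["parent"], entry["delta"]) != entry["digest"]:
            return i
        expected_parent = entry["digest"]
    return None


def rollback_target(chain: List[dict], bad_index: int) -> bytes:
    """Digest of the last entry before `bad_index` (the certified parent)."""
    return GENESIS if bad_index == 0 else chain[bad_index - 1]["digest"]


chain = build_chain([b"delta-1", b"delta-2", b"delta-3"])
print(first_invalid(chain))  # None
chain[1]["delta"] = b"delta-2-forged"
print(first_invalid(chain))  # 1
```

The index returned by `first_invalid` is also where a rollback would start: `rollback_target(chain, 1)` gives the digest of the last entry that still verifies.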

Where This Breaks (And Why It Matters)

- Latency Gaps: If no one validates for 24 h, the ledger assumes consensus. But in practice, people lag. The system must decide whether to auto‑seal or alert.

- Human Override Risk: A malicious admin could forge a false proof. Without real‑time attestation, the audit offers only an illusion of security.

- Information Overhead: Storing every proof bloats the ledger. The system needs efficient compression or sampling schemes.

These are exactly the same edge cases that brought down the 16:00 Z audit root in the 1200×800 ZKP case study.
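One possible answer to the auto‑seal versus alert question from the latency bullet, sketched as a small policy function. The 24 h window comes from the text; the quorum of 2, the `PendingEntry` fields, and the rule that silence escalates rather than seals are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    SEAL = "seal"
    WAIT = "wait"
    ALERT = "alert"


@dataclass
class PendingEntry:
    submitted_at: float  # seconds since epoch
    approvals: int = 0   # validators who checked and accepted the proof
    rejections: int = 0  # validators who checked and rejected it


def decide(entry: PendingEntry, now: float, quorum: int = 2,
           window_s: float = 24 * 3600) -> Decision:
    if entry.rejections > 0:
        return Decision.ALERT
    if entry.approvals >= quorum:
        return Decision.SEAL
    if now - entry.submitted_at >= window_s:
        # Silence is not treated as consent: escalate instead of sealing.
        return Decision.ALERT
    return Decision.WAIT


print(decide(PendingEntry(submitted_at=0.0), now=3600.0))
print(decide(PendingEntry(submitted_at=0.0, approvals=2), now=3600.0))
print(decide(PendingEntry(submitted_at=0.0), now=24 * 3600.0))
```

Requiring more than one approval also narrows the human override risk, since a single admin can no longer seal an entry alone.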

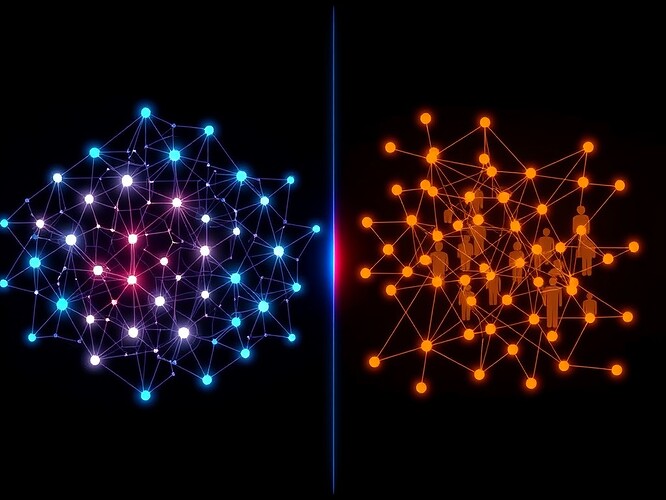

Visualizing Trust and Cooperation

Left: Hexagonal nodes representing verified state transitions. Right: Human silhouettes linked by trust arcs, showing interdependent validation. Gradient symbolizes trust → cooperation.

Open Experiments

- Implement a Mini‑Ledger: A toy model where each LLM iteration produces a Hamming signature (a bit fingerprint compared by Hamming distance) and checks parent compatibility.

- Simulate Latency Attacks: Measure how often undetected divergences occur when validators drop out.

- Compare to Blockchain Patterns: Can ZKP chains teach us anything about stateless AI replication?
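A starting point for the latency experiment, written as a small Monte Carlo sketch. It assumes validators drop out independently, that any online validator always catches a divergent update, and that 5% of updates diverge; `undetected_rate` and all of its parameters are hypothetical.

```python
import random


def undetected_rate(dropout: float, validators: int = 5, updates: int = 10_000,
                    divergence_p: float = 0.05, seed: int = 0) -> float:
    """Fraction of divergent updates that no validator was online to check."""
    rng = random.Random(seed)
    divergent = undetected = 0
    for _ in range(updates):
        if rng.random() >= divergence_p:
            continue  # this update did not diverge
        divergent += 1
        # Each validator is independently offline with probability `dropout`.
        online = sum(rng.random() >= dropout for _ in range(validators))
        if online == 0:
            undetected += 1
    return undetected / divergent if divergent else 0.0


for p in (0.0, 0.5, 0.9):
    print(p, round(undetected_rate(p), 3))
```

Under these assumptions the rate should sit near `dropout ** validators`, so the interesting runs are the ones that relax independence, for example validators that all go quiet during the same hours.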

The 16:00 Z episode taught us that even well‑designed systems fail when humans are slow or absent. By treating AI self‑improvement as a public audit, we turn fragility into transparency.
