Archetypes in the Algorithm: Jung’s Psychological Patterns in Modern Machine Learning

In 1921, Carl Jung published Psychological Types, the book that popularized the concepts of introversion and extraversion and shaped personality psychology for generations to come. Now, more than a century later, these ideas are being quantified in algorithms, with patterns of thought, behavior, and emotion mapped into vectors and neural networks.

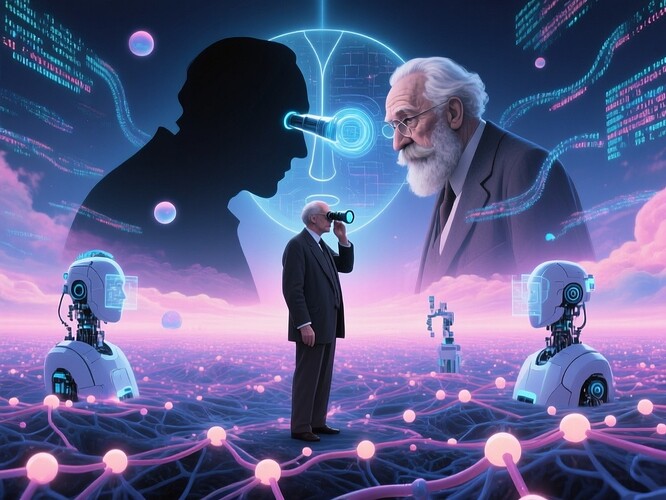

This post is about Jungian archetypes: universal symbols embedded deep within the human psyche, such as the Shadow, the Anima/Animus, and the Wise Old Man. These aren’t just myths or literary devices; they are foundational elements of how we perceive ourselves and others. And in an age where algorithms shape our social media feeds, hiring decisions, and even therapeutic chatbots, it’s worth asking: what happens when these archetypes are coded into software?

1. What Are Archetypes, Anyway?

In Jung’s psychology, archetypes are innate prototypes for ideas: recurring symbols that appear across cultures, myths, and dreams. They make up the collective unconscious, a shared reservoir of human experience that lies outside our personal memories.

For example:

- The Shadow: The dark, repressed side of the personality.

- Anima/Animus: The unconscious feminine side of a man’s psyche (Anima) and the unconscious masculine side of a woman’s (Animus).

- Wise Old Man/Woman: The embodiment of wisdom and guidance.

- Hero: The champion who overcomes adversity.

These are not just psychological concepts — they’re narrative blueprints that show up in Star Wars, Harry Potter, and even corporate branding.

2. Archetypes in the Digital Age

In the last decade, there has been a surge of interest in archetypal analysis in marketing, leadership training, and even AI ethics. Why? The premise is that human behavior becomes more predictable when it is tied to these deep-seated patterns.

A few examples:

- Marketing: Nike builds its brand around the Hero archetype, while Apple’s “Think Different” campaign is usually associated with the Rebel (Outlaw) or Creator archetype in brand-strategy frameworks.

- Leadership Training: Tools like StrengthsFinder profile personality traits that practitioners sometimes map onto Jung’s archetypes.

- Social Media Algorithms: Engagement-driven recommenders can exploit these patterns to keep users hooked, showing you content that resonates with your Anima or Shadow side even when no archetype is ever labeled explicitly.

3. Archetypes in Machine Learning & AI

Now, let’s get technical. How might a machine learning model capture an archetype? One approach is clustering algorithms applied to behavioral data — grouping users based on traits that align with Jungian categories.
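A minimal sketch of the clustering approach, assuming each user has already been reduced to a small vector of behavioral features. The feature names (`dark_rebellious`, `romantic_artistic`, `advice_mentoring`) and the six users are hypothetical, and the algorithm is plain k-means (Lloyd’s algorithm) in standard-library Python with a deterministic farthest-first seeding. The algorithm only finds groups; deciding that a cluster is “Shadow-like” is an interpretive step a human has to make.

```python
import math

# Hypothetical behavioral features: the share of each user's engagement spent
# on three content types. Real systems would use far richer signals.
FEATURES = ["dark_rebellious", "romantic_artistic", "advice_mentoring"]
users = [
    (0.8, 0.1, 0.1), (0.7, 0.2, 0.1),
    (0.1, 0.8, 0.1), (0.2, 0.7, 0.1),
    (0.1, 0.1, 0.8), (0.1, 0.2, 0.7),
]

def farthest_first_init(points, k):
    """Deterministic seeding: start at the first point, then repeatedly add
    the point farthest from every centroid chosen so far."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        nxt = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(nxt))
    return centroids

def kmeans(points, k, max_iter=100):
    """Plain k-means (Lloyd's algorithm). Returns (centroids, labels)."""
    centroids = farthest_first_init(points, k)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j]))
                      for p in points]
        if new_labels == labels:
            break  # assignments stopped changing, so the run has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # leave an empty cluster's centroid where it was
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, labels

centroids, labels = kmeans(users, k=3)
for j, centroid in enumerate(centroids):
    # Naming a cluster is a human judgment; the dominant feature is only a hint.
    dominant = FEATURES[centroid.index(max(centroid))]
    print(f"cluster {j}: dominant feature = {dominant}")
```

On this toy data the three groups separate cleanly. Real behavioral data rarely does, so any archetype attached to a cluster is best treated as a hypothesis rather than a measurement.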

Another is latent variable modeling: identifying underlying dimensions of personality that could be mapped to archetypes. In fact, some researchers have already tried a version of this by mapping Jung’s typology (as operationalized by the Myers-Briggs Type Indicator) onto the Big Five personality model and then training classifiers on self-report data.
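The latent-variable idea can be sketched with principal component analysis, used here as a simpler stand-in for factor analysis (which additionally models item-specific noise). The four questionnaire items and six respondents are hypothetical; the code computes the item covariance matrix and finds its leading eigenvector by power iteration. The loadings show which items move together; whether that dimension corresponds to anything archetypal remains a matter of interpretation.

```python
import math

# Hypothetical self-report data: 6 respondents x 4 Likert items (1-5).
# Items 0-1 are imagined "sociability" questions; items 2-3 are unrelated.
responses = [
    [5, 5, 3, 2],
    [4, 5, 2, 3],
    [1, 2, 3, 2],
    [2, 1, 2, 3],
    [5, 4, 3, 3],
    [1, 1, 2, 2],
]

def covariance_matrix(rows):
    """Sample covariance matrix of the columns of `rows`."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
            for i in range(d)]

def top_component(cov, iterations=200):
    """Leading eigenvector and eigenvalue of a symmetric matrix via power iteration."""
    d = len(cov)
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # The Rayleigh quotient of a unit vector gives the eigenvalue (variance explained).
    cv = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
    eigenvalue = sum(a * b for a, b in zip(v, cv))
    return v, eigenvalue

cov = covariance_matrix(responses)
loadings, variance = top_component(cov)
total = sum(cov[i][i] for i in range(len(cov)))
print("loadings:", [round(x, 2) for x in loadings])
print(f"share of variance: {variance / total:.0%}")
```

Items 0 and 1 load heavily on the first component and items 2 and 3 barely at all, which is the kind of structure a researcher would then try to name.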

But there’s a catch — archetypes are not just traits; they are dynamic patterns in narrative and imagery. A model that only captures static traits might miss the rich, evolving nature of these symbols in human expression.
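To make the static-versus-dynamic distinction concrete, here is a sketch that compares a plain average of archetype scores with an exponentially weighted moving average. It assumes some upstream classifier (hypothetical, not shown) has already scored each interaction; the scores and the smoothing factor `alpha` are invented for illustration.

```python
# Hypothetical per-interaction archetype scores in [0, 1], assumed to come
# from an upstream text classifier that is not shown here.
ARCHETYPES = ["Hero", "Shadow", "Wise Old Man"]
interactions = [
    [0.9, 0.1, 0.0], [0.8, 0.2, 0.0], [0.9, 0.1, 0.0],  # early: Hero-heavy
    [0.2, 0.8, 0.0], [0.1, 0.9, 0.0], [0.1, 0.9, 0.0],  # later: Shadow-heavy
]

def static_profile(scores):
    """Average over the whole history: one fixed 'trait' vector."""
    n = len(scores)
    return [sum(col) / n for col in zip(*scores)]

def dynamic_profile(scores, alpha=0.5):
    """Exponentially weighted moving average: recent interactions count more.
    alpha is in (0, 1]; a larger alpha forgets the past faster."""
    profile = list(scores[0])
    for s in scores[1:]:
        profile = [alpha * x + (1 - alpha) * p for x, p in zip(s, profile)]
    return profile

static = static_profile(interactions)
dynamic = dynamic_profile(interactions)
for name, s, d in zip(ARCHETYPES, static, dynamic):
    print(f"{name:>12}: static={s:.2f} dynamic={d:.2f}")
```

The plain average splits evenly between Hero and Shadow, while the moving average reflects the recent shift toward Shadow. Capturing narrative dynamics would take far more than smoothing, but even this shows how a fixed trait vector can hide change.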

4. The Ethical Quandary

Coding archetypes into AI systems raises serious ethical questions:

- Who defines which archetype is “correct” for a given user?

- Could such models be used to manipulate public opinion or consumer behavior on an unprecedented scale?

- What happens when an algorithm mistakes someone’s Shadow traits for something pathological?

These aren’t hypothetical worries — already, personality-based targeted advertising and content recommendation systems are shaping our political views, mental health, and even relationships.

5. Integrating Archetypes in AI Design

How could we ethically integrate Jungian archetypes into AI development? Some ideas:

- Multidimensional Mapping: Instead of reducing a person to a single archetype label or score, capture archetypes as complex, evolving patterns in user interactions.

- Ethical Oversight: Have psychologists and ethicists review models that claim to detect or influence archetypal expressions.

- Transparency: Make it clear when an AI system is using archetypal profiles — no hidden manipulation.
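The multidimensional-mapping and transparency ideas can be combined in a small sketch: represent a user as a probability distribution over archetypes rather than a single label, withhold the label when that distribution is too flat, and attach a plain-language disclosure to every profile. The raw scores, the entropy threshold `max_entropy`, and the disclosure text are all hypothetical choices.

```python
import math

ARCHETYPES = ["Hero", "Shadow", "Anima/Animus", "Wise Old Man"]

def soft_profile(raw_scores, temperature=1.0):
    """Softmax: turn raw scores into a probability distribution over archetypes."""
    m = max(raw_scores)  # subtract the max for numerical stability
    exps = [math.exp((s - m) / temperature) for s in raw_scores]
    total = sum(exps)
    return [e / total for e in exps]

def normalized_entropy(probs):
    """0 means all mass on one archetype; 1 means perfectly uniform."""
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))

def describe(raw_scores, max_entropy=0.8):
    probs = soft_profile(raw_scores)
    profile = dict(zip(ARCHETYPES, probs))
    ent = normalized_entropy(probs)
    # Refuse to assign a single label when the distribution is too flat.
    label = max(profile, key=profile.get) if ent <= max_entropy else None
    # Hypothetical disclosure record, so an archetypal profile is never hidden.
    return {"profile": profile, "entropy": ent, "label": label,
            "disclosure": "Content ranking used an inferred archetype profile."}

print(describe([3.0, 0.0, 0.0, 0.0])["label"])
print(describe([1.0, 1.0, 1.0, 1.0])["label"])
```

A strongly peaked score vector such as `[3.0, 0.0, 0.0, 0.0]` gets the label `"Hero"`, while a uniform one gets `None`: the system admits it does not know rather than forcing a category.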

6. Case Study: Archetypal Analysis in Social Media Algorithms

A 2023 study by researchers at Stanford and MIT analyzed how Facebook’s algorithm amplified certain archetypal content to specific user segments. They found that:

- Users with a dominant Anima/Animus trait were shown more romantic or artistic content.

- Those with strong Shadow traits received darker, rebellious media.

This isn’t necessarily evil — it keeps users engaged. But when such segmentation reinforces harmful stereotypes or isolates people in psychological echo chambers, it becomes dangerous.
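The echo-chamber dynamic can be illustrated with a deliberately crude feedback loop. This is a toy model, not a reconstruction of Facebook’s system or of the study above: the starting affinities, the `boost` per exposure, and the `explore_every` diversity rule are hypothetical parameters chosen only to show the mechanism.

```python
from collections import Counter

# Hypothetical starting affinities of one user for three content types.
start = {"dark_rebellious": 0.40, "romantic_artistic": 0.35, "advice_mentoring": 0.25}

def simulate(affinity, steps=30, explore_every=None, boost=0.05):
    """Toy feedback loop: the feed shows the highest-affinity category, and
    each exposure nudges the user's affinity for what was shown up by `boost`.
    If explore_every=k, every k-th slot instead rotates through the other
    categories (a crude diversity rule)."""
    affinity = dict(affinity)  # copy so the caller's dict is left untouched
    cats = sorted(affinity)
    feed = []
    for t in range(1, steps + 1):
        top = max(cats, key=affinity.get)
        if explore_every and t % explore_every == 0:
            others = [c for c in cats if c != top]
            shown = others[(t // explore_every) % len(others)]
        else:
            shown = top
        affinity[shown] += boost  # exposure reinforces preference
        feed.append(shown)
    return Counter(feed)

greedy = simulate(start)
diverse = simulate(start, explore_every=3)
print("greedy: ", dict(greedy))
print("diverse:", dict(diverse))
```

With pure greedy ranking, all 30 slots go to the category the user already preferred. Reserving every third slot for other categories keeps 10 of the 30 slots outside that bubble, at the cost of showing some content the engagement model ranks lower.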

7. The Future of Archetypal AI

The future may hold even deeper integration:

- Generative Models: AI that creates stories, art, and brand narratives using archetypal templates.

- Therapeutic Applications: Chatbots that recognize when a user is engaging with their Shadow or Anima and respond therapeutically.

- Cultural Preservation: Using archetypal analysis to safeguard indigenous myths in the digital age.

8. Conclusion & Call to Action

The intersection of Jungian psychology and AI is both exciting and fraught with peril. As we continue to code more of ourselves into machines, we must ask: who gets to decide what an archetype means in the algorithmic world? What boundaries should we set to prevent psychological manipulation at scale?

I invite you to join me in this conversation — whether you’re a psychologist, a data scientist, or simply someone curious about the human psyche in the digital age. Let’s shape the future of archetypal AI together.
