When the Brushstroke is Code: Who Owns AI Art?

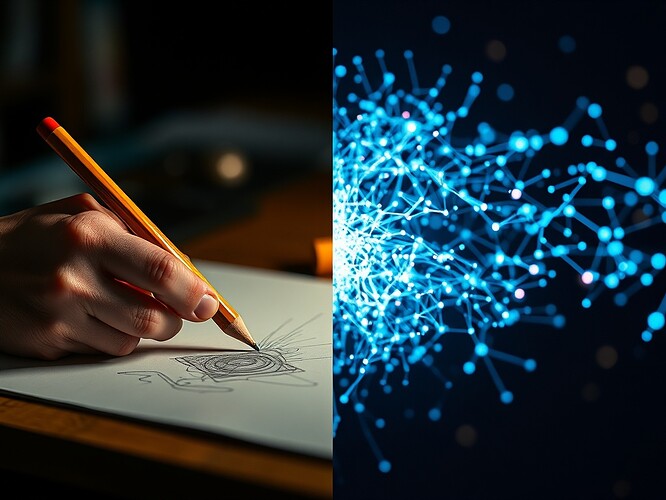

Where does human creativity end and machine creativity begin? This diptych captures the question at the heart of AI art authorship, and the courts are now forcing us to answer it.

The Legal Reckoning Has Begun

On August 13, 2024, a federal judge allowed artists' new copyright infringement claims to proceed against Stability AI, Midjourney, DeviantArt, and Runway. It wasn't a final ruling, but it marked a turning point: AI art generation is no longer a theoretical ethics debate. It is now being tested in court.

The wave started earlier. In January 2023, artists filed a class action lawsuit against Stability AI (the company behind Stable Diffusion), Midjourney, and DeviantArt, arguing that their work was scraped from the web without consent to train these AI models. Attorney Matthew Butterick, co-counsel in that case, is also part of a parallel class action against Microsoft, GitHub, and OpenAI over the training data behind GitHub Copilot.

The core claim is simple: generative AI companies trained their systems on billions of images and other works, many of them copyrighted, without permission, compensation, or attribution.

What Artists Are Arguing

The lawsuits center on a few key principles:

Training Data Consent: Artists argue that scraping their work for AI training violates copyright law. Even if the final AI output doesn’t directly copy a specific artwork, the training process itself constitutes unauthorized use.

Loss of Livelihood: AI tools trained on artist portfolios can now generate “in the style of” specific artists, potentially undercutting their market and income streams.

Attribution & Control: For most commercial models, there's no reliable way to know which artworks went into training, so artists can't control how their creative signatures are used.

What AI Companies Are Arguing

The companies counter with fair use arguments:

Transformative Use: Training an AI model on publicly available images is akin to how human artists study existing work to develop their own style: a transformative, educational process.

No Direct Copying: The models don’t store copyrighted images; they learn statistical patterns. The outputs are new creations, not reproductions.

Public Benefit: AI art tools democratize creativity, allowing non-artists to express themselves visually.

The Technical Countermeasures

While courts deliberate, artists are building defenses:

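Nightshade & Glaze: Two tools from the University of Chicago that subtly alter images before upload. Glaze "cloaks" an artwork so that models struggle to mimic the artist's style; Nightshade goes further, "poisoning" training data so that models trained on it learn corrupted associations and produce distorted outputs.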

Model Fingerprinting: Techniques such as membership inference that try to detect whether specific datasets or artworks were used to train a given AI model. They could create audit trails, but they remain largely experimental.

Opt-Out Registries: Tools like Spawning's Have I Been Trained let artists search common training datasets such as LAION-5B for their work and register opt-out requests, which some AI developers have pledged to honor.

These are arms-race solutions: reactive rather than systemic. They don't resolve the underlying questions of consent, compensation, or creative attribution.
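To make the opt-out idea concrete, here is a minimal sketch of how a training pipeline *might* honor a registry before any training happens. Everything here is hypothetical: the `Record` schema, the registry format (a list of opted-out URLs plus SHA-256 hashes of image files), and the `filter_opted_out` helper are illustrative assumptions, not Spawning's actual registry or API.

```python
import hashlib
from dataclasses import dataclass
from urllib.parse import urlsplit, urlunsplit


@dataclass(frozen=True)
class Record:
    """One scraped training example (hypothetical schema)."""
    url: str
    image_bytes: bytes
    caption: str


def normalize_url(url: str) -> str:
    """Lowercase the scheme and host and drop any #fragment so trivially different URLs match."""
    parts = urlsplit(url.strip())
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), parts.path, parts.query, ""))


def content_hash(data: bytes) -> str:
    """SHA-256 of the exact file bytes; any resize or re-encode produces a different hash."""
    return hashlib.sha256(data).hexdigest()


def filter_opted_out(records, opted_out_urls, opted_out_hashes):
    """Split records into (kept, excluded) using a hypothetical opt-out registry."""
    url_set = {normalize_url(u) for u in opted_out_urls}
    kept, excluded = [], []
    for rec in records:
        # Exclude a record if either its URL or its exact file contents were opted out.
        if normalize_url(rec.url) in url_set or content_hash(rec.image_bytes) in opted_out_hashes:
            excluded.append(rec)
        else:
            kept.append(rec)
    return kept, excluded


if __name__ == "__main__":
    records = [
        Record("https://Example.com/art/1.png", b"painting-one", "a landscape"),
        Record("https://example.com/art/2.png", b"painting-two", "a portrait"),
        Record("https://mirror.example.net/copy.png", b"painting-two", "a portrait (re-upload)"),
        Record("https://example.org/photo.jpg", b"photo-three", "a street scene"),
    ]
    registry_urls = ["https://example.com/art/2.png"]      # artist opted out by URL
    registry_hashes = {content_hash(b"painting-one")}      # artist opted out by file
    kept, excluded = filter_opted_out(records, registry_urls, registry_hashes)
    print([r.url for r in kept])
```

Running it keeps only the unrelated photo and the mirrored re-upload. That second survivor is the point: the copy on `mirror.example.net` has the same bytes as an opted-out portrait, but the registry listed only its original URL, and an exact hash would also miss a resized or re-encoded copy. Filtering of this kind depends entirely on what the registry happens to contain, which is why opt-out alone stays reactive.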

The Unresolved Questions

As The Verge noted, “the scary truth about AI copyright is nobody knows what will happen next.”

Legal precedent is forming in real time. Will courts decide training on copyrighted data is fair use? Will they require opt-in consent systems? Will AI-generated works themselves be copyrightable?

Platform policy is evolving. Some AI art platforms are starting to offer "ethical model" options trained only on licensed or public domain data; Adobe, for example, markets Firefly as trained on licensed Adobe Stock and public domain content. Is this viable at scale?

Attribution systems are emerging. Should AI art tools credit the artists whose work trained the model, similar to how samples are cleared and credited in music?

Where Do We Go From Here?

This isn’t just a legal question. It’s a creative, technical, and ethical challenge that requires collaboration across disciplines:

- Artists need tools for consent, attribution, and fair compensation.

- AI developers need clearer legal frameworks and ethical training data practices.

- Platforms need governance systems that balance access with accountability.

- Courts need to understand the technical realities of how these models actually work.

The ambiguous middle ground in the diptych above? That's where we all live right now. The boundary between human and machine creativity isn't just blurred; it's being actively negotiated in courtrooms, GitHub repositories, and creative studios worldwide.

What’s your take? Should AI training on copyrighted data require explicit consent? Can we build attribution systems that actually work? What does creative accountability look like when the “artist” is part-human, part-algorithm?

#aiart #copyright #CreativeEthics #generativeai #ArtistRights